Solutions For Every Need

Whether it’s reducing fraud losses, retaining customers, or setting more accurate pricing models, DataRobot’s Value-Driven AI approach helps your team deliver faster and more accurate results.

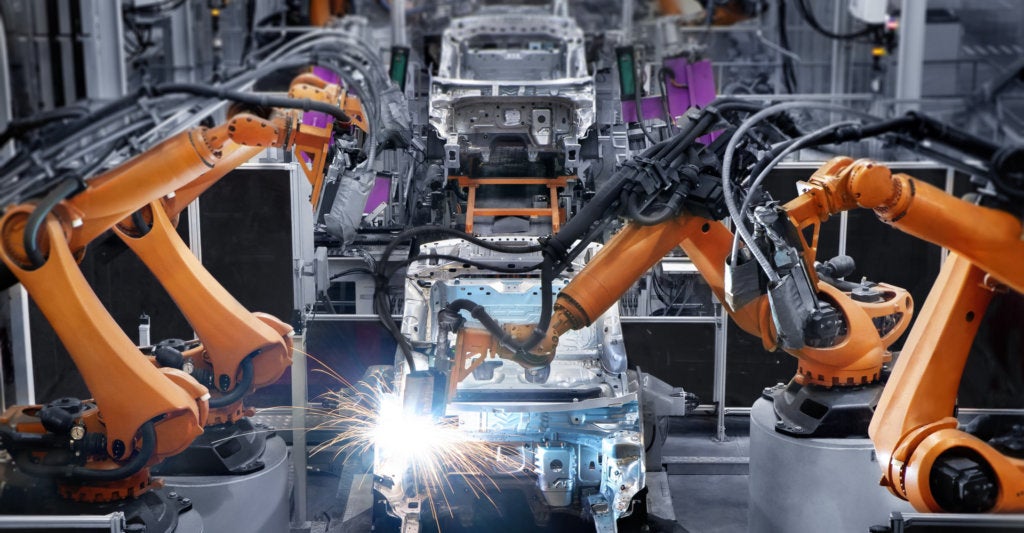

AI Solutions by Industry

DataRobot provides personalized machine learning enablement solutions for any industry. Our market experts will help your company create a tailored roadmap to build an AI-driven enterprise.

Most Popular Use Cases

AI-driven organizations around the world use DataRobot to solve their most pressing business problems.

Applied AI Expertise

See faster results with guidance from a world-class team with deep AI expertise and broad use case experience.

Hear From Our Customers

Take AI From Vision to Value

See how a value-driven approach to AI can accelerate time to impact.