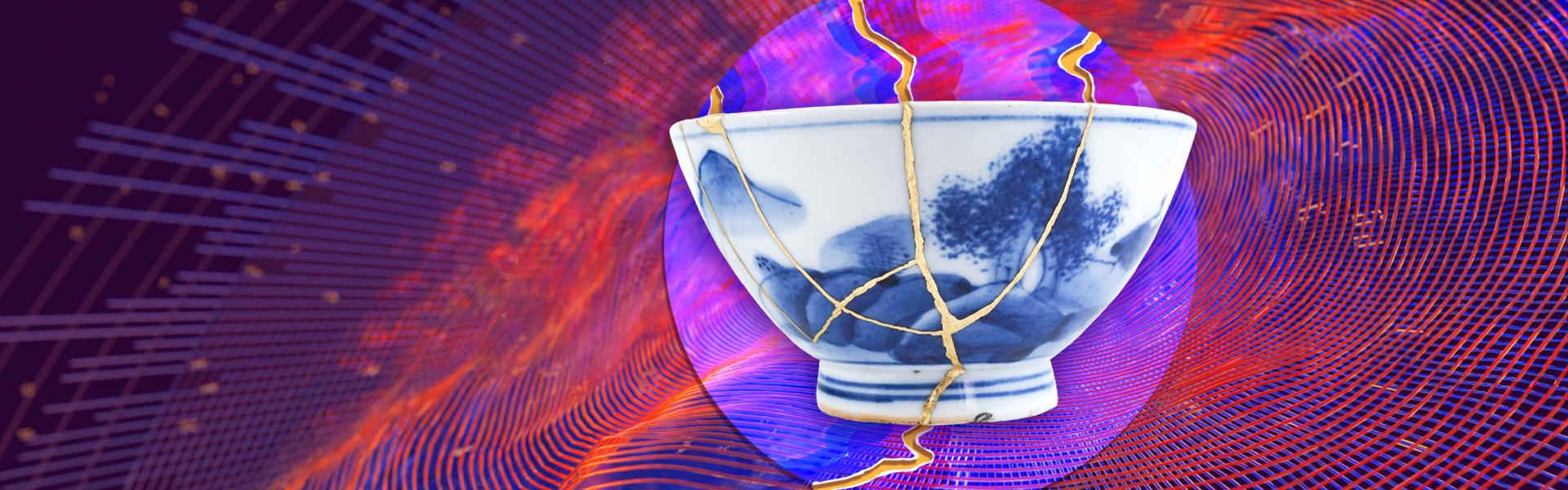

Kintsugi — the Art of Repair

Kintsugi is a Japanese art form that honors the effort given to repair, emphasizing rather than hiding the broken pieces. In a practice that originated in the 15th century, broken vessels from Japanese tea ceremonies were repaired with precious metals, and the fused repairs were embraced as a visually elegant historical record of everyday objects. In time, this embrace of the flawed, imperfect life of the vessel became a metaphor for similar aspects of human life. Ernest Hemingway expressed it this way: “The world breaks everyone and afterward many are strong at the broken places.” In other words, broken people are often made stronger through the effort of self-repair.

When listening to data scientists discuss the inherent flaws in existing AI models, you’ll often hear the phrase, “All models are wrong, but some are useful.” Reality may be complicated, and humans complex, but in the end it’s the illuminating results that are necessary for progress.

It is accepted wisdom that—despite being crafted with as much skill and artisanship as a ceramics master—some models, when faced with the complexities of reality, break. Likewise, kintsugi is considered a contemplative approach to dealing with the repair of something useful, in a way that highlights the cracks and is transparent to everyone.

Perhaps there are other lessons here that we can take from art and literature. Is there a way to embrace the spirit of this methodology in the design of our systems so that they reflect this repair? In kintsugi, the gold lines create a completely new look for the bowl. It isn’t about tweaking a model in production as much as it is about identifying what breaks when we don’t expect it to and deciding what we should do about it. In machine learning, the “art of repair” needs to address transparency in order to create trust. The gold lacquer stands for the ethical bonds that make our products stronger by highlighting the efforts of the past.

Pattern Tracking is the Artistry

Both recognizing patterns and historical record-keeping have been critical to the evolution of civilizations. History is fundamentally about the passage of time. In kintsugi, the history of an object doesn’t change its fundamental shape, nor does the repair. Repetition deepens a pattern’s importance, and historical records keep civilizations accountable for those patterns and the changes they bring about. This awareness becomes an evolutionary advantage by creating landmarks for our memories. As the aphorism goes, those who forget the past are doomed to repeat it. Kintsugi incorporates its past by wrapping itself around its history. Can AI annotate itself to provide us that awareness?

To do so, we must review the errors made in our algorithms over time within the context of repairing a broken vessel. If we simply discard or hide the lessons being learned now by our algorithms, society will not benefit and learn from those mistakes in the aggregate.

Our instinctive, cultural bias against defects challenges our ability to err, adjust, and improve. When things break, we throw them away. Many of the products we develop are designed to be disposable when the repair costs as much as the original device. Technology that could be repurposed becomes waste instead.

What impact could this awareness have on the tech industry’s approach to AI? Rather than sensationalize or shame waste through “reputation management,” there should be methods to capture—and even highlight—those cracks in the model. If auditors are interested, let them easily see the repairs. Presenting them with an established willingness to “emphasize the broken pieces” opens the door to constructive, supportive feedback.

How can we design our machine learning systems to do this? By creating a virtuous loop of feedback and improvement that, in turn, can validate a trusted model.

Some possibilities include:

- When building AI, we could shut down a project and tag a model as inadequate by agreeing not to move it to production. When an organization champions this kind of decision without negative consequence, it allows for greater experimentation and more possibilities. Rather than seeking perfectionism and failing, acknowledging the lack of benefits opens the door to other AI/ML projects that could be more impactful.

- We could consider the net risks of the system instead of a single risk. What is the “cost” (financial, reputational) of repairing all the tiny broken pieces in comparison to the benefits the system is providing to the organization and society? In engineering terms, this is similar to the concept of “technical debt,” which incurs hidden costs in reliability and usability and, over time, can become too difficult to maintain. Knowing at what point it is appropriate to throw in the towel (or having the bravery to admit it is time) is key to understanding the weight of one snowflake error against a world of possible good.

- We could evaluate whether a particular AI model is worth salvaging. Is this particular broken vessel absolutely necessary, or is there perhaps a better material or vessel that could entirely replace the one under repair? This lends the focus to the right solution for the problem, rather than whether or not it will work in the first place.

- Rather than focusing on the technical aspects (such as the craftsmanship of the repair), we could set the use case as the target for continuous improvement. Just as an anthropologist might trace the lineage of a broken pottery shard by understanding its entire environment (the application, the contents, and the history), each use case improvement adds to the overall understanding of the alterations to our systems over time.

- Maybe there is a way we could create general awareness of where the system broke down in the past. By doing so, we provide assurance that our skills and attention have created a pot that will not break again in the same place (although it may still break in a different spot or in a different way). Existing risk analysis and mitigation tasks that already provide this type of insight could be adapted to include a historical record, strengthening trust in the system.

- Do we believe that we could allow larger fault lines to become the most visible? While breakage over time is an inevitable risk, this evaluation is less about whether the pot is broken than it is about how we communicate that repair. What is or should be most visible to the community? How can we acknowledge that repairs may not result in something usable or even recyclable without creating more discord? The largest fault lines are what create the “character” of the system. What humans track must be conscious and intentional.

Strength Begets Trust

Everyone agrees that in order to adapt AI to your needs, you have to be able to trust in its outcomes.

If repairing bias in our models makes our systems stronger, then trust is what evolves from that strength. Likewise, what we construct evolves with us.

An essential criterion of that trust is the ability to communicate meaning. Humans cultivate trust through signals and by communicating clearly: by speaking plainly and avoiding buzzwords, by anticipating issues, sharing or acknowledging difficulties, and expressing the truth, even when it’s easier not to. A trusted advisor who shares outcomes with honesty, humility, and a dash of optimism is far more compelling than one who obfuscates conclusions with bravado or hides the facts.

Do we really trust that mistakes won’t happen with our models despite our tacit awareness that changes will occur regardless? Of course not. You don’t need to have been the one who made a mistake for it to impact you. Both the mistake’s author and the downstream casualties must view those golden lines reflecting the errors as paths for learning together.

Imagine if trust lived inside a database—a gallery of broken lines humanity can look at, dissect, and learn from. If we can see and embrace the bias in the overall design, then we must find a way to acknowledge and codify our learning through communication.

For example, the last decade has seen a rise in the use of virtual assistant technology. Amazon, Google, and Microsoft all sell these devices, and all are trained on similar natural language processing that uses sophisticated algorithms to learn from data input and become better at predicting future needs. Recently in the news, Amazon’s Alexa assistant directed a ten-year-old to do a potentially lethal challenge. A company spokesperson told Insider, “Customer trust is at the center of everything we do and Alexa is designed to provide accurate, relevant, and helpful information to customers. As soon as we became aware of this error, we quickly fixed it, and are taking steps to help prevent something similar from happening again.”

This is a reactive response to a public demand. Could the concept of kintsugi highlight this error so that the fix is transparent to all future developers and recorded for posterity outside of the sensationalized and reputation-damaging influence of a media exposé?

Last July the AI Incident Database released the First Taxonomy of AI Incidents as a way to bring transparency to the forefront. It is a brilliant starting point—allowing users worldwide to acknowledge the breaks in their vessels. Many incidents are submitted anonymously and, according to the site, more than half pertain to “systems developed by much smaller organizations.” There are no fixes included as part of this database, only a record of incidents and that damage was done. What if the next step was to propose, debate, or simply include solutions? This could be a beginning to the “art” of repair.

It’s human nature to be resistant to sharing mistakes. Countless models never make it into production because the risk of failure is so high as to be intolerable. The choice becomes one between admitting failure and burying it. No organization willingly airs its blunders by offering them up for external, out-of-context headlines. The Incident Database approach could be one method of historic log keeping reflective of kintsugi. It would allow future developers to see and value experiments over time (measured in decades, not moments). Technical kintsugi could provide trusted explanations and repairs for those examining AI applications in the future.
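To make the idea of a historic log that keeps repairs next to incidents a little more concrete, here is a minimal sketch in Python. It is not how the AI Incident Database works, and every name in it (`Incident`, `Repair`, `RepairLog`, the model name, and the dates) is hypothetical and chosen only for illustration. The one design assumption is that the log is append-only: a crack is never deleted, only annotated with the repairs that followed.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Repair:
    """One 'gold line': a documented fix for an incident (hypothetical schema)."""
    applied_on: date
    description: str


@dataclass
class Incident:
    """One 'crack': a documented failure of a model in use (hypothetical schema)."""
    model: str
    occurred_on: date
    summary: str
    repairs: list[Repair] = field(default_factory=list)

    @property
    def is_repaired(self) -> bool:
        # An incident counts as repaired once at least one fix is recorded.
        return bool(self.repairs)


class RepairLog:
    """Append-only record of incidents; entries are annotated, never removed."""

    def __init__(self) -> None:
        self._incidents: list[Incident] = []

    def record(self, incident: Incident) -> Incident:
        self._incidents.append(incident)
        return incident

    def history(self, model: str) -> list[Incident]:
        """All incidents for a model, oldest first, repaired or not."""
        matches = [i for i in self._incidents if i.model == model]
        return sorted(matches, key=lambda i: i.occurred_on)

    def open_cracks(self) -> list[Incident]:
        """Incidents that have no recorded repair yet."""
        return [i for i in self._incidents if not i.is_repaired]


# Illustrative usage with made-up data.
log = RepairLog()
crack = log.record(
    Incident("demo-assistant", date(2024, 1, 15), "Returned unsafe advice")
)
crack.repairs.append(
    Repair(date(2024, 1, 16), "Blocked the unsafe source and added a review step")
)

for incident in log.history("demo-assistant"):
    status = "repaired" if incident.is_repaired else "open"
    print(f"{incident.occurred_on}: {incident.summary} [{status}]")
```

Because the incident stays in the history after it is repaired, an auditor reading the log sees both the break and the fix, which is the point of the kintsugi metaphor.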

The Future of Repair

The premise of kintsugi has lasted centuries, not just because of its technical execution, but because it says something about humans as creators. For AI, the art of repair can become the fundamental principle that ultimately influences people’s trust in machine intelligence.

Data science can collectively change the AI narrative from fear to acceptance. Let’s illustrate how making better choices, and improving ourselves in the process, is possible if we can communicate trust artfully and for the long haul. Motivation should be based on concern for not serving the greater good, rather than the fear of making mistakes. We need a dialog that emphasizes continual improvement by acknowledging mistakes made in the past:

“Everyone craves a more efficient transaction. Improvisation is dead and curiosity is mortally wounded. If it’s awkward then it’s bad, most people think, when in fact the opposite is true: Awkwardness signals value, a chance to discover something unusual, something unnerving, in the silence between…the cringe.” – Heather Havrilesky

When it comes to work, or intelligence, or physical appearance, or machine learning, our culture sets the standards for perfection. People are quick to judge others for missing the mark, for displaying their awkwardness. Perhaps in the future, in the spirit of kintsugi, we can create repair systems that embrace mistakes by documenting the countless examples of how things can go wrong and, by communicating honestly and clearly, how they were repaired.
