Dynamic Pricing for Non-US Insurers

Overview

Business Problem

A typical customer journey in insurance starts with a prospective client requesting a quote on an insurer’s website. The insurer’s technical cost model returns an initial price based on estimated claim risk, which may then be adjusted by other factors. Some prospects may continue to make their purchase, but many drop off at this point and do not return. This may result from a lower price being available at a competitor, the customer deciding not to buy insurance at all, or a combination of other factors.

Intelligent Solution

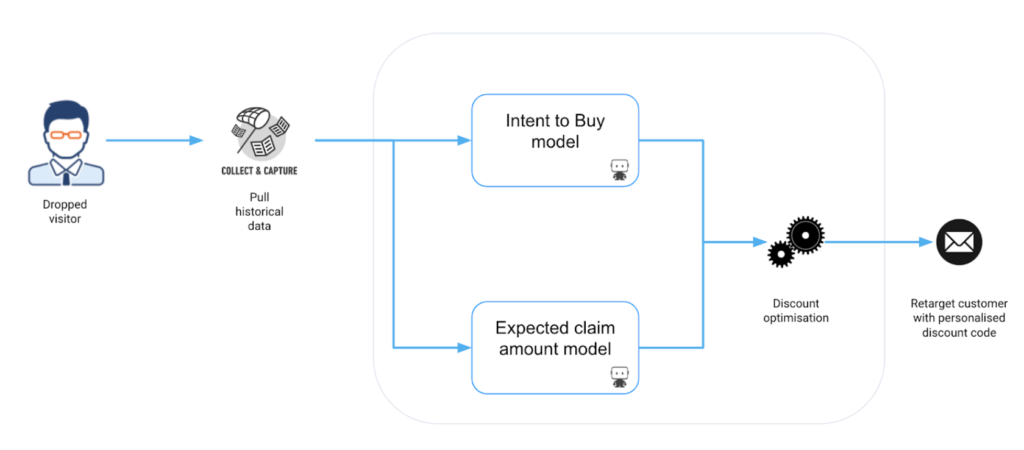

By using models to predict a customer’s propensity to convert, businesses gain an additional lens through which they can personalize the price for a prospect, either by giving discounts in real time or through retargeting. The premium quoted for a policy is traditionally based purely on claims experience and growth strategies. However, if an organization only looks at historical books and a technical cost model, prices might end up being set too low or too high relative to the competition. To win the right prospective customers, an AI approach that combines both claims modeling and intent to buy is required.

Important: this adaptation of dynamic pricing is only feasible outside the United States. View the use case on loss cost modeling to understand how you can leverage AI to create more accurate loss cost models.

Value Estimation

How would I measure ROI for my use case?

For an insurer that sees X session dropouts of average Y premium, the baseline opportunity can be calculated as follows:

Baseline = X * Y

The actual ROI is the premium recovered from intercepted dropouts. If the insurer saves 5% of the dropped sessions, the ROI will be 5% * X * Y.
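The calculation above can be sketched in a few lines of Python. The function names and the figures (10,000 dropped sessions, an average premium of 500) are hypothetical and only illustrate the arithmetic.

```python
def baseline_opportunity(dropout_sessions: int, avg_premium: float) -> float:
    """Baseline = X * Y: total premium attached to dropped sessions."""
    return dropout_sessions * avg_premium


def recovered_premium(dropout_sessions: int, avg_premium: float, save_rate: float) -> float:
    """Premium recovered if a fraction `save_rate` of dropped sessions converts."""
    if not 0.0 <= save_rate <= 1.0:
        raise ValueError("save_rate must be between 0 and 1")
    return save_rate * baseline_opportunity(dropout_sessions, avg_premium)


# Hypothetical inputs: X = 10,000 dropped sessions, Y = 500 average premium.
baseline = baseline_opportunity(10_000, 500.0)
recovered = recovered_premium(10_000, 500.0, 0.05)
print(f"Baseline opportunity: {baseline:,.0f}")
print(f"Recovered at a 5% save rate: {recovered:,.0f}")
```

This measures recovered premium only; discounts given away and the cost of running the program would need to be subtracted for a fuller ROI figure.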

Technical Implementation

Problem Framing

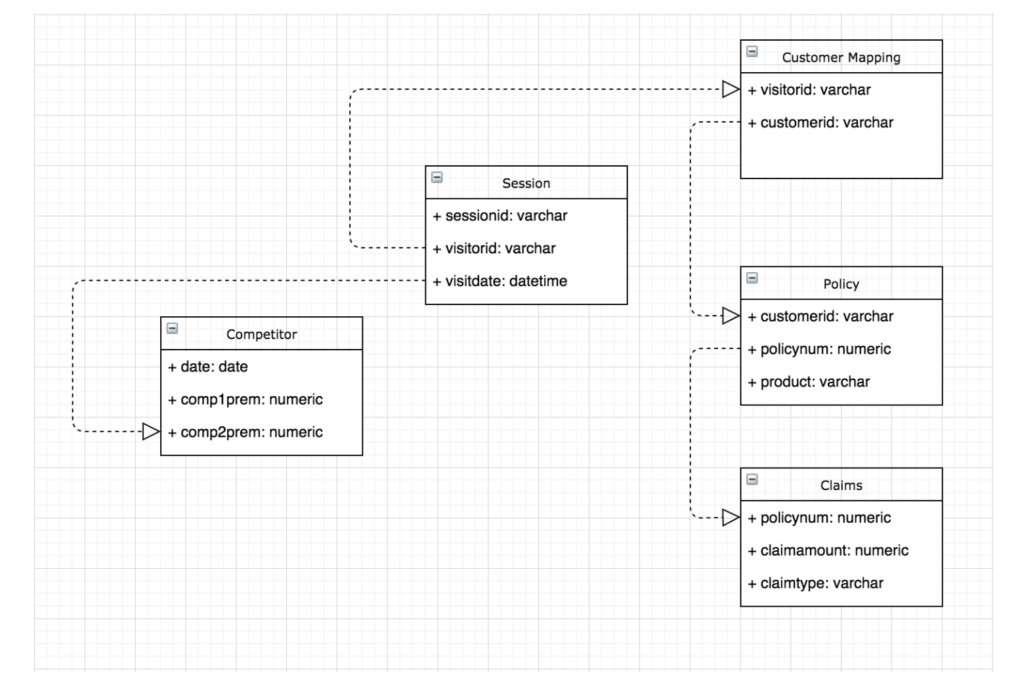

This is a two-model approach, which incorporates predictions for both expected claim amount and intent to buy at a session level. While datasets vary from customer to customer, one common theme is that the data for the two models often resides in several different systems. Policy and claim data may sit in an enterprise data warehouse, while customer engagement data may reside in a CRM like Salesforce or a web analytics platform like Google Analytics.

The target variables for the two models are:

- Predict the expected claim amount for a customer (‘expected claim amount’ model).

- Predict the likelihood a customer will accept an offer (‘intent to buy’ model).

For the modeling of conversion rate, we can potentially also create two models: one for new customers and one for existing customers, since the features and behaviors of the two segments are likely to be different. However, modeling for new customers will not be extensively discussed in this guide.

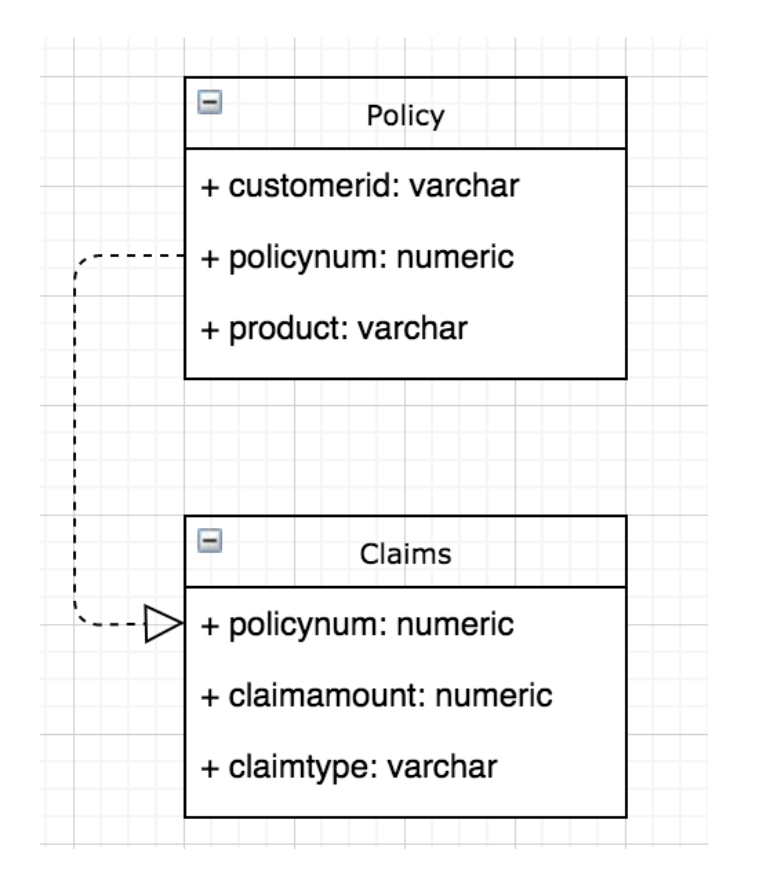

1) The data required to train the ‘expected claim amount’ model looks like the following. This model will use features at a policy level that are captured at the time of quote, with a regression target representing claim amount.

Policy details data:

- This includes a customer’s historical policy details. These may span multiple products and different in-force periods.

- The dataset is at the policy level but can be mapped to a session level using a session-to-customer mapping and subsequent customer-to-policy mapping. Note this will require snapshots and timestamps in order to ensure details are correct as of the time of the session.

Claims data:

- This includes a customer’s past claims.

- The dataset is at the claim level. There can be multiple claims per policy depending on the product type, and multiple policies for a customer. Features may need to be aggregated when mapped back to a session through a session -> customer -> policy -> claim join.
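The session -> customer -> policy -> claim join described above can be sketched as follows. This is a minimal illustration using only the Python standard library; the table layouts, field names, and toy records are all hypothetical. Claims are filtered to those dated before the session so that features reflect only what was known at quote time.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical toy tables; real data would come from the warehouse and CRM.
sessions = [
    {"session_id": "s1", "customer_id": "c1", "session_ts": datetime(2023, 6, 1)},
    {"session_id": "s2", "customer_id": "c2", "session_ts": datetime(2023, 6, 2)},
]
policies = [
    {"policy_id": "p1", "customer_id": "c1"},
    {"policy_id": "p2", "customer_id": "c1"},
    {"policy_id": "p3", "customer_id": "c2"},
]
claims = [
    {"policy_id": "p1", "claim_ts": datetime(2022, 3, 10), "amount": 1200.0},
    {"policy_id": "p2", "claim_ts": datetime(2023, 1, 5), "amount": 300.0},
    # Dated after session s1, so it must not leak into s1's features.
    {"policy_id": "p1", "claim_ts": datetime(2023, 7, 1), "amount": 900.0},
]


def claim_features(sessions, policies, claims):
    """Aggregate claim history to one row per session, as of the session time."""
    policies_by_customer = defaultdict(list)
    for policy in policies:
        policies_by_customer[policy["customer_id"]].append(policy["policy_id"])
    claims_by_policy = defaultdict(list)
    for claim in claims:
        claims_by_policy[claim["policy_id"]].append(claim)

    rows = []
    for session in sessions:
        amounts = [
            claim["amount"]
            for policy_id in policies_by_customer.get(session["customer_id"], [])
            for claim in claims_by_policy.get(policy_id, [])
            if claim["claim_ts"] < session["session_ts"]  # point-in-time filter
        ]
        rows.append({
            "session_id": session["session_id"],
            "claim_count": len(amounts),
            "claim_total": sum(amounts),
            "claim_max": max(amounts, default=0.0),
        })
    return rows


for row in claim_features(sessions, policies, claims):
    print(row)
```

Count, total, and maximum are only example aggregations; the right set depends on the product and on how claim severity is distributed.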

2) The data required to train the ‘intent to buy’ model includes Policy and Claims data, but also incorporates additional session (Google Analytics) and competitor data. The unit of analysis for the intent model is at a session level. For every customer session we create a binary target, depending on whether or not the quote ended in conversion.

Session details data:

- Session details like time spent, pages visited, etc.

- This would serve as our base table. Our unit of analysis is at a session level.

- Historical page views from Google Analytics data. We can create different lags like page views over the last day, 7 days, 30 days etc. When creating this dataset, be mindful to keep the lags large enough to reflect system update intervals. For example, if Google Analytics data generally arrives every 15 minutes, and features are engineered based on a 15 minute lag, then at the time of prediction the necessary data may not be available.
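The lagged page-view features and the availability caveat above can be sketched as follows. The function name, the 30-minute availability lag, and the timestamps are hypothetical. The idea is to shift the feature cutoff back by more than the data refresh interval, so that every feature used in training can also be computed at prediction time.

```python
from datetime import datetime, timedelta


def page_views_in_window(view_timestamps, prediction_ts, window, availability_lag):
    """Count page views in [cutoff - window, cutoff).

    cutoff = prediction_ts - availability_lag, so events that may not have
    arrived yet at prediction time are never counted.
    """
    cutoff = prediction_ts - availability_lag
    start = cutoff - window
    return sum(1 for ts in view_timestamps if start <= ts < cutoff)


# Hypothetical page views for one customer.
views = [
    datetime(2023, 6, 10, 11, 50),  # inside the availability lag: ignored
    datetime(2023, 6, 10, 11, 0),
    datetime(2023, 6, 8, 9, 0),
    datetime(2023, 5, 20, 14, 0),
]
prediction_ts = datetime(2023, 6, 10, 12, 0)
lag = timedelta(minutes=30)  # assumed to exceed a 15-minute refresh interval

features = {
    f"page_views_{days}d": page_views_in_window(
        views, prediction_ts, timedelta(days=days), lag
    )
    for days in (1, 7, 30)
}
print(features)
```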

Competitor data:

- A dataset containing information about competitor premiums.

- This should be snapshotted and at a product level. For example, every day premiums for specific products may be scraped from competitor websites. The frequency of scraping may vary from product to product.

- We can create features like: what were the competitor premiums yesterday, what was the rank of competitors in terms of premium size, the difference between our premium and competitors.
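As a rough sketch of the competitor features listed above, the function below takes our quoted premium and the most recent competitor snapshot for the same product. The function name, feature names, and premiums are hypothetical; in practice the snapshot should be the latest one taken before the session.

```python
def competitor_features(our_premium, competitor_premiums):
    """Build price-position features from one competitor snapshot.

    competitor_premiums maps a competitor name to its scraped premium.
    """
    if not competitor_premiums:
        raise ValueError("at least one competitor premium is required")
    values = list(competitor_premiums.values())
    cheapest = min(values)
    average = sum(values) / len(values)
    return {
        # Rank 1 means we are cheaper than every competitor in the snapshot.
        "our_price_rank": 1 + sum(1 for p in values if p < our_premium),
        "cheapest_competitor_premium": cheapest,
        "diff_to_cheapest": our_premium - cheapest,
        "diff_to_average": our_premium - average,
    }


# Hypothetical snapshot from the previous day.
snapshot = {"competitor_a": 500.0, "competitor_b": 540.0, "competitor_c": 610.0}
print(competitor_features(520.0, snapshot))
```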

*Policy

- For every session, we can generate lagged features describing how many policies, potentially across different products, the customer bought in the last month, 3 months, 6 months, 1 year, etc.

*Claim

- Similar to the policy table, we can generate features describing the historical claim severity and number of claims for customers.

*These tables are only applicable for existing customers, and so are most relevant for renewals or repeat customers as opposed to new business.

Model Training

DataRobot Automated Machine Learning automates many parts of the modeling pipeline. Instead of hand-coding and manually testing dozens of models to find the one that best fits your needs, DataRobot automatically runs dozens of models and finds the most accurate one for you, all in a matter of minutes. In addition to training the models, DataRobot automates other steps in the modeling process such as processing and partitioning the dataset.

We will jump straight to post-processing the model predictions and to deployment. Watch demos to see how to use DataRobot from start to finish and how to understand the data science methodologies embedded in its automation.

Post-Processing

The decision the business has to make is not the target of either of the two models that we’ve built. Instead, we want to vary the level of discount in order to maximize the expected return implied by the two models. As a result, we need to make predictions for different scenarios and optimize over the output of the two models.

A standard approach involves calculating a profit metric and setting up your optimization process to maximize profit. Typically, as the level of a discount increases, intent to buy will go up; however, the premium amount will go down and so there is a tradeoff with a worse expected loss ratio. A business will also often impose constraints on minimum and maximum acceptable discount values, and use additional inputs to better estimate profit. By running a variety of different pricing scenarios, we can find the best price to offer to a customer in order to maximize expected return.

For example, a business may decide to offer up to five different levels of discounts. This would involve scoring a customer’s intent to buy five times, with each different discount level, resulting in five predictions of conversion rate. For each of these the expected return can be calculated the same way as a function of the conversion rate and the new discounted premium, and so the best offer can be selected accordingly.
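The scenario scoring described above can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: the expected return is taken to be conversion probability times (discounted premium minus expected claim amount), the `toy_conversion_model` logistic curve stands in for the real intent-to-buy model, and all names and numbers are hypothetical.

```python
import math


def toy_conversion_model(discount: float) -> float:
    """Stand-in for the intent-to-buy model: conversion rises with discount."""
    return 1.0 / (1.0 + math.exp(-(-2.0 + 10.0 * discount)))


def best_discount(base_premium, expected_claim, discount_levels,
                  conversion_model, min_discount=0.0, max_discount=1.0):
    """Score each allowed discount level and return the most profitable one.

    Returns (discount, expected_return, conversion_probability).
    """
    best = None
    for discount in discount_levels:
        if not min_discount <= discount <= max_discount:
            continue  # business constraint on acceptable discounts
        premium = base_premium * (1.0 - discount)
        p_convert = conversion_model(discount)
        expected_return = p_convert * (premium - expected_claim)
        if best is None or expected_return > best[1]:
            best = (discount, expected_return, p_convert)
    if best is None:
        raise ValueError("no discount level satisfies the constraints")
    return best


levels = [0.0, 0.05, 0.10, 0.15, 0.20]  # five hypothetical discount levels
discount, expected_return, p_convert = best_discount(
    base_premium=1000.0,
    expected_claim=700.0,  # output of the expected claim amount model
    discount_levels=levels,
    conversion_model=toy_conversion_model,
)
print(f"best discount={discount:.2f}, expected return={expected_return:.2f}")
```

With these toy inputs the search picks the 15% level: beyond that point the shrinking margin outweighs the gain in conversion. Passing `max_discount=0.10` shows how a business cap changes the chosen offer.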

Business Implementation

Decision Environment

After you finalize a model, DataRobot makes it easy to deploy the model into your desired decision environment. Decision environments are the methods by which predictions will ultimately be used for decision-making.

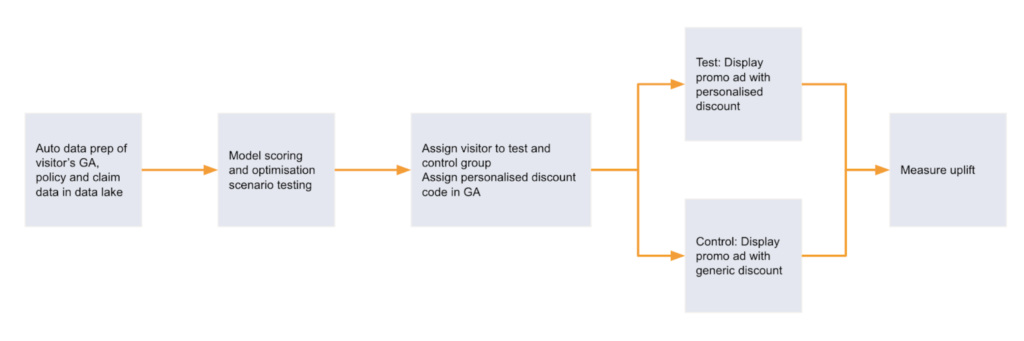

In addition to the post-processing involved in discounting, a business should consider running continuous experimentation in order to capture data on the effectiveness of pricing and on potential shifts in client behavior. A control group that receives generic or randomized discounts provides data that helps build future iterations of the intent to buy model.
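One possible way to carve out such a control group is deterministic hashing of the session identifier, sketched below. The 10% control share, the salt, and the function names are hypothetical choices; the hashing approach itself is an assumption rather than something prescribed here. Hashing keeps the assignment stable across batch runs without storing extra state.

```python
import hashlib


def _bucket(key: str) -> int:
    """Map a string to a stable 32-bit integer using SHA-256."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest()[:8], 16)


def assign_group(session_id: str, control_share: float = 0.10,
                 salt: str = "pricing-exp-1") -> str:
    """Return 'control' for roughly `control_share` of sessions."""
    fraction = _bucket(f"{salt}:{session_id}") / 0x100000000
    return "control" if fraction < control_share else "treatment"


def control_discount(session_id: str, levels, salt: str = "pricing-exp-1"):
    """Pick a pseudo-random discount level for a control-group session."""
    return levels[_bucket(f"{salt}:discount:{session_id}") % len(levels)]


allowed_levels = [0.0, 0.05, 0.10, 0.15, 0.20]
for sid in ("session-001", "session-002", "session-003"):
    group = assign_group(sid)
    offer = control_discount(sid, allowed_levels) if group == "control" else "optimized"
    print(sid, group, offer)
```

Changing the salt starts a fresh experiment with a different split of sessions.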

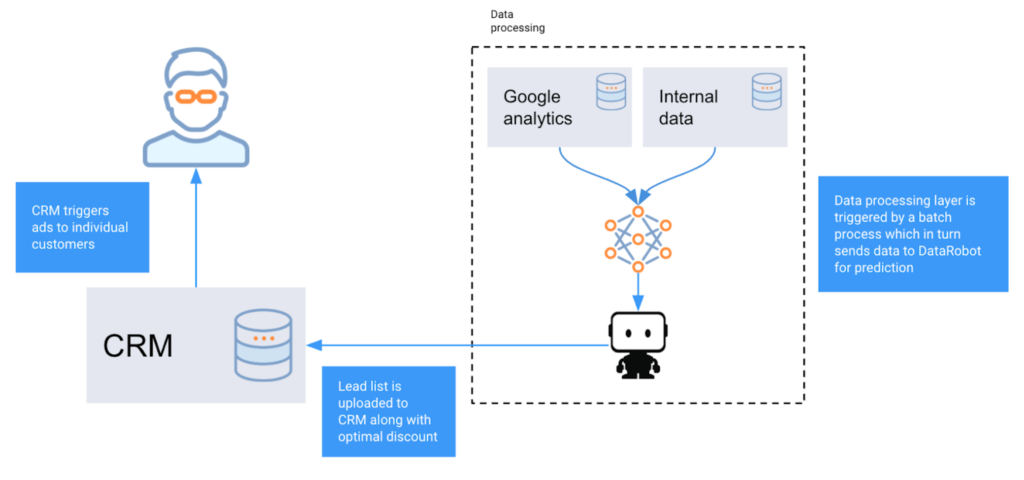

An example decision environment is shown in the following diagram:

Decision Maturity

Automation | Augmentation | Blend

Ultimately, the goal would be to automate the entire workflow and allow a machine to personalize pricing. For customers interacting via a web page or app, where real-time scoring can be implemented, a custom discount can be made available as soon as someone enters sufficient details for a quote.

In some cases, real-time decision making is not possible. In such cases, a business may decide to score batches of prospects who drop out offline, and use retargeting via ads to surface new discounts. In this use case we will focus on the retargeting workflow.

Model Deployment

An example deployment flow may look like the following:

As illustrated above, we periodically trigger a batch process that takes all dropped sessions since the last run and prepares the relevant data to make predictions. This file is then sent to DataRobot for scoring at different discount levels. After completion, user details along with the corresponding best discount level are pushed to the insurer’s Customer Relationship Management (CRM) system. This system can then be configured to trigger campaigns that are personalized for each potential customer.

Decision Stakeholders

Decision Executors

Campaign managers will ultimately define the scope of campaigns. However, pricing teams will likely need to be involved to help define business rules for the different discount levels that can potentially be optimized.

Decision Managers

Marketing managers will oversee campaign performance.

Decision Authors

Data scientists in marketing teams will likely own the modeling process and be responsible for setting up the optimization procedure to decide on an optimal discount.

In addition to data scientists, members of an insurer’s IT team will often need to be involved when setting up the pipeline to call predictions.

Underwriting teams should also be involved closely as the resulting pricing strategies may impact reserving.

Model Monitoring

The models that we’ve created need to be monitored frequently, ideally in near real time. Data drift monitoring is also crucial: if the types of quotes coming into the system change in unexpected ways, a large number of quotes may end up being given a discount. Furthermore, features like competitor premiums may also change over time, which might merit model recalibration.

There is also a risk of models being influenced by bot activity, with competitors or third parties generating artificial quotes. Having the ability to capture data drift here is crucial in understanding the effects and in monitoring the system behavior.
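As a generic illustration of what a drift check can look like, the sketch below computes the Population Stability Index (PSI) for one numeric feature. PSI is a commonly used drift metric chosen here as an example; it is not a description of how DataRobot computes drift internally, and the bin edges and premium values are hypothetical.

```python
import math


def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a training sample (`expected`) and a recent sample (`actual`).

    bin_edges are sorted cut points; values fall into len(bin_edges) + 1 bins.
    Empty bins are floored at `eps` to keep the logarithm finite.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def bin_shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            counts[sum(1 for edge in bin_edges if value >= edge)] += 1
        return [max(count / len(values), eps) for count in counts]

    expected_shares = bin_shares(expected)
    actual_shares = bin_shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical quoted premiums at training time versus last week.
training_premiums = [320, 410, 450, 520, 580, 610, 700, 760, 820, 900]
recent_premiums = [300, 310, 330, 350, 360, 400, 420, 430, 450, 880]
edges = [400, 600, 800]

print(round(population_stability_index(training_premiums, recent_premiums, edges), 3))
```

A PSI near zero means the two distributions look alike. Alert thresholds are a matter of convention and should be tuned per feature.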

Implementation Risks

- There is a lot of automation involved here. A typical implementation will require strong collaboration between IT, CRM, Marketing, and Data Science teams. Having the right project planning and checkpoints during the entire use case life cycle is crucial.

- If you are not using Scoring Code/Codegen, make sure that the live data environment and the DataRobot environment can communicate with each other.

- Make sure the features used are captured by the system at time intervals that are needed for modeling. For example, Google Analytics data should be real time or near real time. Quote data for unsuccessful quotes also should be available. For performance, it may be useful to create a feature mart with pre-calculated views of historical aggregations.

- Monotonic constraints may be very useful for discount optimization. Use them if possible.

- Make sure the data has good enough variability in discount levels.

- Be mindful of regulatory constraints before pitching this project; not all countries allow insurers to price based on elasticity.
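Two of the risks in the list above, monotonicity and discount variability, lend themselves to simple sanity checks. The sketch below is a post-hoc check on scored output and on historical data; it is not a substitute for training a model with monotonic constraints, and the function names and numbers are hypothetical.

```python
from collections import Counter


def is_non_decreasing(values, tolerance=0.0):
    """True if each value is at least the previous one, within `tolerance`."""
    return all(b >= a - tolerance for a, b in zip(values, values[1:]))


def discount_level_shares(historical_discounts):
    """Share of historical quotes observed at each discount level."""
    counts = Counter(historical_discounts)
    total = sum(counts.values())
    return {level: n / total for level, n in sorted(counts.items())}


# Predicted conversion for one session, ordered by increasing discount level.
conversion_by_discount = [0.12, 0.18, 0.27, 0.25, 0.40]
if not is_non_decreasing(conversion_by_discount):
    print("Warning: predicted conversion drops as the discount increases")

# Hypothetical training history: most quotes were never discounted.
history = [0.0] * 90 + [0.05] * 6 + [0.10] * 4
print(discount_level_shares(history))
```

If nearly all historical quotes sit at one discount level, as in this toy history, the intent model has little signal from which to learn price sensitivity, which is one reason to collect randomized discount data first.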
