As great as your AI agents may be in your POC environment, that same success may not make its way to production. Often, those perfect demo experiences don’t translate to the same level of reliability in production, if at all.

Key takeaways

- Production-ready agentic AI requires evaluation, monitoring, and governance across the entire lifecycle, not just strong proof-of-concept results.

- Agentic systems must be evaluated on trajectories, decision-making, and constraints adherence, not just final outputs.

- Continuous monitoring and execution tracing are essential to detect drift, diagnose failures, and iterate safely in production.

- Governance must address security, operational, and regulatory risks as built-in requirements rather than post-deployment controls.

- Economic metrics such as token usage and cost per task are critical to sustaining agentic AI at enterprise scale.

- Organizations that engineer reliability through metrics, observability, and governance are far more likely to succeed with agentic AI in production.

The fundamental challenges

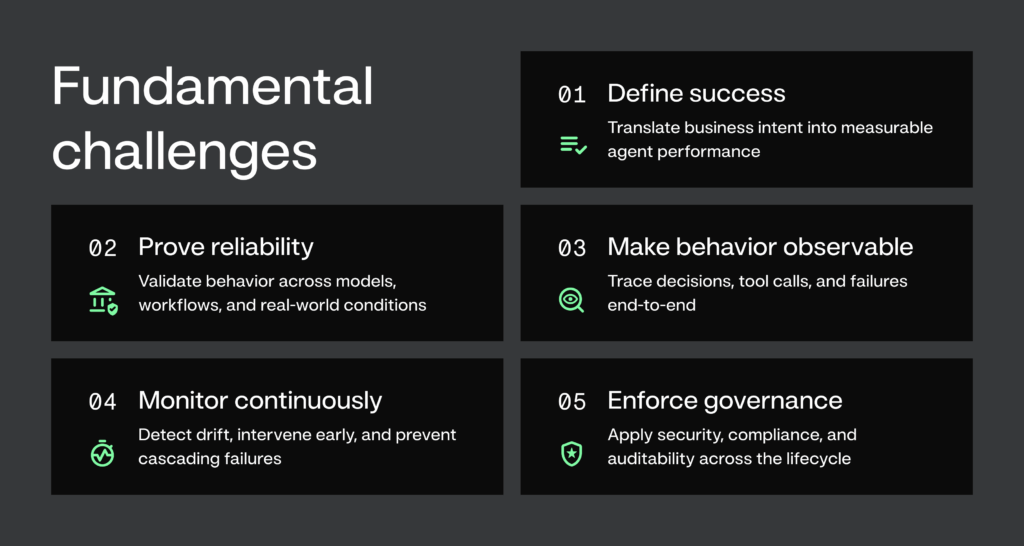

Taking your agents from POC to production requires overcoming these five fundamental challenges:

- Defining success by translating business intent into measurable agent performance.

Building a reliable agent starts by converting vague business goals, such as “improve customer service,” into concrete, quantitative evaluation thresholds. The business context determines what you should evaluate and how you will monitor it.

For example, a financial compliance agent typically requires 99.9% functional accuracy and strict governance adherence, even if that comes at the expense of speed. In contrast, a customer support agent may prioritize low latency and economic efficiency, accepting a “good enough” 90% resolution rate to balance performance with cost.

- Proving your agents work across models, workflows, and real-world conditions.

To reach production readiness, you need to evaluate multiple agentic workflows across different combinations of large language models (LLMs), embedding strategies, and guardrails, while still meeting strict quality, latency, and cost objectives.

Evaluation extends beyond functional accuracy to cover corner cases, red-teaming for toxic prompts and responses, and defenses against threats such as prompt injection attacks.

This effort combines LLM-based evaluations with human review, using both synthetic data and real-world use cases. In parallel, you assess operational performance, including latency, throughput at hundreds or thousands of requests per second, and the ability to scale up or down with demand.

- Ensuring agent behavior is observable so you can debug and iterate with confidence.

Tracing the execution of agent workflows step by step allows you to understand why an agent behaves the way it does. By making each decision, tool call, and handoff visible, you can identify root causes of unexpected behavior, debug failures quickly, and iterate toward the desired agentic workflow before deployment.

- Monitoring agents continuously in production and intervening before failures escalate.

Monitoring deployed agents in production with real-time alerting, moderation, and the ability to intervene when behavior deviates from expectations is crucial. Signals from monitoring, along with periodic reviews, should trigger re-evaluation so you can iterate on or restructure agentic workflows as agents drift from desired behavior over time. And trace root causes of these easily.

- Enforce governance, security, and compliance across the entire agent lifecycle.

You need to apply governance controls at every stage of agent development and deployment to manage operational, security, and compliance risks. Treating governance as a built-in requirement, rather than a bolt-on at the end, ensures agents remain safe, auditable, and compliant as they evolve.

Letting success hinge on hope and good intentions isn’t good enough. Strategizing around this framework is what separates successful enterprise artificial intelligence initiatives from those that get stuck as a proof of concept.

Why agentic systems require evaluation, monitoring, and governance

As Agentic AI moves beyond POCs to production systems to automate enterprise workflows, their execution and outcomes will directly impact business operations. The waterfall effects of agent failures can significantly impact business processes, and it can all happen very fast, preventing the ability of humans to intervene.

For a comprehensive overview of the principles and best practices that underpin these enterprise-grade requirements, see The Enterprise Guide to Agentic AI

Evaluating agentic systems across multiple reliability dimensions

Before rolling out agents, organizations need confidence in reliability across multiple dimensions, each addressing a different class of production risk.

Functional

Reliability at the functional level depends on whether an agent correctly understands and carries out the task it was assigned. This involves measuring accuracy, assessing task adherence, and detecting failure modes such as hallucinations or incomplete responses.

Operational

Operational reliability depends on whether the underlying infrastructure can consistently support agent execution at scale. This includes validating scalability, high availability, and disaster recovery to prevent outages and disruptions.

Operational reliability also depends on the robustness of integrations with existing enterprise systems, CI/CD pipelines, and approval workflows for deployments and updates. In addition, teams must assess runtime performance characteristics such as latency (for example, time to first token), throughput, and resource utilization across CPU and GPU infrastructure.

Security

Secure operation requires that agentic systems meet enterprise security standards. This includes validating authentication and authorization, enforcing role-based access controls aligned with organizational policies, and limiting agent access to tools and data based on least-privilege principles. Security validation also includes testing guardrails against threats such as prompt injection and unauthorized data access.

Governance and Compliance

Effective governance requires a single source of truth for all agentic systems and their associated tools, supported by clear lineage and versioning of agents and components.

Compliance readiness further requires real-time monitoring, moderation, and intervention to address risks such as toxic or inappropriate content and PII leakage. In addition, agentic systems must be tested against applicable industry and government regulations, with audit-ready documentation readily available to demonstrate ongoing compliance.

Economic

Sustainable deployment depends on the economic viability of agentic systems. This includes measuring execution costs such as token consumption and compute usage, assessing architectural trade-offs like dedicated versus on-demand models, and understanding overall time to production and return on investment.

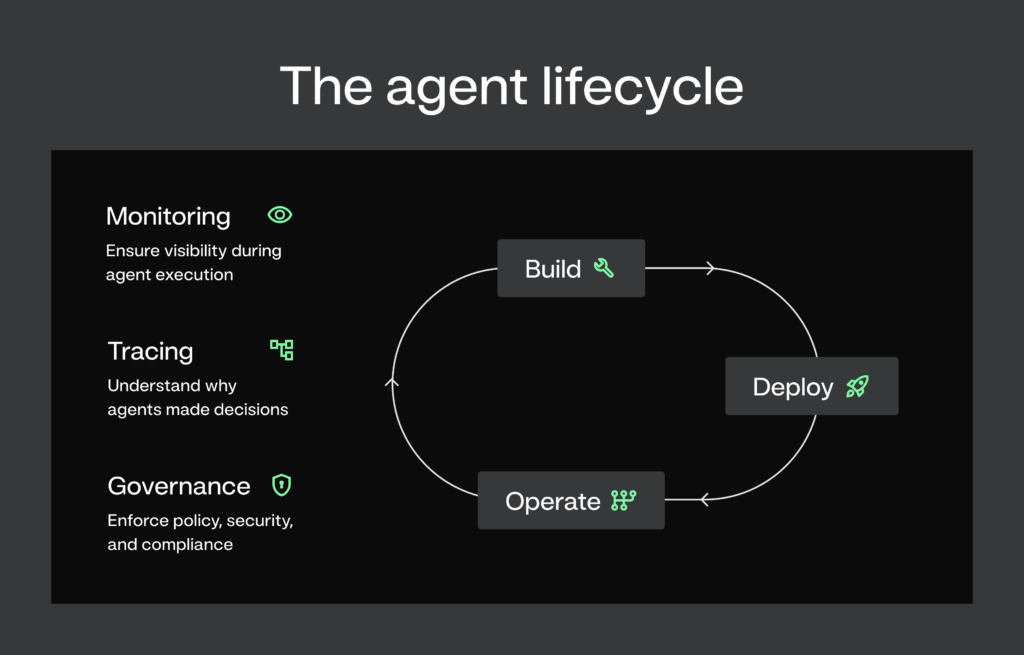

Monitoring, tracing, and governance across the agent lifecycle

Pre-deployment evaluation alone is not sufficient to ensure reliable agent behavior. Once agents operate in production, continuous monitoring becomes essential to detect drift from expected or desired behavior over time.

Monitoring typically focuses on a subset of metrics drawn from each evaluation dimension. Teams configure alerts on predefined thresholds to surface early signals of degradation, anomalous behavior, or emerging risk. Monitoring provides visibility into what is happening during execution, but it does not on its own explain why an agent produced a particular outcome.

To uncover root causes, monitoring must be paired with execution tracing. Execution tracing exposes:

- How an agent arrived at a result by capturing the sequence of reasoning steps it followed

- The tools or functions it invoked

- The inputs and outputs at each stage of execution.

This visibility extends to relevant metrics such as accuracy or latency at both the input and output of each step, enabling effective debugging, faster iteration, and more confident refinement of agentic workflows.

And finally, governance is necessary at every phase of the agent lifecycle, from building and experimentation to deployment in production.

Governance can be classified broadly into 3 categories:

- Governance against security risks: Ensures that agentic systems are protected from unauthorized or unintended actions by enforcing robust, auditable approval workflows at every stage of the agent build, deployment, and update process. This includes strict role-based access control (RBAC) for all tools, resources, and enterprise systems an agent can access, as well as custom alerts applied throughout the agent lifecycle to detect and prevent accidental or malicious deployments.

- Governance against operational risks: Focuses on maintaining safe and reliable behavior during runtime by implementing multi-layer defense mechanisms that prevent unwanted or harmful outputs, including PII or other confidential information leakage. This governance layer relies on real-time monitoring, notifications, intervention, and moderation capabilities to identify issues as they occur and enable rapid response before operational failures propagate.

- Governance against regulatory risks: Ensures that all agentic solutions remain compliant with applicable industry-specific and government regulations, policies, and standards while maintaining strong security controls across the entire agent ecosystem. This includes validating agent behavior against regulatory requirements, enforcing compliance consistently across deployments, and supporting auditability and documentation needed to demonstrate adherence to evolving regulatory frameworks.

Together, monitoring, tracing, and governance form a continuous control loop for operating agentic systems reliably in production.

Monitoring and tracing provide the visibility needed to detect and diagnose issues, while governance ensures ongoing alignment with security, operational, and regulatory requirements. We will examine governance in more detail later in this article.

Differences between agentic tool evaluation and monitoring vs classic ML systems

Many of the evaluation and monitoring practices used today were designed for traditional machine learning systems, where behavior is largely deterministic and execution paths are well defined. Agentic systems break these assumptions by introducing autonomy, state, and multi-step decision-making. As a result, evaluating and operating agentic tools requires fundamentally different approaches than those used for classic ML models.

From deterministic models to autonomous agentic systems

Classic ML system evaluation is rooted in determinism and bounded behavior, as the system’s inputs, transformations, and outputs are largely predefined. Metrics such as accuracy, precision/recall, latency, and error rates assume a fixed execution path: the same input reliably produces the same output. Observability focuses on known failure modes, such as data drift, model performance decay, and infrastructure health, and evaluation is typically performed against static test sets or clearly defined SLAs.

By contrast, agentic tool evaluation must account for autonomy and decision-making under uncertainty. An agent does not simply produce an output; it decides what to do next: which tool to call, in what order, and with what parameters.

As a result, evaluation shifts from single-output correctness to trajectory-level correctness, measuring whether the agent selected appropriate tools, followed intended reasoning steps, and adhered to constraints while pursuing a goal.

State, context, and compounding failures

Agentic systems by design are complex multi-component systems, consisting of a combination of large language models and other tools, which may include predictive AI models. They achieve their outcomes using a sequence of interactions with these tools, and through autonomous decision-making by the LLMs based on tool responses. Across these steps and interactions, agents maintain state and make decisions from accumulated context.

These factors make agentic evaluation significantly more complex than that of predictive AI systems. Predictive AI systems are evaluated simply based on the quality of their predictions, whether the predictions were accurate or not, and there is no preservation of state. Agentic AI systems, on the other hand, need to be judged on quality of reasoning, consistency of decision-making, and adherence to the assigned task. Additionally, there is always a risk of errors compounding across multiple interactions due to state preservation.

Governance, safety, and economics as first-class evaluation dimensions

Agentic evaluation also places far greater emphasis on governance, safety, and cost. Because agents can take actions, access sensitive data, and operate continuously, evaluation must track lineage, versioning, access control, and policy compliance across entire workflows.

Economic metrics, such as token usage, tool invocation cost, and compute consumption, become first-class signals, since inefficient reasoning paths translate directly into higher operational cost.

Agentic systems preserve state across interactions and use it as context in future interactions. For example, to be effective, a customer support agent needs access to previous conversations, account history, and ongoing issues. Losing context means starting over and degrading the user experience.

In short, while traditional evaluation asks, “Was the answer correct?”, agentic tool evaluation asks, “Did the system act correctly, safely, efficiently, and in alignment with its mandate while reaching the answer?”

Metrics and frameworks to evaluate and monitor agents

As enterprises adopt complex, multi-agent autonomous AI workflows, effective evaluation requires more than just accuracy. Metrics and frameworks must span functional behavior, operational efficiency, security, and economic cost.

Below, we define four key categories for agentic workflow evaluation necessary to establish visibility and control.

Functional metrics

Functional metrics measure whether the agentic workflow performs the task it was designed for and adheres to its expected behavior.

Core functional metrics:

- Agent goal accuracy: Evaluates the performance of the LLM in identifying and achieving the goals of the user. Can be evaluated with reference datasets where “correct” goals are known or without them.

- Agent task adherence: Assesses whether the agent’s final response satisfies the original user request.

- Tool call accuracy: Measures whether the agent correctly identifies and calls external tools or functions required to complete a task (e.g., calling a weather API when asked about weather).

- Response quality (correctness / faithfulness): Beyond success/failure, evaluates whether the output is accurate and corresponds to ground truth or external data sources. Metrics such as correctness and faithfulness assess output validity and reliability.

Why these matter: Functional metrics validate whether agentic workflows solve the problem they were built to solve and are often the first line of evaluation in playgrounds or test environments.

Operational metrics

Operational metrics quantify system efficiency, responsiveness, and the use of computational resources during execution.

Key operational metrics

- Time to first token (TTFT): Measures the delay between sending a prompt to the agent and receiving the first model response token. This is a common latency measure in generative AI systems and critical for user experience.

- Latency & throughput: Measures of total response time and tokens per second that indicate responsiveness at scale.

- Compute utilization: Tracks how much GPU, CPU, and memory the agent consumes during inference or execution. This helps identify bottlenecks and optimize infrastructure usage.

Why these matter: Operational metrics ensure that workflows not only work but do so efficiently and predictably, which is critical for SLA compliance and production readiness.

Security and safety metrics

Security metrics evaluate risks related to data exposure, prompt injection, PII leakage, hallucinations, scope violation, and control access within agentic environments.

Security controls & metrics

- Safety metrics: Real-time guards evaluating if agent outputs comply with safety and behavioral expectations, including detection of toxic or harmful language, identification and prevention of PII exposure, prompt-injection resistance, adherence to topic boundaries (stay-on-topic), and emotional tone classification, among other safety-focused controls.

- Access management and RBAC: Role-based access control (RBAC) ensures that only authorized users can view or modify workflows, datasets, or monitoring dashboards.

- Authentication compliance (OAuth, SSO): Enforcing secure authentication (OAuth 2.0, single sign-on) and logging access attempts supports audit trails and reduces unauthorized exposure.

Why these matter: Agents often process sensitive data and can interact with enterprise systems; security metrics are essential to prevent data leaks, abuse, or exploitation.

Economic & cost metrics

Economic metrics quantify the cost efficiency of workflows and help teams monitor, optimize, and budget agentic AI applications.

Common economic metrics

- Token usage: Tracking the number of prompt and completion tokens used per interaction helps understand billing impact since many providers charge per token.

- Overall cost and cost per task: Aggregates performance and cost metrics (e.g., cost per successful task) to estimate ROI and identify inefficiencies.

- Infrastructure costs (GPU/CPU Minutes): Measures compute cost per task or session, enabling teams to attribute workload costs and align budget forecasting.

Why these matter: Economic metrics are crucial for sustainable scale, cost governance, and showing business value beyond engineering KPIs.

Governance and compliance frameworks for agents

Governance and compliance measures ensure workflows are traceable, auditable, compliant with regulations, and governed by policy. Governance can be classified broadly into 3 categories.

Governance in the face of:

- Security Risks

- Operational Risks

- Regulatory Risks

Fundamentally, they have to be ingrained in the entire agent development and deployment process, as opposed to being bolted on afterwards.

Security risk governance framework

Ensuring security policy enforcement requires tracking and adhering to organizational policies across agentic systems.

Tasks include, but are not limited to, validation and enforcement of access management through authentication and authorization that mirror broader organizational access permissions for all tools and enterprise systems that agents access.

It also includes setting up and enforcing robust, auditable approval workflows to prevent unauthorized or unintended deployments and updates to agentic systems within the enterprise.

Operational risk governance framework

Ensuring operational risk governance requires tracking, evaluating, and enforcing adherence to organizational policies such as privacy requirements, prohibited outputs, fairness constraints, and red-flagging instances where policies are violated.

Beyond alerting, operational risk governance systems for agents should provide effective real-time moderation and intervention capabilities to address undesired inputs or outputs.

Finally, a critical component of operational risk governance involves lineage and versioning, including tracking versions of agents, tools, prompts, and datasets used in agentic workflows to create an auditable record of how decisions were made and to prevent behavioral drift across deployments.

Regulatory risk governance framework

Ensuring regulatory risk governance requires validating that all agentic systems comply with applicable industry-specific and government regulations, policies, and standards.

This includes, but is not limited to, testing for compliance with frameworks such as the EU AI Act, NIST RMF, and other country- or state-level guidelines to identify risks including bias, hallucinations, toxicity, prompt injection, and PII leakage.

Why governance metrics matter

Governance metrics reduce legal and reputational exposure while meeting growing regulatory and stakeholder expectations around trustworthiness and fairness. They provide enterprises with the confidence that agentic systems operate within defined security, operational, and regulatory boundaries, even as workflows evolve over time.

By making policy enforcement, access controls, lineage, and compliance continuously measurable, governance metrics enable organizations to scale agentic AI responsibly, maintain auditability, and respond quickly to emerging risks without slowing innovation.

Turning agentic AI into reliable, production-ready systems

Agentic AI introduces a fundamentally new operating model for enterprise automation, one where systems reason, plan, and act autonomously at machine speed.

This enhanced power comes with risk. Organizations that succeed with agentic AI are not the ones with the most impressive demos, but the ones that rigorously evaluate behavior, monitor systems continuously in production, and embed governance across the entire agent lifecycle. Reliability, safety, and scale are not accidental outcomes. They are engineered through disciplined metrics, observability, and control.

If you’re working to move agentic AI from proof of concept into production, adopting a full-lifecycle approach can help reduce risk and improve reliability. Platforms such as DataRobot support this by bringing together evaluation, monitoring, tracing, and governance to give teams better visibility and control over agentic workflows.

To see how these capabilities can be applied in practice, you can explore a free DataRobot demo.

FAQs

What makes agentic AI different from traditional machine learning systems in production?

Agentic AI systems are autonomous and stateful, meaning they make multi-step decisions, invoke tools, and adapt behavior over time rather than producing a single deterministic output. This introduces new risks around compounding errors, reasoning quality, and unintended actions that traditional ML evaluation and monitoring practices are not designed to handle.

Why is pre-deployment evaluation not enough for agentic AI?

Agent behavior can change once exposed to real users, live data, and evolving system conditions. Continuous monitoring, tracing, and periodic re-evaluation are required to detect behavioral drift, emerging failure modes, and performance degradation after deployment.

What dimensions should enterprises evaluate before putting agents into production?

Production readiness requires evaluation across functional correctness, operational performance, security and safety, governance and compliance, and economic viability. Focusing on accuracy alone ignores critical risks related to scale, cost, access control, and regulatory exposure.

How do monitoring and tracing work together in agentic systems?

Monitoring surfaces when something goes wrong by tracking metrics and thresholds, while tracing explains why it happened by exposing each reasoning step, tool call, and intermediate output. Together, they enable faster debugging, safer iteration, and more confident refinement of agentic workflows.

Why is governance a first-class requirement for agentic AI?

Agentic systems can take actions, access sensitive data, and operate continuously at machine speed. Governance ensures security, operational safety, and regulatory compliance are enforced consistently across the entire lifecycle, not added reactively after issues occur.

How should enterprises think about cost and ROI for agentic AI?

Economic evaluation must account for token usage, compute consumption, infrastructure costs, and cost per successful task. Inefficient reasoning paths or poorly governed agents can quickly erode ROI even if functional performance appears acceptable.

How do platforms help operationalize agentic AI at scale?

Enterprise platforms such as DataRobot bring evaluation, monitoring, tracing, and governance into a unified system, making it easier to operate agentic workflows reliably, securely, and cost-effectively in production environments.

Get Started Today.