Since launching our generative AI platform offering just a few short months ago, we’ve seen, heard, and experienced intense and accelerated AI innovation, with remarkable breakthroughs. As a long-time machine learning advocate and industry leader, I’ve witnessed many such breakthroughs, perfectly represented by the steady excitement around ChatGPT, released almost a year ago.

And just as ecosystems thrive with biological diversity, the AI ecosystem benefits from multiple providers. Interoperability and system flexibility have always been key to mitigating risk – so that organizations can adapt and continue to deliver value. But the unprecedented speed of evolution with generative AI has made optionality a critical capability.

The market is changing so rapidly that there are no sure bets – today or in the near future. This is a statement that we’ve heard echoed by our customers and one of the core philosophies that underpinned many of the innovative new generative AI capabilities announced in our recent Launch.

Relying too heavily on any one AI provider could pose a risk if that provider's rate of innovation is disrupted. Already, there are more than 180 open source LLMs. The pace of change is much faster than teams can absorb.

DataRobot’s philosophy has been that organizations need to build flexibility into their generative AI strategy based on performance, robustness, costs, and adequacy for the specific LLM task being deployed.

As with all technologies, many LLMs come with trade-offs or are tailored to specific tasks. Some LLMs may excel at particular natural language operations like text summarization, provide more diverse text generation, or simply be cheaper to operate. As a result, many LLMs can be best-in-class in different but useful ways. A tech stack that provides the flexibility to select or blend these offerings helps organizations maximize AI value in a cost-efficient manner.
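To make the cost-performance idea concrete, here is a minimal sketch of one way to pick among models: choose the cheapest candidate that still clears a quality bar for the task. The model names, quality scores, and prices are made up for illustration, and `cheapest_meeting_bar` is a hypothetical helper, not part of any DataRobot API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    name: str                  # hypothetical model label
    quality: float             # task score in [0, 1] from your own evaluation
    cost_per_1k_tokens: float  # illustrative figure, not real pricing


def cheapest_meeting_bar(
    candidates: Iterable[Candidate], min_quality: float
) -> Optional[Candidate]:
    """Return the lowest-cost candidate whose quality meets the bar, or None."""
    eligible = [c for c in candidates if c.quality >= min_quality]
    return min(eligible, key=lambda c: c.cost_per_1k_tokens, default=None)


# Illustrative numbers only.
candidates = [
    Candidate("model-a", quality=0.91, cost_per_1k_tokens=0.030),
    Candidate("model-b", quality=0.86, cost_per_1k_tokens=0.004),
    Candidate("model-c", quality=0.78, cost_per_1k_tokens=0.001),
]

choice = cheapest_meeting_bar(candidates, min_quality=0.85)
print(choice.name if choice else "no model meets the bar")
```

Raising or lowering `min_quality` per task is what turns a single "best model" decision into a per-use-case trade-off.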

DataRobot operates as an open, unified intelligence layer that lets organizations compare and select the generative AI components that are right for them. This interoperability leads to better generative AI outputs, improves operational continuity, and decreases single-provider dependencies.

With such a strategy, operational processes remain unaffected if, say, a provider is experiencing internal disruption. Plus, organizations can manage costs more efficiently by making cost-performance trade-offs around their LLMs.
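As a rough illustration of how a multi-provider setup keeps running through an outage, the sketch below tries providers in order and returns the first successful completion. It assumes each provider can be wrapped as a plain function from prompt to text. `primary` and `secondary` are stand-ins that simulate an outage and a healthy backup, and do not call any real service.

```python
from typing import Callable, List, Sequence, Tuple

# A provider is modeled as a function from prompt to completion text.
Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider raised an error."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, completion) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # in real code, catch the client's specific errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in providers for illustration only.
def primary(prompt: str) -> str:
    raise ConnectionError("simulated outage")


def secondary(prompt: str) -> str:
    return f"[secondary] {prompt}"


used, text = complete_with_fallback(
    "Summarize Q3 results",
    [("primary", primary), ("secondary", secondary)],
)
print(used, "->", text)
```

The calling code never needs to know which provider answered, which is the property that reduces single-provider dependency.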

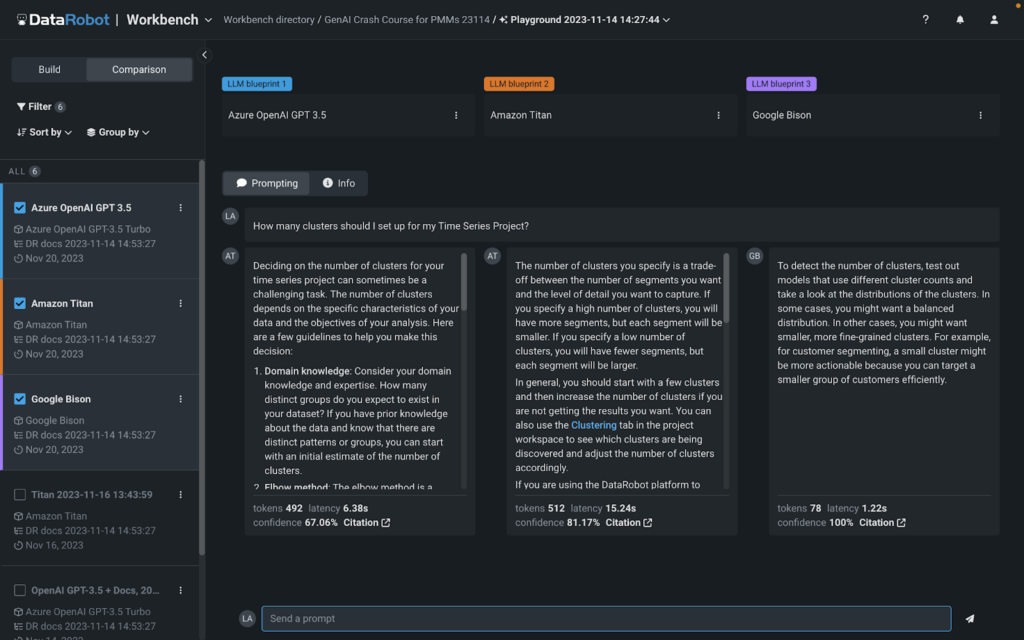

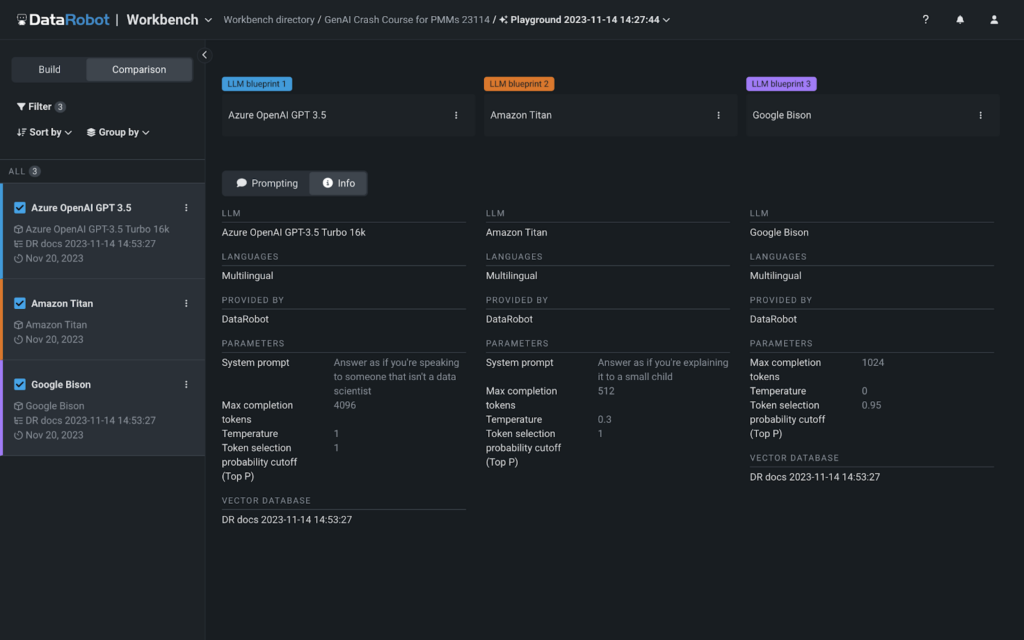

During our Launch, we announced our new multi-provider LLM Playground. The first-of-its-kind visual interface provides you with built-in access to Google Cloud Vertex AI, Azure OpenAI, and Amazon Bedrock models to easily compare and experiment with different generative AI ‘recipes.’ You can use any of the built-in LLMs in our playground or bring your own. Access to these LLMs is available out-of-the-box during experimentation, so there are no additional steps needed to start building GenAI solutions in DataRobot.

With our new LLM Playground, we’ve made it easy to try, test, and compare different GenAI “recipes” in terms of style/tone, cost, and relevance, and to evaluate any combination of foundational model, vector database, chunking strategy, and prompting strategy. You can do this whether you prefer to build with the platform UI or in a notebook. The LLM Playground makes it easy to flip back and forth between code and a side-by-side view of your experiments.
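For readers working in a notebook, one way to picture a "recipe" is as one combination of the four choices named above. The sketch below simply enumerates every combination so each can be evaluated and compared. The option labels are hypothetical placeholders, and `toy_score` is a deliberately trivial stand-in for a real evaluation. This is not the Playground's API.

```python
from itertools import product
from typing import Callable, Dict, List

# Hypothetical option labels; substitute whatever you are comparing.
OPTIONS: Dict[str, List[str]] = {
    "llm": ["model-a", "model-b"],
    "vector_db": ["vdb-1", "vdb-2"],
    "chunking": ["fixed-256", "by-sentence"],
    "prompting": ["concise", "step-by-step"],
}


def build_recipes(options: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return one dict per combination of the supplied options."""
    keys = list(options)
    return [dict(zip(keys, combo)) for combo in product(*options.values())]


def rank(
    recipes: List[Dict[str, str]], score: Callable[[Dict[str, str]], float]
) -> List[Dict[str, str]]:
    """Sort recipes from highest to lowest score; the scorer is supplied by the caller."""
    return sorted(recipes, key=score, reverse=True)


def toy_score(recipe: Dict[str, str]) -> float:
    # Placeholder: a real scorer would run the recipe and measure relevance, cost, and so on.
    return 1.0 if recipe["llm"] == "model-b" else 0.0


recipes = build_recipes(OPTIONS)
print(len(recipes))  # 2 * 2 * 2 * 2 = 16 combinations
print(rank(recipes, toy_score)[0]["llm"])
```

Even this small grid yields 16 candidates, which is why a side-by-side comparison tool helps once the option lists grow.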

With DataRobot, you can also hot-swap underlying components (like LLMs) without breaking production, if your organization’s needs change or the market evolves. This not only lets you calibrate your generative AI solutions to your exact requirements, but also ensures you maintain technical autonomy with all of the best of breed components right at your fingertips.

You can see below exactly how easy it is to compare different generative AI ‘recipes’ with our LLM Playground.

Once you’ve selected the right ‘recipe’ for you, you can quickly and easily move it, along with its vector database and prompting strategy, into production. Once in production, you get full end-to-end generative AI lineage, monitoring, and reporting.

With DataRobot’s generative AI offering, organizations can easily choose the right tools for the job and safely extend their internal data to LLMs, while also measuring outputs for toxicity, truthfulness, and cost, among other KPIs. We like to say, “We’re not building LLMs, we’re solving the confidence problem for generative AI.”

The generative AI ecosystem is complex – and changing every day. At DataRobot, we ensure that you have a flexible and resilient approach – think of it as an insurance policy and a safeguard against stagnation in an ever-evolving technological landscape, supporting both data scientists’ agility and CIOs’ peace of mind. The reality is that an organization’s strategy shouldn’t be constrained by a single provider’s world view, rate of innovation, or internal turmoil. It’s about building the resilience and speed to evolve your organization’s generative AI strategy so that you can adapt as the market evolves – which it can quickly do!

You can learn more about how else we’re solving the ‘confidence problem’ by watching our Launch event on-demand.
