Maximizing Existing Snowflake Investments

Some businesses have invested heavily in tools to stay innovative and competitive. That can be an excellent strategy for a forward-looking company, but it pays off only if you get full value from the investment. According to Flexera¹, 92% of enterprises have a multi-cloud strategy, and 80% have a hybrid cloud strategy.

Integrating different systems, data sources, and technologies within such an ecosystem can be difficult and time-consuming, leading to inefficiencies, data silos, broken machine learning models, and unrealized ROI.

The DataRobot AI Platform and the Snowflake Data Cloud provide an interoperable, scalable AI/ML solution and unique services that integrate with diverse ecosystems so that data-driven enterprises can focus on delivering trusted and impactful results.

Extending Snowflake Integration: New Capabilities and Improvements

To help customers get more from their Snowflake investment, DataRobot is extending its Snowflake integration so teams can iterate quickly, improve models, and complete the ML lifecycle without repeated configuration.

This includes:

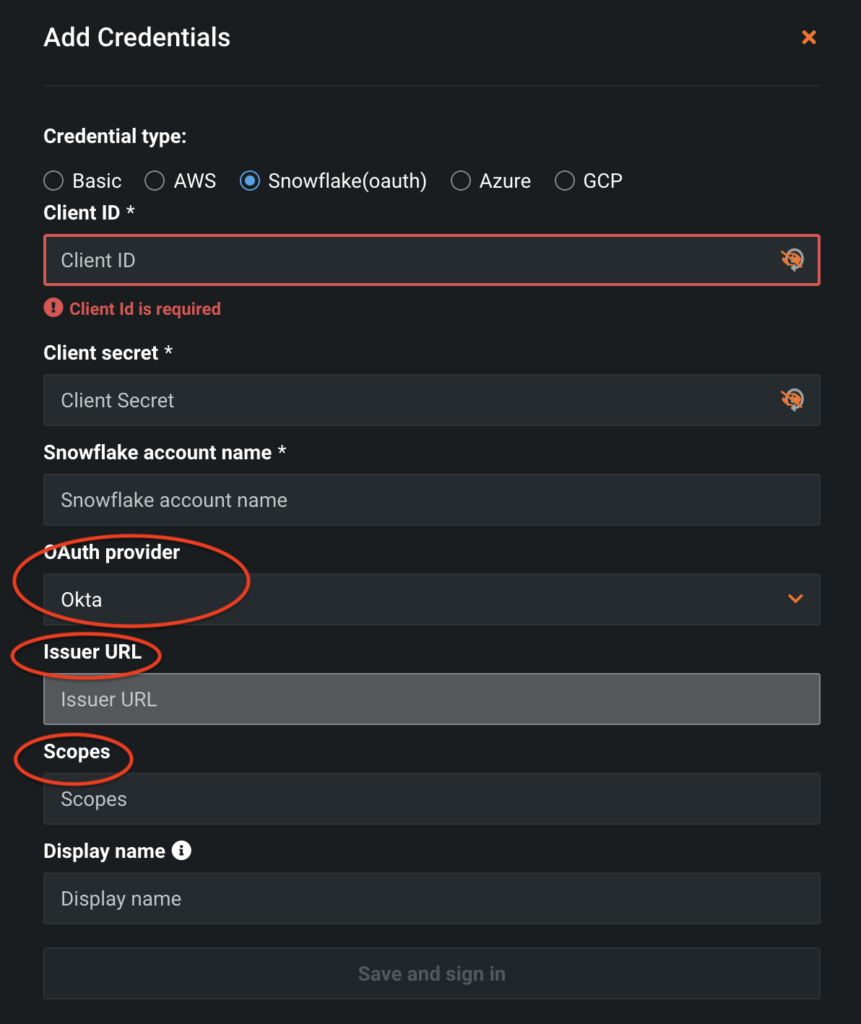

- Supporting Snowflake External OAuth configuration

- Leveraging Snowpark for exploratory data analysis in DataRobot-hosted Notebooks, and for model scoring

- A seamless experience for deploying DataRobot models to Snowflake and monitoring them there

- Monitoring service health, drift, and accuracy of DataRobot models in Snowflake

“Organizations are looking for mature data science platforms that can scale to the size of their entire business. With the latest capabilities launched by DataRobot, customers can now guarantee the security and governance of their data used for ML, while simultaneously increasing the accessibility, performance, and efficiency of data preparation, model training, and model observability by their users,” said Miles Adkins, Data Cloud Principal, AI/ML at Snowflake. “By bringing the unmatched AutoML capabilities of DataRobot to the data in Snowflake’s Data Cloud, customers get a seamless and comprehensive enterprise-grade data science platform.”

Complete the Machine Learning Lifecycle, Without Repeated Configuration

Connecting to Snowflake

Connect to Snowflake through external identity providers using Snowflake External OAuth, without giving DataRobot a username and password. Reduce your security perimeter by reusing your existing Snowflake security policies with DataRobot.

Learn more about Snowflake External OAuth.
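As a minimal sketch of what token-based access looks like from Python, the hypothetical helper below assembles connection parameters for the Snowflake Python connector's OAuth mode (`authenticator="oauth"` plus a `token`), so no password appears in the configuration. It assumes the access token has already been obtained from your external identity provider; the account and warehouse names are placeholders, and this is not how DataRobot itself stores or exchanges credentials.

```python
from typing import Dict, Optional


def oauth_connection_params(
    account: str,
    access_token: str,
    user: Optional[str] = None,
    warehouse: Optional[str] = None,
    database: Optional[str] = None,
    schema: Optional[str] = None,
) -> Dict[str, str]:
    """Build Snowflake connection parameters that authenticate with an
    External OAuth access token instead of a username and password.

    Hypothetical helper: the token is assumed to come from your identity
    provider's OAuth flow; this function does not request or refresh it.
    """
    if not access_token:
        raise ValueError("an OAuth access token is required")
    params: Dict[str, str] = {
        "account": account,
        "authenticator": "oauth",  # tells the connector to use the token below
        "token": access_token,     # short-lived credential; no password key is set
    }
    # Optional context; keys left out fall back to the user's Snowflake defaults.
    optional = {"user": user, "warehouse": warehouse,
                "database": database, "schema": schema}
    params.update({k: v for k, v in optional.items() if v is not None})
    return params
```

With `snowflake-connector-python` installed, the resulting dictionary could be passed to `snowflake.connector.connect(**params)`, or to Snowpark via `Session.builder.configs(params).create()`.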

Exploratory Data Analysis

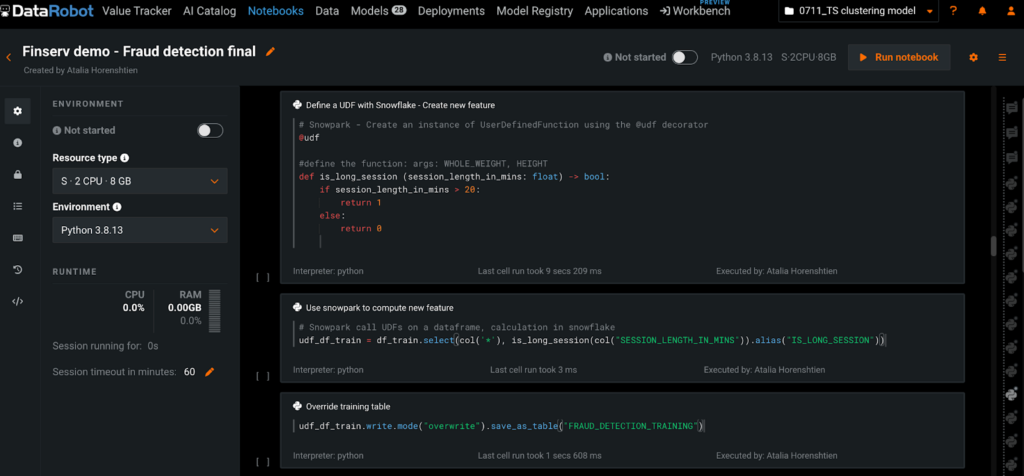

After we connect to Snowflake, we can start our ML experiment.

We recently announced DataRobot’s new Hosted Notebooks capability.

For our joint solution with Snowflake, this means code-first users can work in DataRobot's hosted Notebooks while Snowpark processes the data directly in the data warehouse. Users write familiar Python syntax that is pushed down to Snowflake and runs in a highly secure, elastic processing engine. They also get a hosted experience with code snippets, versioning, and simple environment management for rapid AI experimentation.

Learn more about DataRobot hosted notebooks.
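The pushdown idea behind Snowpark is that DataFrame calls in Python are recorded lazily and translated into SQL that Snowflake executes, so the rows never leave the warehouse. The toy `LazyFrame` class below is a hypothetical, standard-library illustration of that pattern; it is not the Snowpark API. In Snowpark itself a comparable chain would start from something like `session.table("LOANS").filter(col("AMOUNT") > 0).group_by("STATE")`, and the table and column names here are placeholders.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class LazyFrame:
    """Toy pushdown DataFrame: each call returns a new frame that records an
    operation, and nothing runs until to_sql() renders one query."""
    table: str
    filters: Tuple[str, ...] = ()
    group_keys: Tuple[str, ...] = ()
    aggregates: Tuple[str, ...] = ()

    def filter(self, predicate: str) -> "LazyFrame":
        return replace(self, filters=self.filters + (predicate,))

    def group_by(self, *keys: str) -> "LazyFrame":
        return replace(self, group_keys=self.group_keys + keys)

    def agg(self, *exprs: str) -> "LazyFrame":
        return replace(self, aggregates=self.aggregates + exprs)

    def to_sql(self) -> str:
        # Select grouping keys and aggregates, or every column if neither is set.
        select_list = list(self.group_keys) + list(self.aggregates) or ["*"]
        sql = f"SELECT {', '.join(select_list)} FROM {self.table}"
        if self.filters:
            sql += " WHERE " + " AND ".join(f"({p})" for p in self.filters)
        if self.group_keys:
            sql += " GROUP BY " + ", ".join(self.group_keys)
        return sql


query = (LazyFrame("LOANS")
         .filter("AMOUNT > 0")
         .group_by("STATE")
         .agg("AVG(AMOUNT) AS AVG_AMOUNT", "COUNT(*) AS N"))
print(query.to_sql())
```

Because each method returns a new immutable frame, intermediate frames can be reused safely, and the only thing that would need to cross the network is the final SQL string.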

Model Training

Once the data is prepared, users can develop models with DataRobot AutoML through the GUI, hosted Notebooks, or both, whichever they prefer.

When the training process is complete, DataRobot will recommend the best-performing model for production based on the selected metric and provide an explanation.
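Recommending a model by metric depends on the metric's direction: higher is better for AUC, lower is better for LogLoss or RMSE. The sketch below shows only that core selection step over a hypothetical leaderboard; the `ModelResult` type and metric table are assumptions, and DataRobot's actual recommendation logic is not described in this post, so treat this as an illustration only.

```python
from dataclasses import dataclass
from typing import Dict, List

# Optimization direction for a few common metrics (illustrative subset).
HIGHER_IS_BETTER: Dict[str, bool] = {
    "AUC": True,
    "Accuracy": True,
    "LogLoss": False,
    "RMSE": False,
}


@dataclass
class ModelResult:
    name: str
    scores: Dict[str, float]  # validation score per metric name


def recommend_model(leaderboard: List[ModelResult], metric: str) -> ModelResult:
    """Return the model with the best validation score for `metric`."""
    if metric not in HIGHER_IS_BETTER:
        raise ValueError(f"unknown metric: {metric}")
    # Ignore models that did not report the selected metric.
    candidates = [m for m in leaderboard if metric in m.scores]
    if not candidates:
        raise ValueError(f"no model reports {metric}")
    pick = max if HIGHER_IS_BETTER[metric] else min
    return pick(candidates, key=lambda m: m.scores[metric])
```

Keeping the direction in a lookup table means switching the selected metric changes the recommendation without any other code changes.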

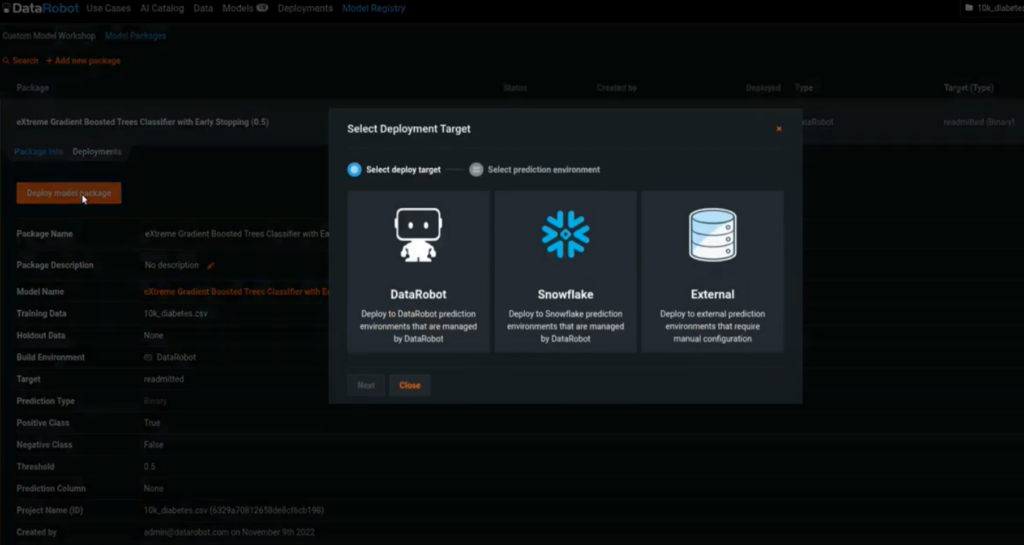

Model Deployment

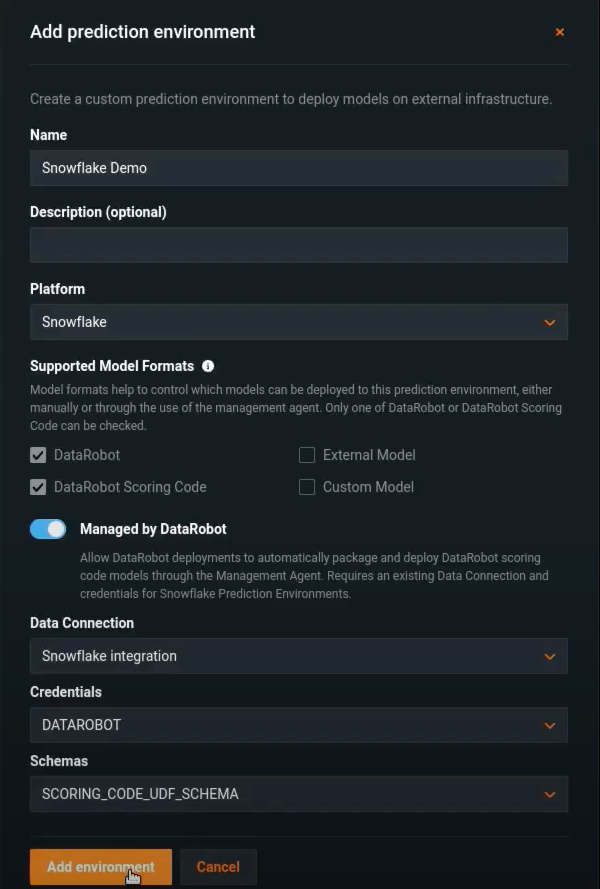

Customers need the flexibility to deploy models into different environments. Deploying to Snowflake reduces operational complexity, data-transfer latency, and the associated costs, while improving efficiency and providing near-limitless scale.

With a new Snowflake prediction environment configured in DataRobot, DataRobot automatically manages and controls that environment, including model deployment and replacement.

When you deploy a DataRobot model to Snowflake, this integration significantly improves the user experience, reduces time and effort, and reduces the risk of user error.

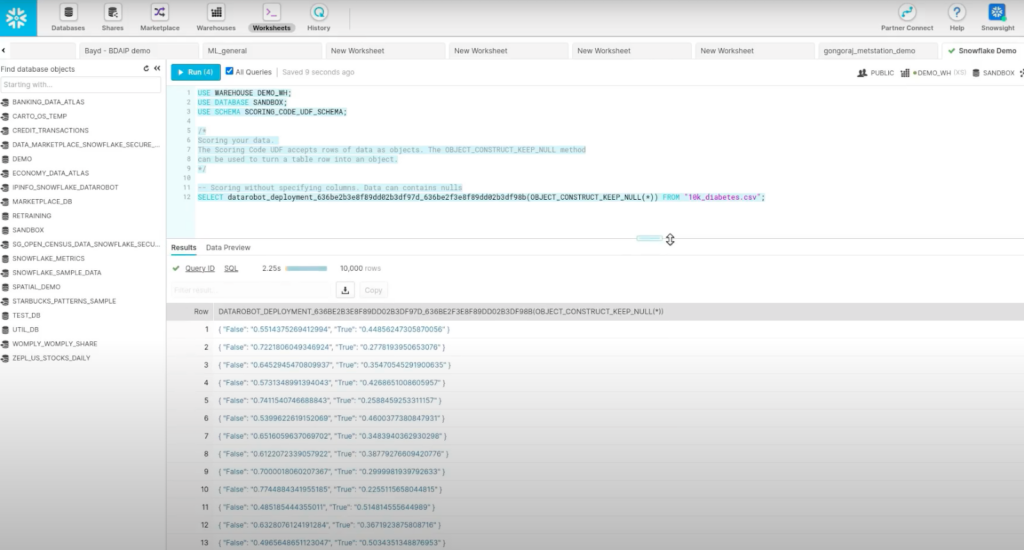

The automated deployment pushes trained models to Snowflake as Java UDFs, so inference runs at scale inside Snowflake and Snowpark scores the data with speed and elasticity while the data stays in place.

Model Monitoring

Internal and external factors affect models’ performance.

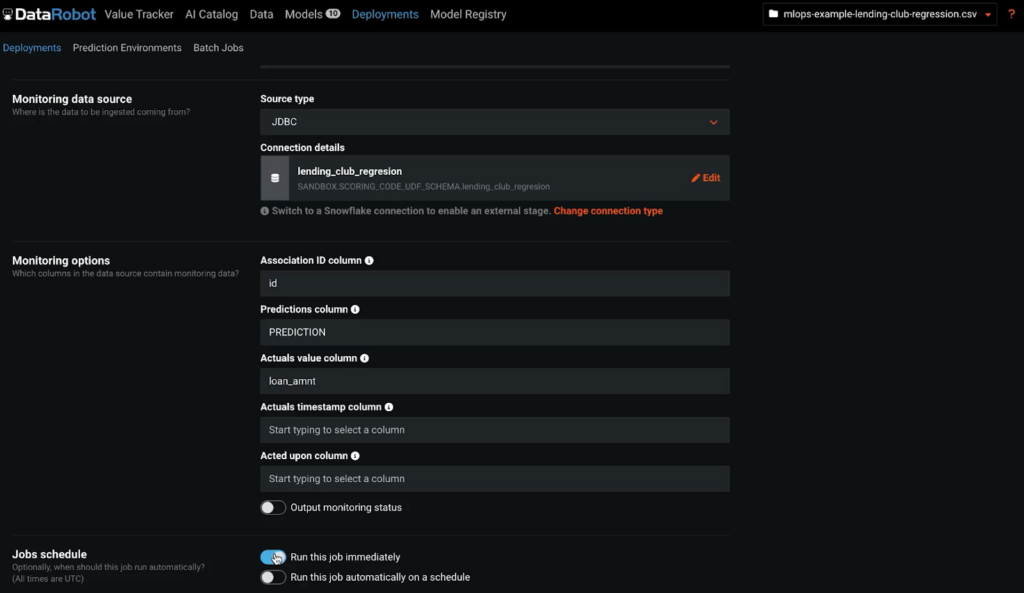

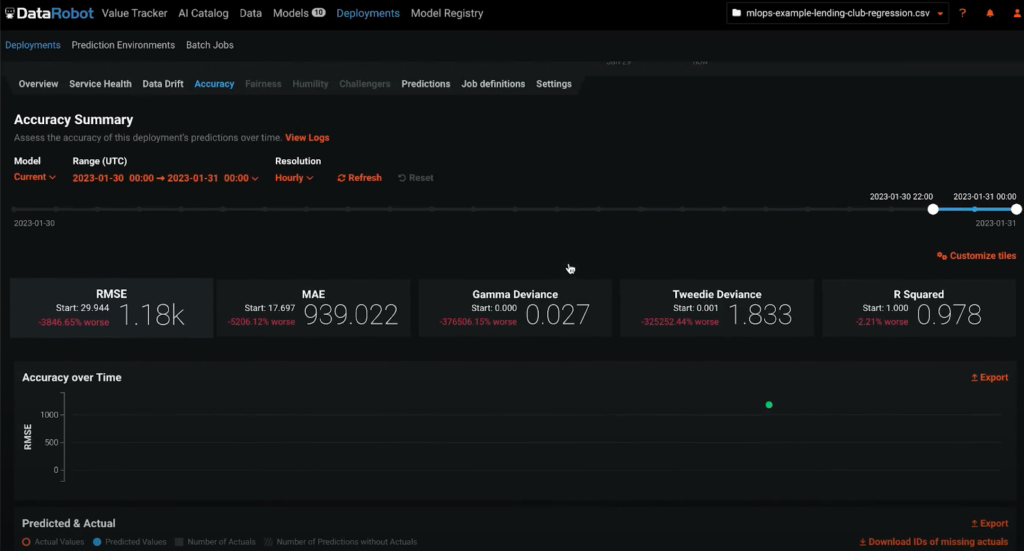

The new monitoring job capability, which runs seamlessly from the DataRobot GUI, helps customers make business decisions based on predictions and changes in actual data, and govern their models at scale.
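Accuracy monitoring needs predictions matched with the actual outcomes that arrive later. A minimal sketch of that matching, assuming each prediction carries a hypothetical row ID shared with the actuals and a period label such as a month, computes accuracy per period and skips rows whose outcome is not yet known. This is a generic classification example, not the logic of DataRobot's monitoring job.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_period(
    predictions: Iterable[Tuple[str, str, int]],
    actuals: Dict[str, int],
) -> Dict[str, float]:
    """predictions: (row_id, period, predicted_label) tuples.
    actuals: row_id -> observed label, possibly arriving later.
    Returns accuracy per period, ordered by period label."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for row_id, period, predicted in predictions:
        if row_id not in actuals:
            continue  # outcome not known yet, so this row cannot be scored
        totals[period] += 1
        hits[period] += int(predicted == actuals[row_id])
    return {p: hits[p] / totals[p] for p in sorted(totals)}
```

Rerunning the same calculation as more actuals arrive fills in earlier periods, which is why accuracy trends are usually plotted over time rather than reported once.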

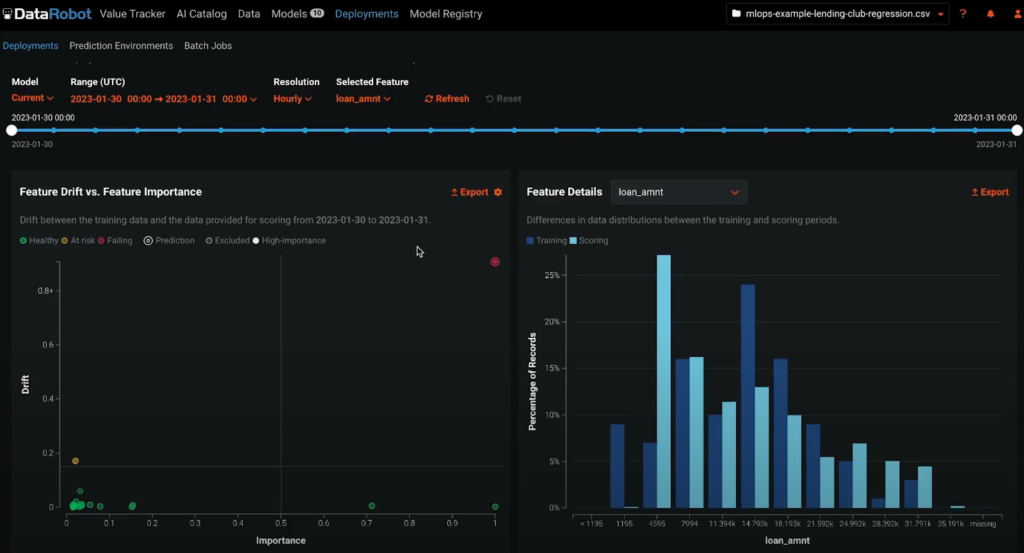

Over time, models degrade and require replacement or retraining. The DataRobot AI Platform dashboards show the model's service health, data drift, and accuracy over time, and can help establish model accountability.
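Data drift is often quantified with the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in recent scoring data: PSI = Σ (aᵢ - eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the expected and actual proportions in bin i. The sketch below is a plain-Python version with equal-width bins taken from the training range; this post does not say how DataRobot's dashboards compute drift, and a commonly cited rule of thumb treats values above about 0.25 as a significant shift.

```python
import math
from typing import List, Sequence


def _bin_proportions(values: Sequence[float], lo: float, width: float,
                     bins: int, eps: float) -> List[float]:
    """Share of values per equal-width bin; out-of-range values go to edge bins."""
    counts = [0] * bins
    for v in values:
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    # Floor empty bins at eps so the log term in psi() stays finite.
    return [max(c / len(values), eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index of `actual` relative to `expected`."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant training feature
    e = _bin_proportions(expected, lo, width, bins, eps)
    a = _bin_proportions(actual, lo, width, bins, eps)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Each term is non-negative, because (aᵢ - eᵢ) and ln(aᵢ / eᵢ) always share a sign, so PSI is zero only when the binned proportions match.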

Learn more about the new monitoring job and automated deployment.

There’s more coming

If you are already a customer of Snowflake and DataRobot, reach out to your account team to get up to speed on these new features.

Getting Started with DataRobot AI and Snowflake, the Data Cloud

Together, DataRobot and Snowflake offer enterprises an end-to-end, enterprise-grade AI experience and expertise, reducing complexity and productionizing ML models at scale to unlock business value. Learn more at DataRobot.com/Snowflake.

¹ Source: Flexera 2021 State of the Cloud Report
