The ability to test models for algorithmic bias is an important part of ensuring that models are fair and balanced. Many platforms, including DataRobot’s Bias and Fairness suite, allow you to do this. However, correcting the biased behavior behind the models is more challenging. We’re excited to share that we’ve now extended our Bias and Fairness capabilities to include automated Bias Mitigation. With Bias Mitigation, you can make your models behave more fairly towards a feature of your choosing, which we’ll review in this post.

Mitigation Workflows

DataRobot offers two workflows for mitigating bias:

- Mitigate individual models of your choosing after starting Autopilot.

- Mitigate the top three models found on the leaderboard by configuring mitigation to run automatically as part of Autopilot.

We’ll start with the individual model workflow and then move on to the automatic workflow. The two workflows share many of the same concepts.

The Use Case

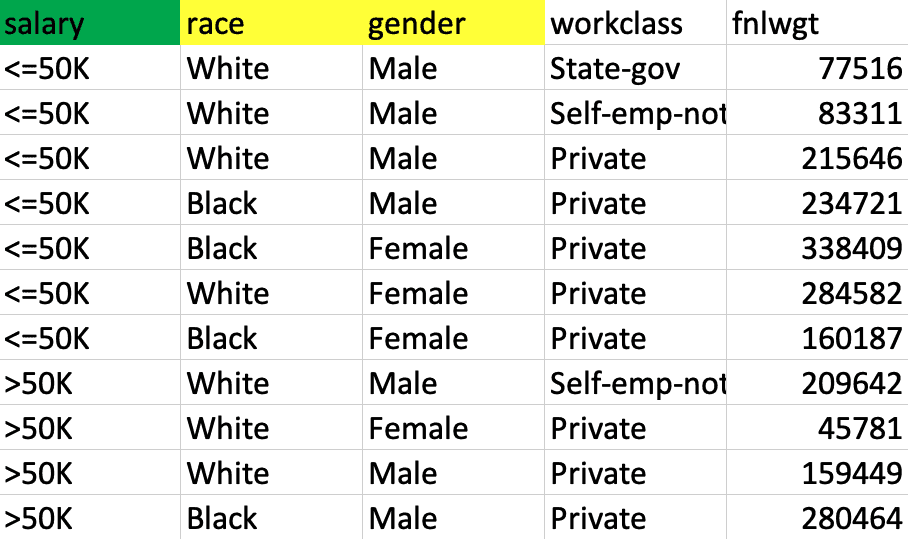

Our use case predicts income levels for individuals. The dataset includes features for race and gender, and we want to make sure the model is not unfairly predicting low income levels for any class within those features. In the example below, we see a portion of our training data, including the target (green) and the features we’re examining for bias (yellow).

Checking for Bias

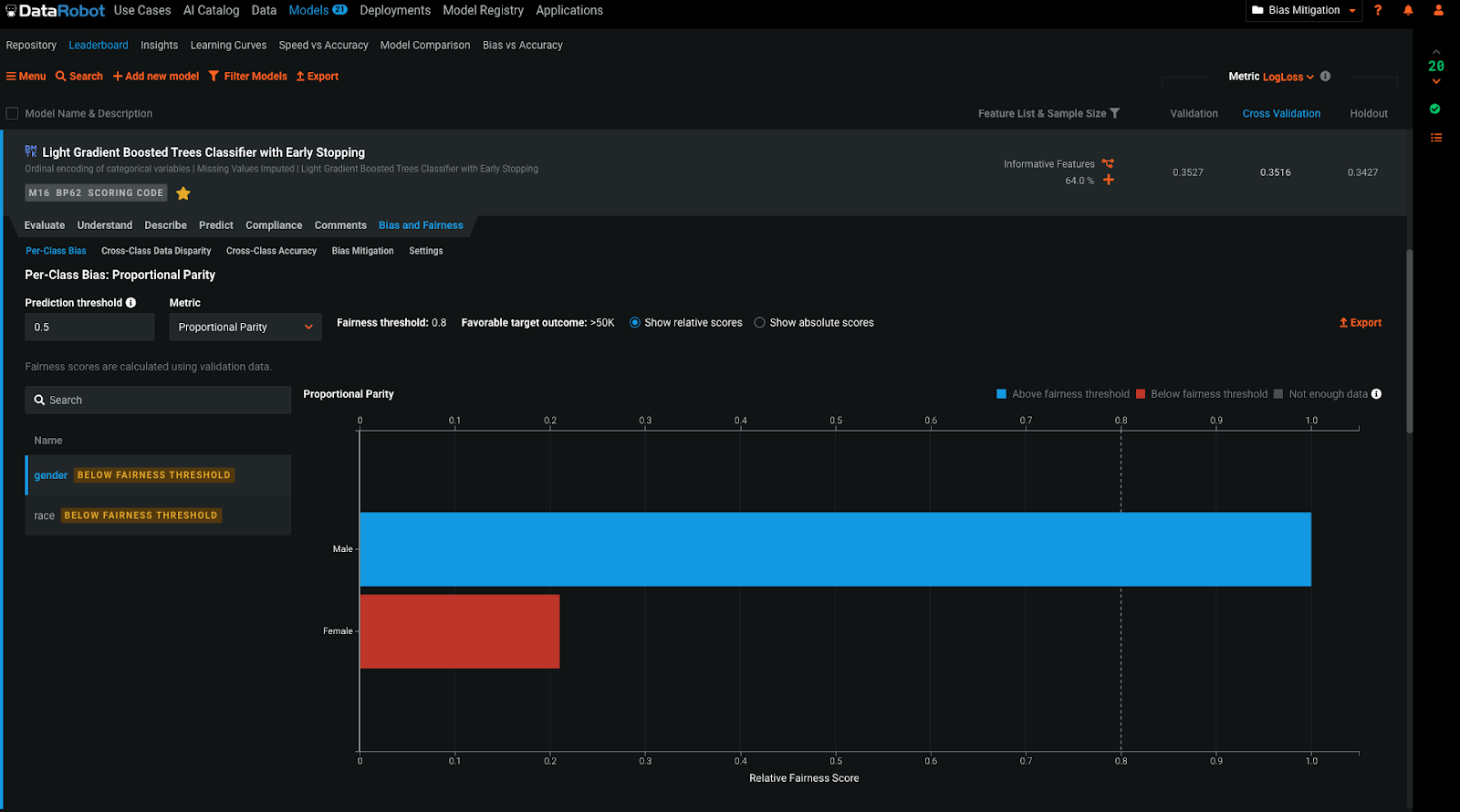

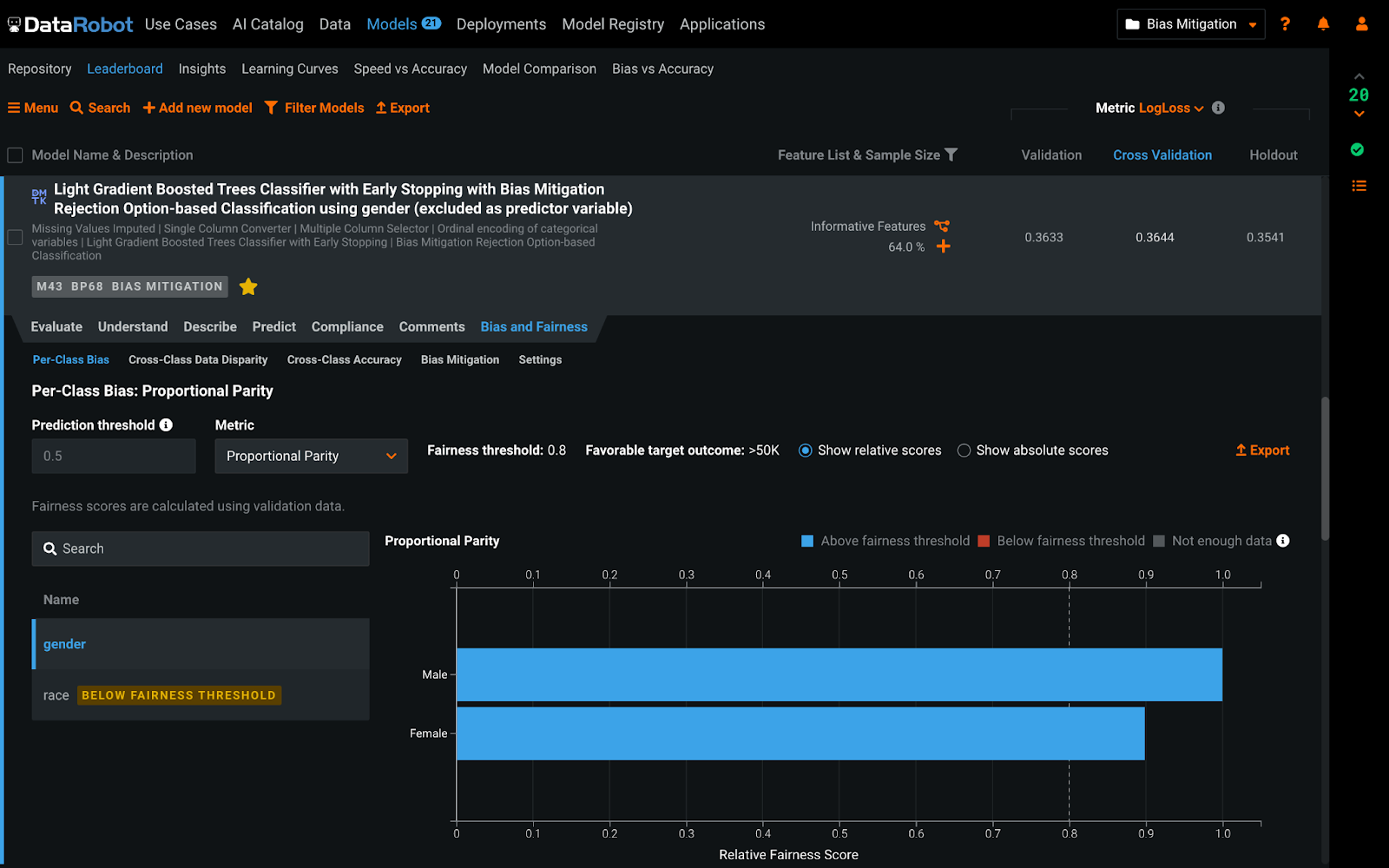

Using DataRobot’s AutoML platform, we’ve developed a number of models and are now ready to evaluate them for bias. We’ve previously configured the Bias and Fairness tool, which is required to enable Bias Mitigation. Let’s take a look at this Light Gradient Boosted Trees Classifier.

The Per-Class Bias insight tells us if classes in a feature are receiving different outcomes. Right now, we’re looking at the feature gender. Looking at the bar chart, we see that the classes are “male” and “female.” The chart compares the rate at which women receive the positive outcome (having an income over $50K) to the rate at which men do. Women are receiving the positive outcome at a ratio of 0.21 to that of men. In other words, the share of women predicted to have an income of over $50K is only about one-fifth of the share of men. Clearly, this model is biased, and we need to apply corrective measures to it if we wish to deploy it in the future.
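To make the ratio concrete, here is a minimal sketch of how a per-class ratio like this could be computed by hand. It is not DataRobot’s implementation: the function name `favorable_rate_ratios` and the toy data are our own assumptions, and we assume the score is each class’s positive-outcome rate divided by the rate of the most favored class.

```python
from collections import defaultdict


def favorable_rate_ratios(groups, predictions, favorable=1):
    """Return each class's favorable-outcome rate relative to the most favored class."""
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        if pred == favorable:
            favorable_counts[group] += 1

    # Share of each class that receives the favorable prediction.
    rates = {g: favorable_counts[g] / totals[g] for g in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no class receives the favorable outcome")
    return {g: rate / best for g, rate in rates.items()}


# Toy data (illustrative only): 5 of 10 men and 1 of 10 women
# are predicted to earn over $50K.
groups = ["male"] * 10 + ["female"] * 10
predictions = [1] * 5 + [0] * 5 + [1] * 1 + [0] * 9

ratios = favorable_rate_ratios(groups, predictions)
print({g: round(r, 2) for g, r in ratios.items()})
```

In this toy example, women receive the positive outcome at a rate of 0.1 and men at a rate of 0.5, so the ratio for women is 0.2.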

Mitigating Bias

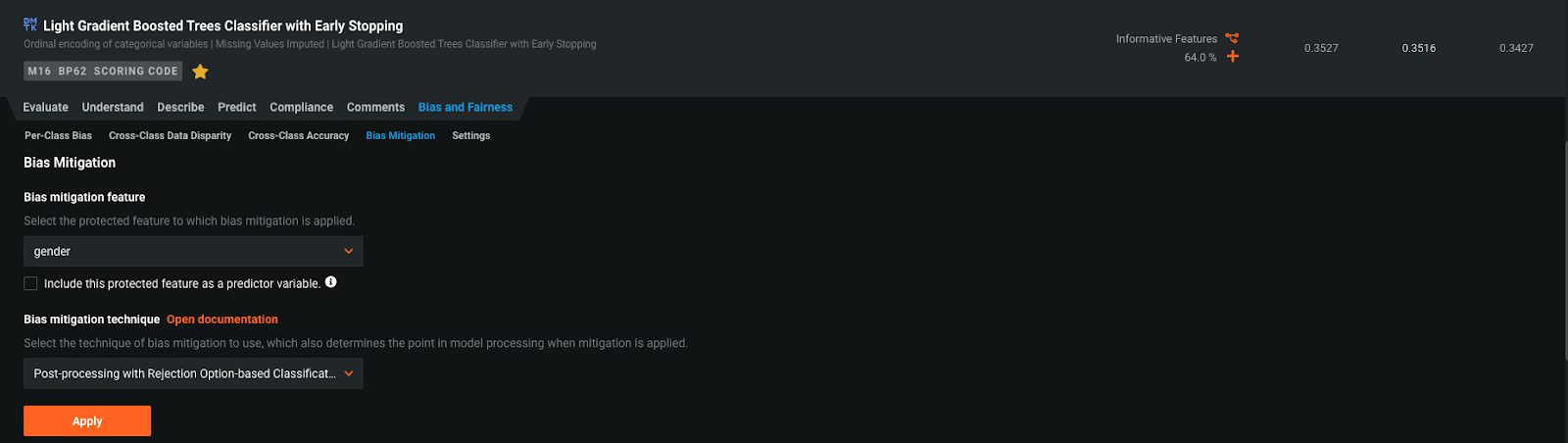

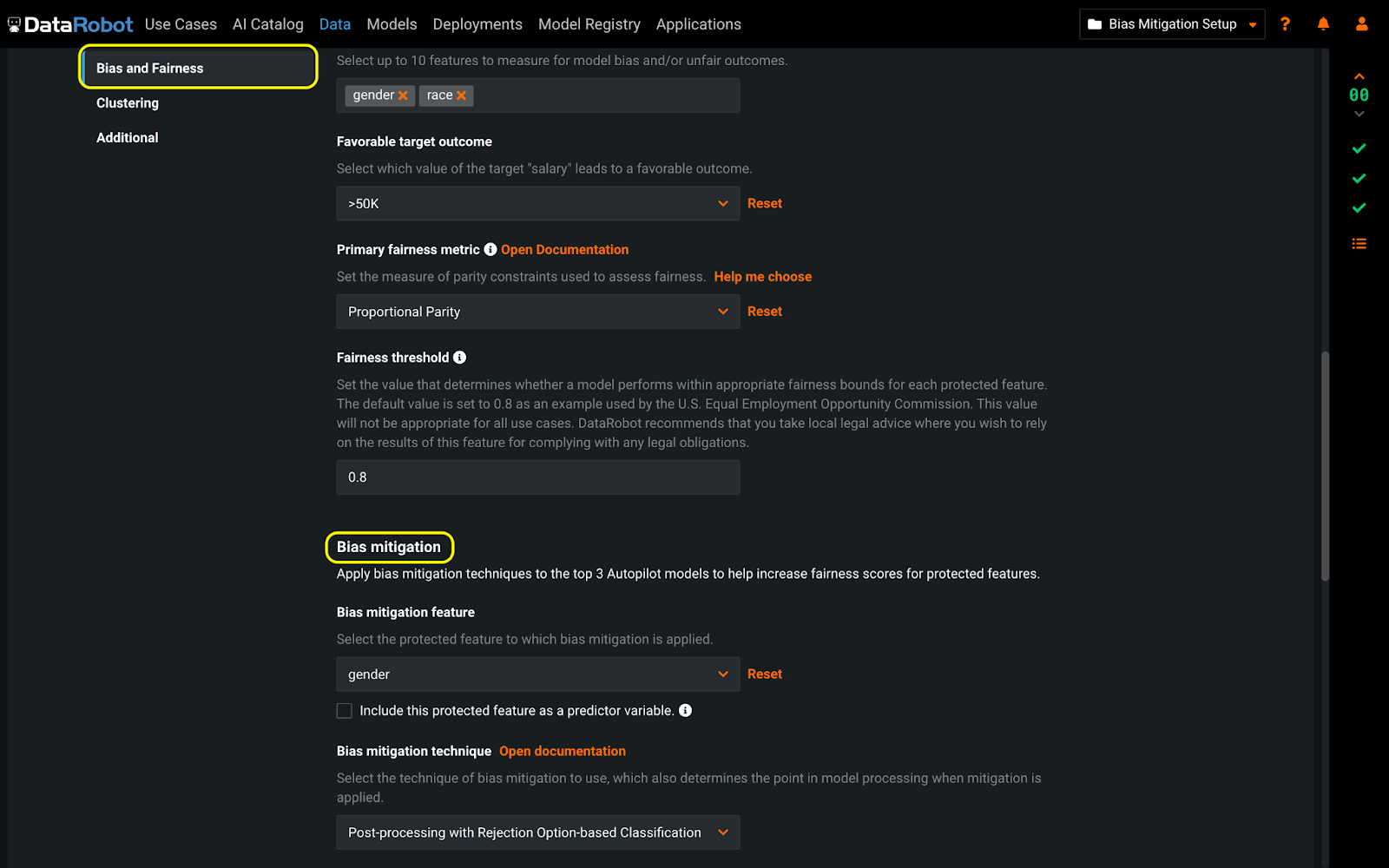

Using Bias Mitigation, we can make this model behave more fairly towards women. Let’s navigate to the Bias Mitigation section of the Bias and Fairness tool to configure Bias Mitigation. First, we need to choose which feature we’d like the model to behave more fairly towards. In our case, that will be the feature gender.

Next, we need to choose which mitigation technique to use. DataRobot offers two options: pre-processing reweighing and post-processing rejection option. Depending on the metric you are using to measure bias, these techniques will have different effects. Consulting the Bias and Fairness documentation will help guide you on the right mitigation technique to use. We’ll use post-processing rejection option for this use case.
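For intuition, the sketch below shows the general idea behind each technique as commonly described in the fairness literature. It is not DataRobot’s implementation. The function names, the `margin` parameter, and the toy data are assumptions made for illustration. Reweighing assigns each training row a weight so that group membership and the label look statistically independent. Rejection option leaves the model untouched and instead flips predictions that fall close to the decision threshold in favor of the disadvantaged class.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Pre-processing: weight = P(group) * P(label) / P(group, label) per row."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def reject_option_predict(scores, groups, unprivileged, margin=0.1, threshold=0.5):
    """Post-processing: inside the uncertain band around the threshold,
    give the unprivileged class the favorable label and everyone else
    the unfavorable one; outside the band, threshold as usual."""
    predictions = []
    for score, group in zip(scores, groups):
        if abs(score - threshold) <= margin:
            predictions.append(1 if group == unprivileged else 0)
        else:
            predictions.append(1 if score > threshold else 0)
    return predictions


# Toy training data (illustrative only).
train_groups = ["male"] * 6 + ["female"] * 4
train_labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]
weights = reweighing_weights(train_groups, train_labels)

# Toy model scores (illustrative only).
scores = [0.9, 0.55, 0.45, 0.2]
score_groups = ["male", "male", "female", "female"]
adjusted = reject_option_predict(scores, score_groups, unprivileged="female")
```

In the toy training data, the single woman with a positive label gets the largest weight (2.0), because that combination is under-represented relative to what independence would predict. In the scoring example, only the two predictions near 0.5 are changed.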

Finally, we can choose whether to include the feature we are mitigating on as a training input to the model. Leaving it out means you can mitigate a model without it learning from the mitigation feature, which is useful in scenarios where you are prohibited from using certain features in a model. We will not include gender in this example.

Selecting Apply will cause a mitigated version of our Light Gradient Boosted Trees Classifier to start running.

Evaluating the Mitigated Model

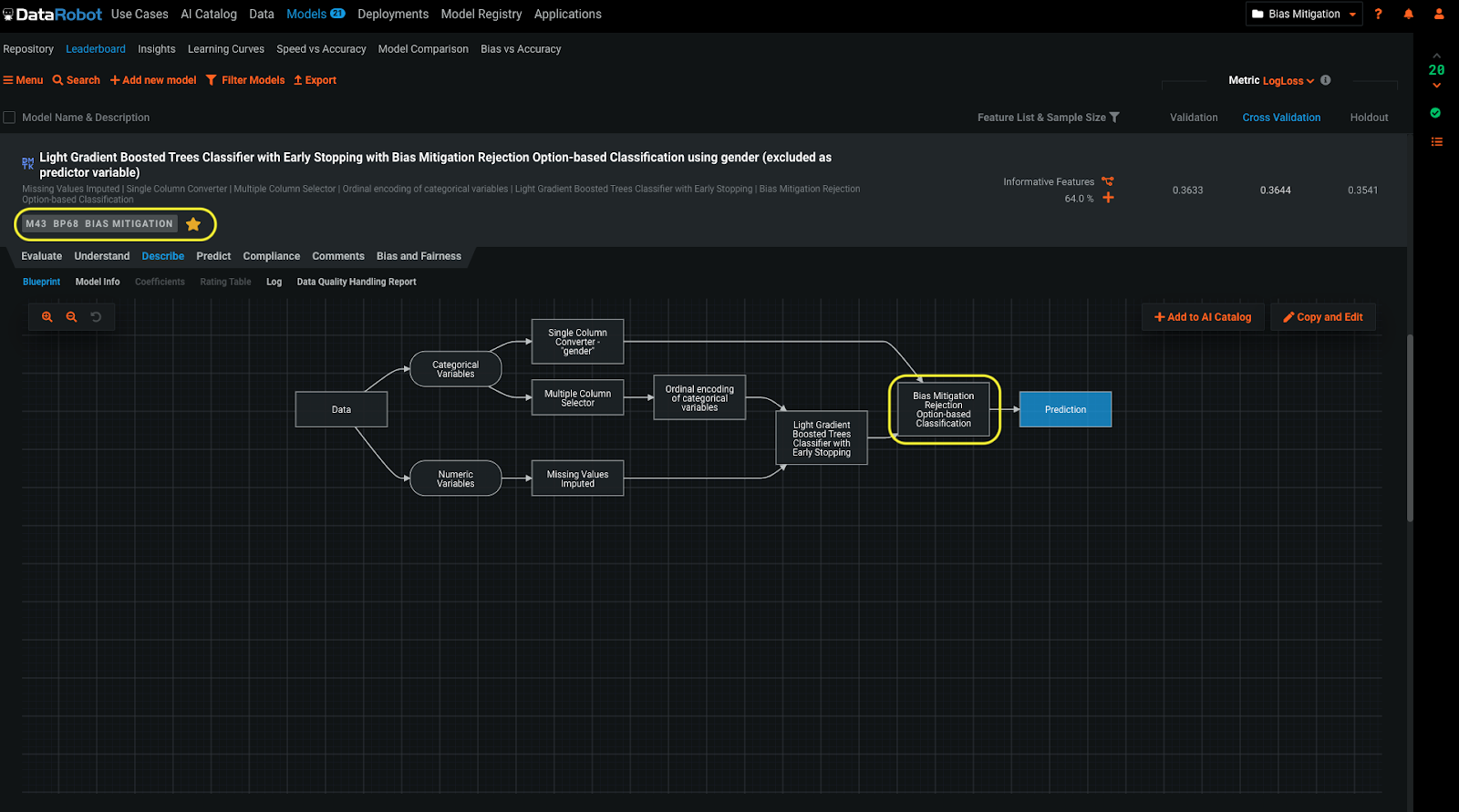

Our mitigated model can be identified on the leaderboard by the Bias Mitigation badge. If we look at the Blueprint, we see there is a new Bias Mitigation task. Otherwise, it is the same as the original model.

Looking at Per-Class Bias under Bias and Fairness will show us just how much more fair this model has become. Now women are receiving much fairer outcomes and are almost at parity with men. You will recall that previously the ratio of women receiving the positive outcome to men was 0.21. Now it is 0.89.

We can set thresholds of bias that are acceptable to us. A commonly used heuristic is the four-fifths rule, meaning classes need to receive the positive outcome at a ratio of 4/5 (or 0.80) to that of the most favored class. In the example above, the dotted line represents this fairness threshold. We see that mitigation has caused the predictions for women to cross this threshold and become acceptable to us.
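As a quick illustration of the four-fifths rule, the sketch below checks the two ratios from this post against a 0.80 threshold. The helper name `classes_below_threshold` is our own, and we assume men are the most favored class with a ratio of 1.0.

```python
def classes_below_threshold(ratios, threshold=0.8):
    """Return the classes whose ratio to the most favored class is under the threshold."""
    return sorted(name for name, ratio in ratios.items() if ratio < threshold)


# Ratios reported in this post; "male" = 1.0 is an assumption (most favored class).
before_mitigation = {"male": 1.0, "female": 0.21}
after_mitigation = {"male": 1.0, "female": 0.89}

print(classes_below_threshold(before_mitigation))  # ['female']
print(classes_below_threshold(after_mitigation))   # []
```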

Automatic Mitigation

To configure mitigation to run automatically, we need to navigate to Bias and Fairness under Advanced Options prior to starting a project. Looking at the Bias Mitigation section, we see the same options as before. We’ll use the same settings so we can see the result of automatic mitigation for our use case. Also shown in this screenshot are some of the settings required to configure Bias and Fairness.

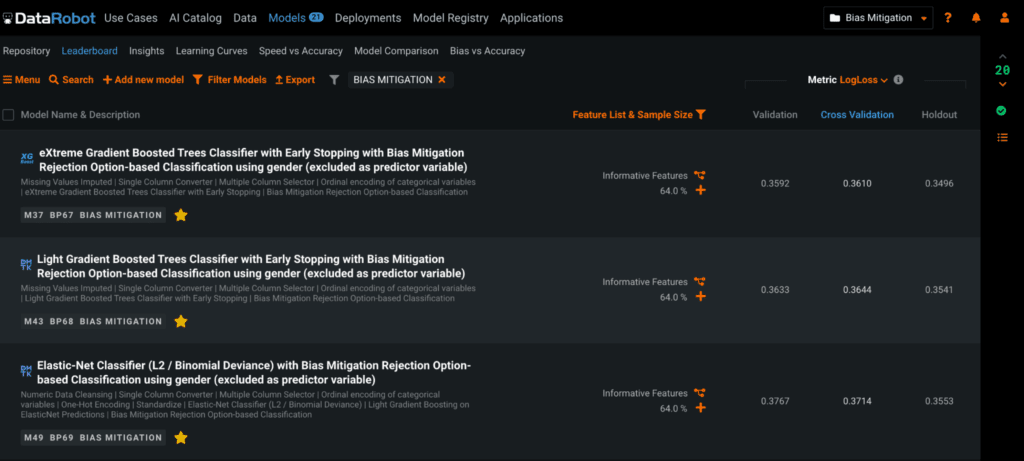

With these settings, we are ready to start Autopilot. DataRobot will automatically mitigate the top three models found and attempt to make them behave more fairly towards women.

We’ve filtered the leaderboard on the Bias Mitigation badge. We see three mitigated models, which represent the mitigated versions of the top three models.

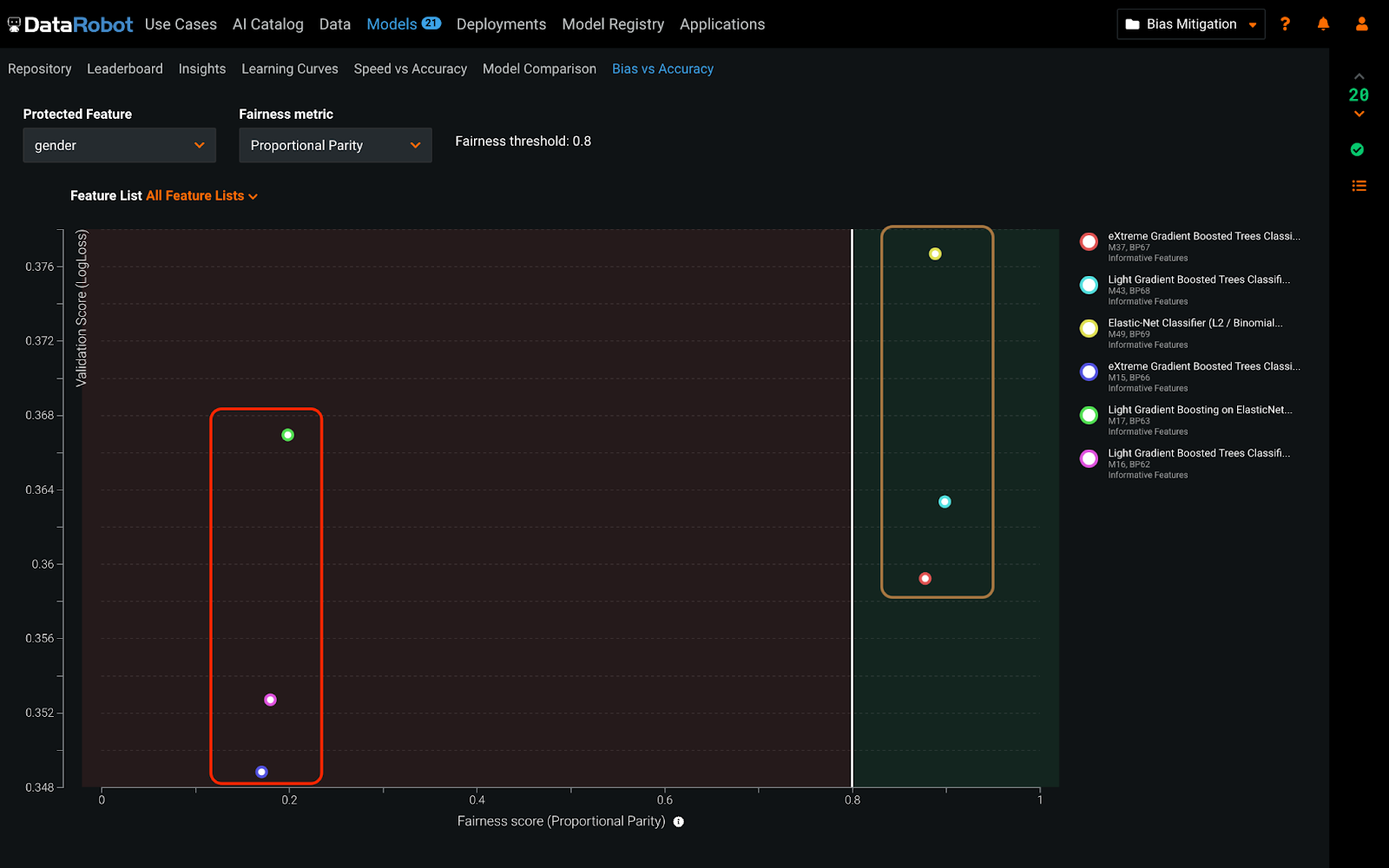

To compare all the mitigated vs. non-mitigated models together, we can use the Bias vs Accuracy insight. This feature plots the accuracy of the models against their bias score. In the red box are the original non-mitigated versions of the top three models. In the green box are the mitigated versions of those models. We see that they have become much more fair and have even crossed the fairness threshold of 0.8 that we set for this model.

Conclusion

Evaluating AI bias is an important part of making sure your models are not perpetuating harm. With DataRobot Bias and Fairness, you can test your models for bias and take corrective action to make them behave more fairly.
