Generative AI

Accelerate development with pre-built generative AI applications and components. Test, iterate, and ship 10X more use cases with pre-built applications that include every customizable component needed to run in production.

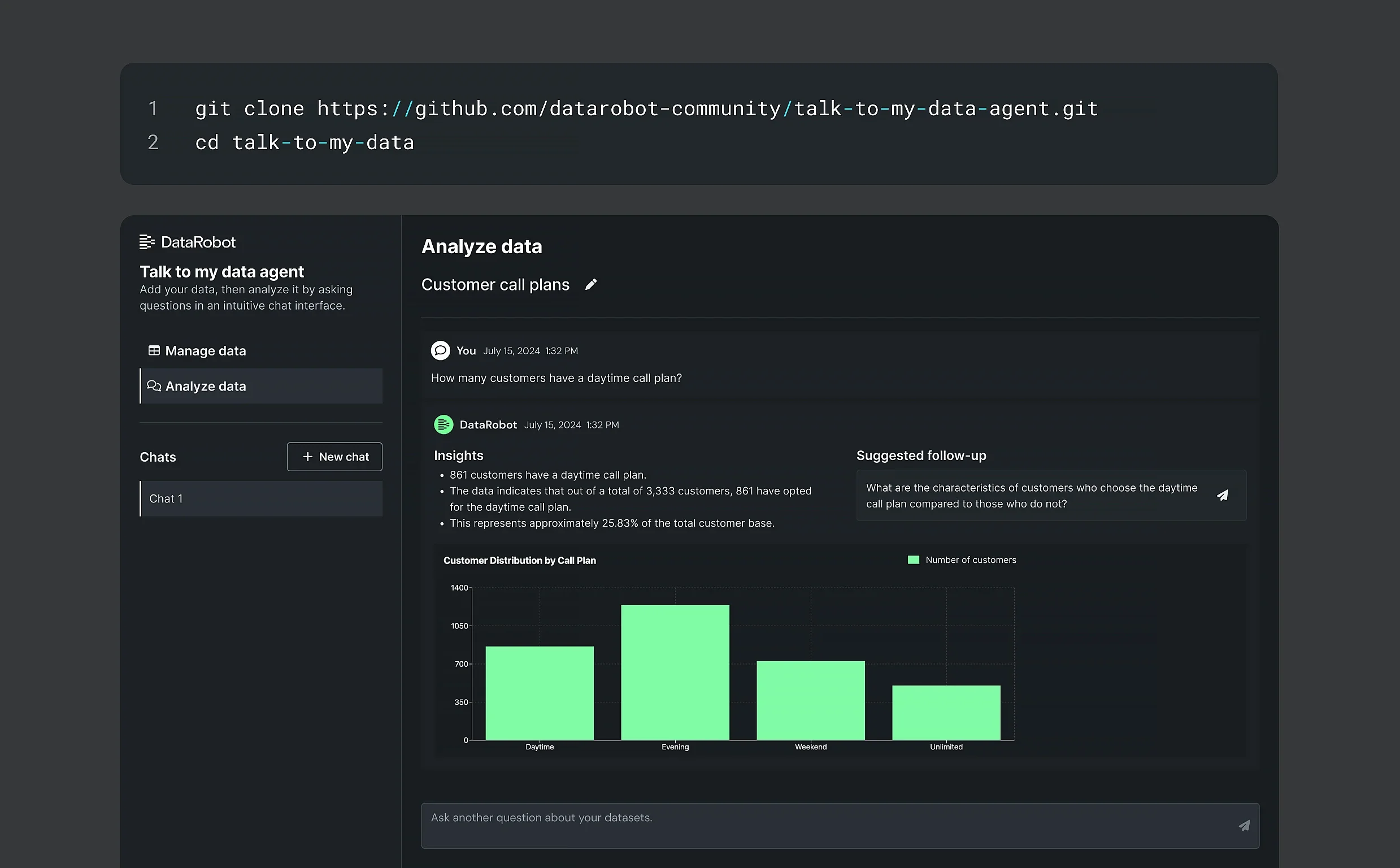

Empower data decision makers to securely gather the deep insights they need from company tabular data and documents.

Scale business operations by repeatedly creating content based on customer data and predictions.

Enable better understanding of predictive analytics by augmenting time series forecasts with generative AI-driven summaries and predictive explanations.

Develop across ecosystems with GUI- and code-based generative AI tooling. Rapidly develop, experiment with, and deploy any LLM, SLM, vector database, or embedding model while DataRobot handles all credentials.

Mix and match over 70 generative AI models and embedding models without having to secure API keys or manage accounts.

Optimize generative AI workflow latency, cost, and availability by running large workloads across multiple cloud resources.

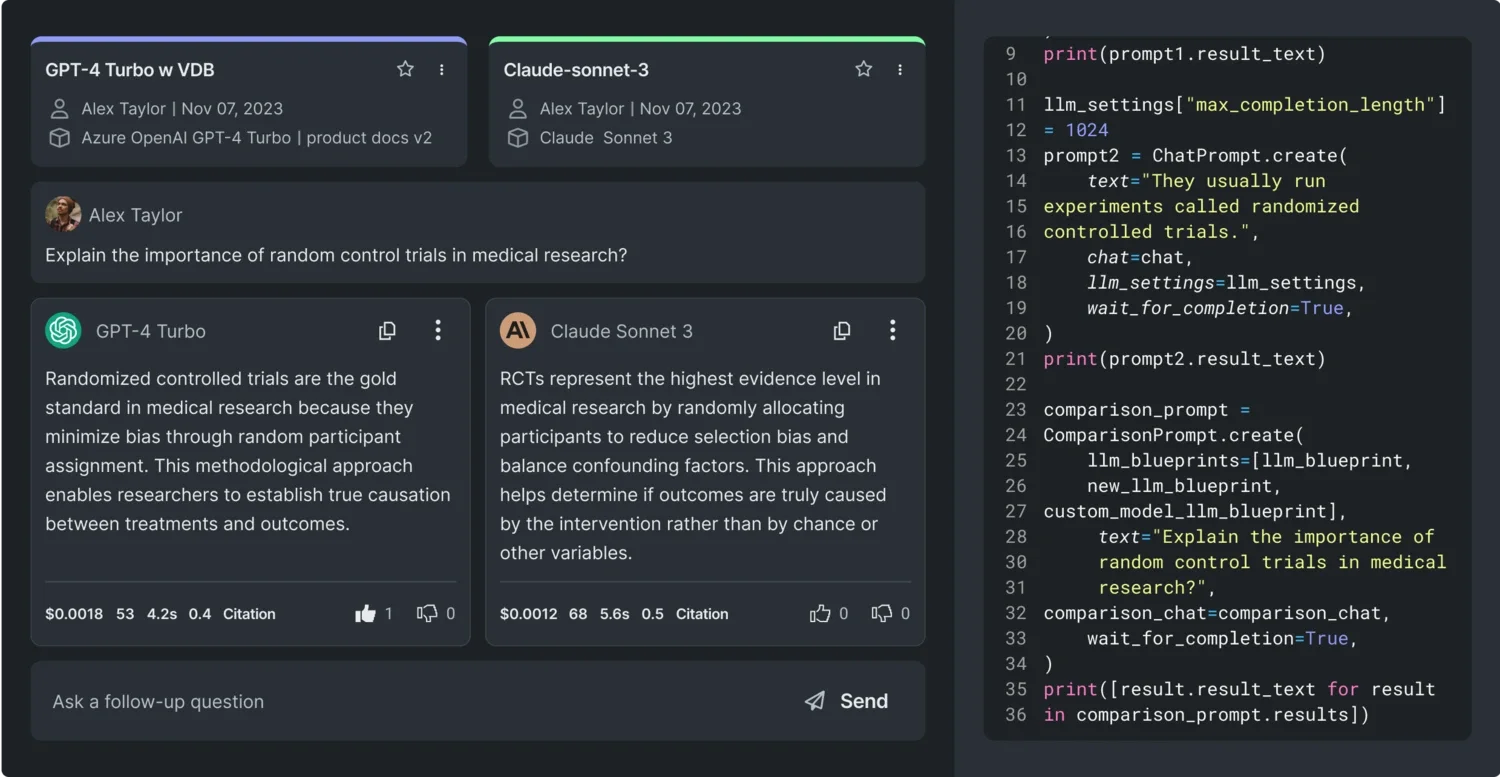

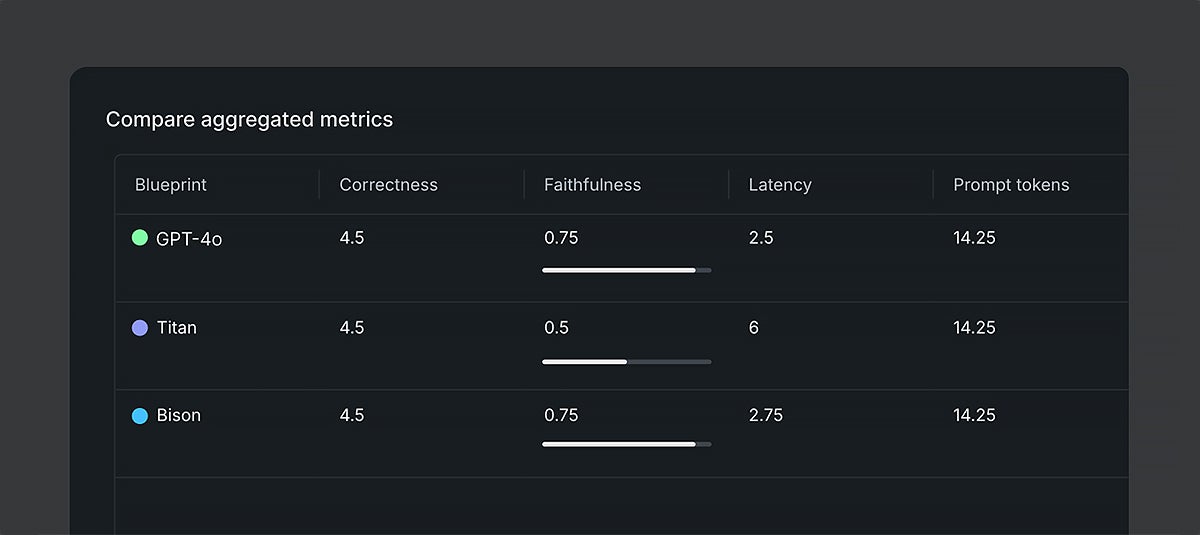

Test prompts, tweak settings, and compare outputs to find the best-performing configuration. Select settings and rapidly experiment with different prompts to test which model produces the best output.

Connect your LLM, vector database, retrievers, and prompts into a single endpoint you can call from anywhere.

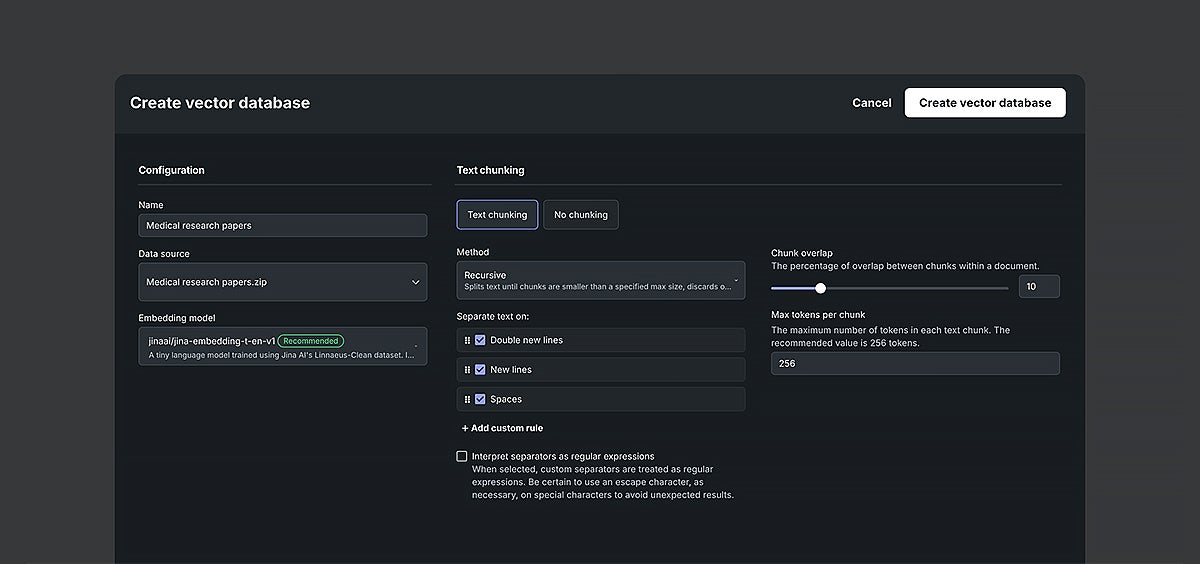

Build advanced retrieval-augmented generation (RAG) systems. Seamlessly incorporate RAG into your generative AI workflows, either by bringing your own external vector database, such as Pinecone or Elasticsearch, or by rapidly experimenting with multiple chunking strategies and context features to build your own.

Remove barriers to rapid exploration by seamlessly changing chunk sizes or splitting text semantically by phrase.
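As a generic illustration of these chunking options (not DataRobot's API), the Python sketch below assumes plain-string input and shows two strategies: fixed-size character windows with overlap, and a simple punctuation-based sentence packer. The sentence packer is a stand-in for semantic splitting, which in practice usually relies on embeddings. The function names `fixed_size_chunks` and `sentence_chunks` are hypothetical.

```python
import re


def fixed_size_chunks(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into windows of chunk_size characters; neighbours share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once a window has reached the end of the text, so no window is redundant.
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def sentence_chunks(text: str, max_chars: int) -> list[str]:
    """Greedily pack whole sentences into chunks of at most max_chars characters.

    A sentence longer than max_chars becomes its own (oversized) chunk.
    Sentence boundaries are guessed from ., ! or ? followed by whitespace.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


print(fixed_size_chunks("abcdefghij", 4, 2))             # ['abcd', 'cdef', 'efgh', 'ghij']
print(sentence_chunks("One. Two. Three.", max_chars=9))  # ['One. Two.', 'Three.']
```

Comparing strategies then comes down to changing `chunk_size`, `overlap`, or `max_chars` and re-indexing.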

Improve retrieval speeds and overall accuracy by indexing vectors with metadata.
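To make metadata indexing concrete, here is a minimal in-memory sketch, assuming each record stores an embedding vector and a metadata dictionary. The `Record` class and `search` function are hypothetical and not part of any DataRobot or vector-database API. Filtering on metadata first shrinks the candidate set, so fewer cosine-similarity comparisons are needed and off-topic documents cannot be retrieved. Production vector databases such as Pinecone or Elasticsearch perform this filtering server-side with their own query syntax.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    """One indexed chunk: its text, its embedding vector, and arbitrary metadata."""
    text: str
    vector: list[float]
    metadata: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(records: list[Record], query: list[float],
           filters: Optional[dict] = None, top_k: int = 3) -> list[Record]:
    """Keep records whose metadata matches every filter, then rank survivors by similarity."""
    filters = filters or {}
    candidates = [r for r in records
                  if all(r.metadata.get(k) == v for k, v in filters.items())]
    candidates.sort(key=lambda r: cosine(r.vector, query), reverse=True)
    return candidates[:top_k]


records = [
    Record("Q3 revenue summary", [1.0, 0.0], {"dept": "finance"}),
    Record("Onboarding guide", [0.9, 0.1], {"dept": "hr"}),
    Record("Q2 revenue summary", [0.7, 0.7], {"dept": "finance"}),
]
hits = search(records, [1.0, 0.0], filters={"dept": "finance"}, top_k=2)
print([r.text for r in hits])  # ['Q3 revenue summary', 'Q2 revenue summary']
```

Without the `dept` filter, "Onboarding guide" would rank second on similarity alone; the metadata filter keeps it out of a finance query.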

Boost context understanding by rewriting prompts and splitting queries based on chat history.

Leverage on-demand GPUs to host your own embedding model or generate embeddings with DataRobot.

Seamlessly evaluate workflow performance. Rapidly benchmark and compare models using specialized LLMs from any ecosystem or using ground truth datasets.

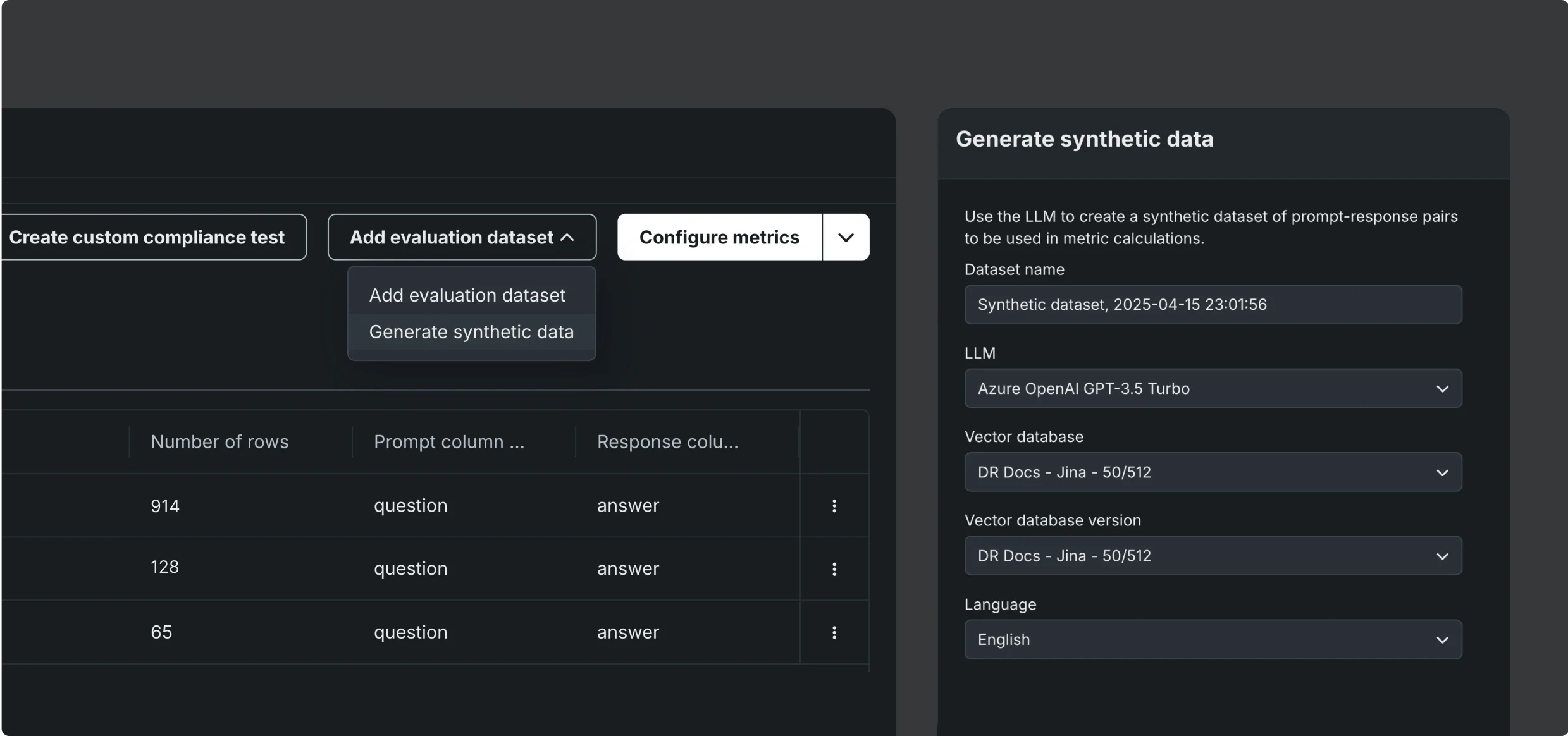

Synthetically generate thousands of question-answer pairs in seconds. Jumpstart generative AI workflow evaluation by automatically building fully customizable ground truth datasets from the entire contents of your existing vector databases.

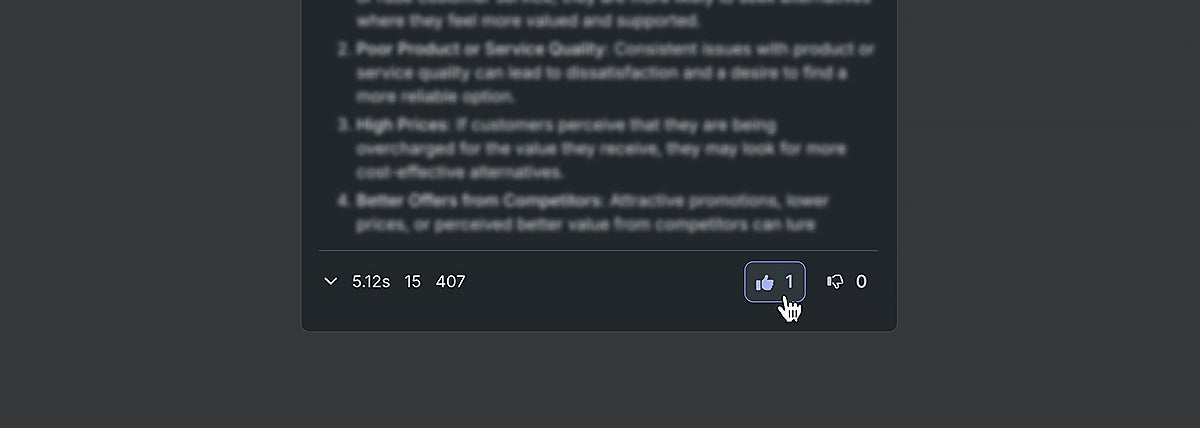

Facilitate real-time stakeholder feedback. Ensure your generative AI model meets users' needs by automatically collecting human feedback on app outputs to fine-tune responses. Tailor evaluation criteria to focus on what matters most for your use case.

Enable quick model adoption with a Q&A experience. With a single click, create a polished Q&A prototype app that you can share with key stakeholders via URL or use to collect internal feedback from your team.

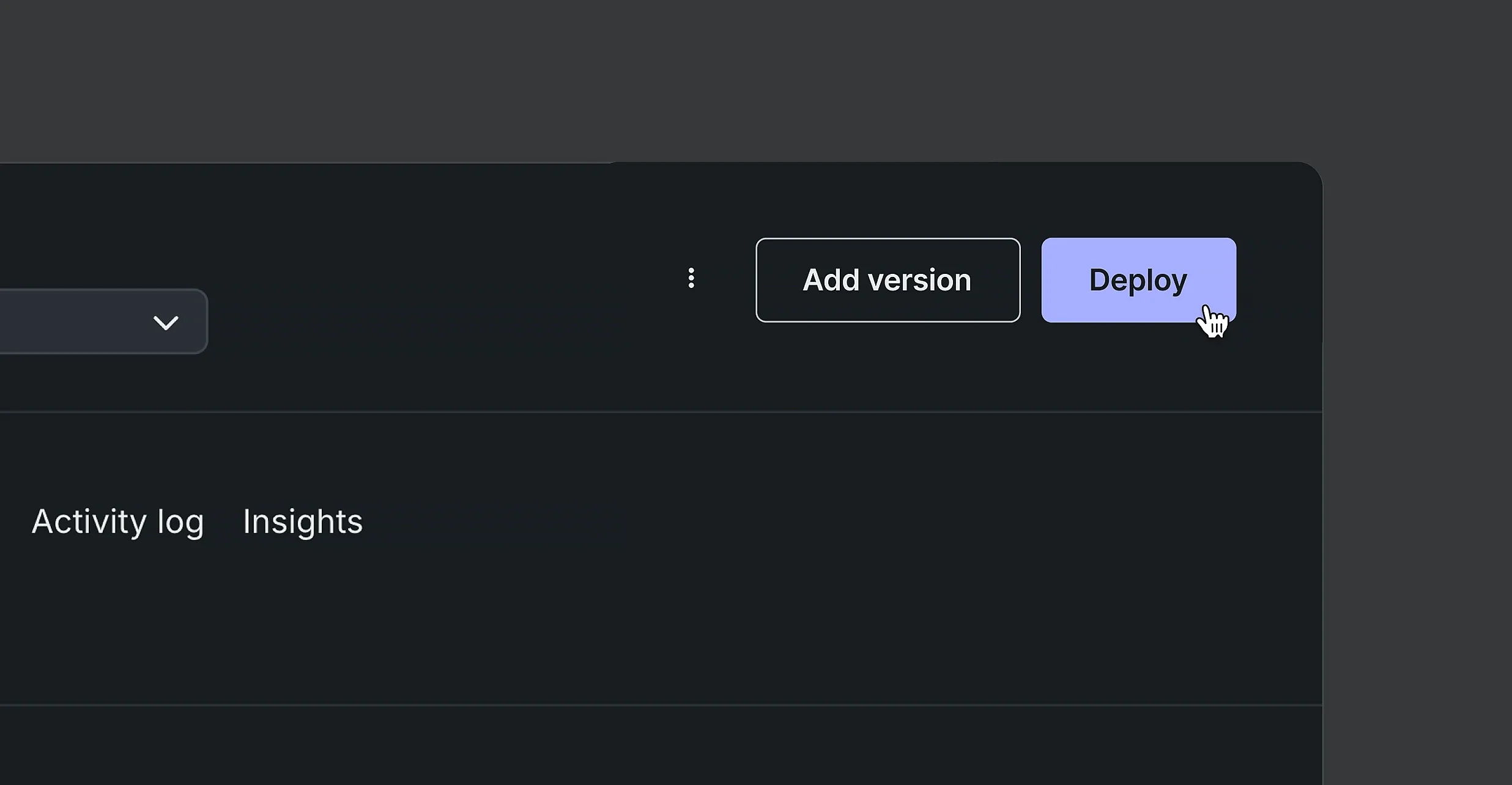

Trivially easy deployment. Instantly ship generative AI with an infrastructure-as-code workflow, a declarative API, or a GUI button; deployments are automatically managed and configured for NVIDIA NIM. Seamlessly hot-swap new versions without breaking pipelines or interrupting production workflows.

Infuse AI apps into your business. Seamlessly integrate generative AI applications with existing chat applications to meet business stakeholders where they already work.

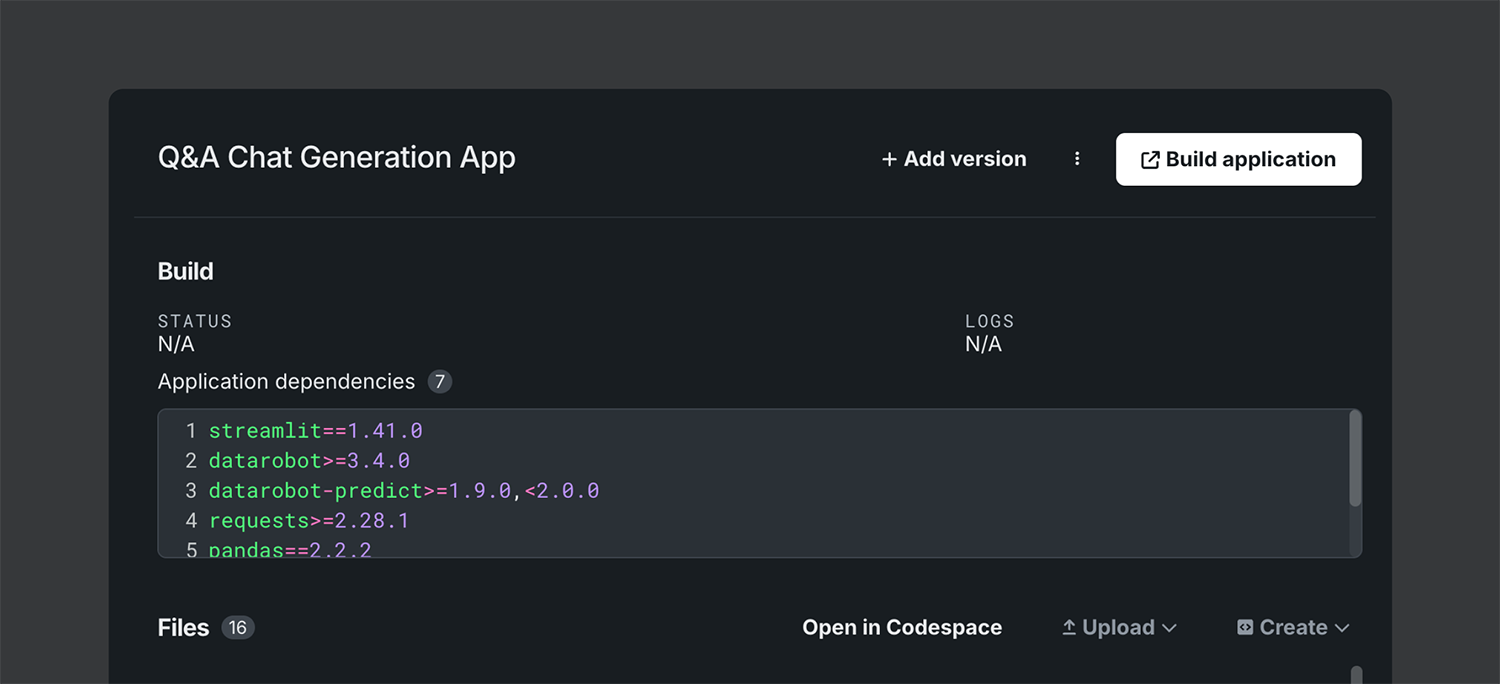

Build app UIs from scratch with your favorite development tools. Remove the complexity of setting up development environments for your favorite Python visualization frameworks.

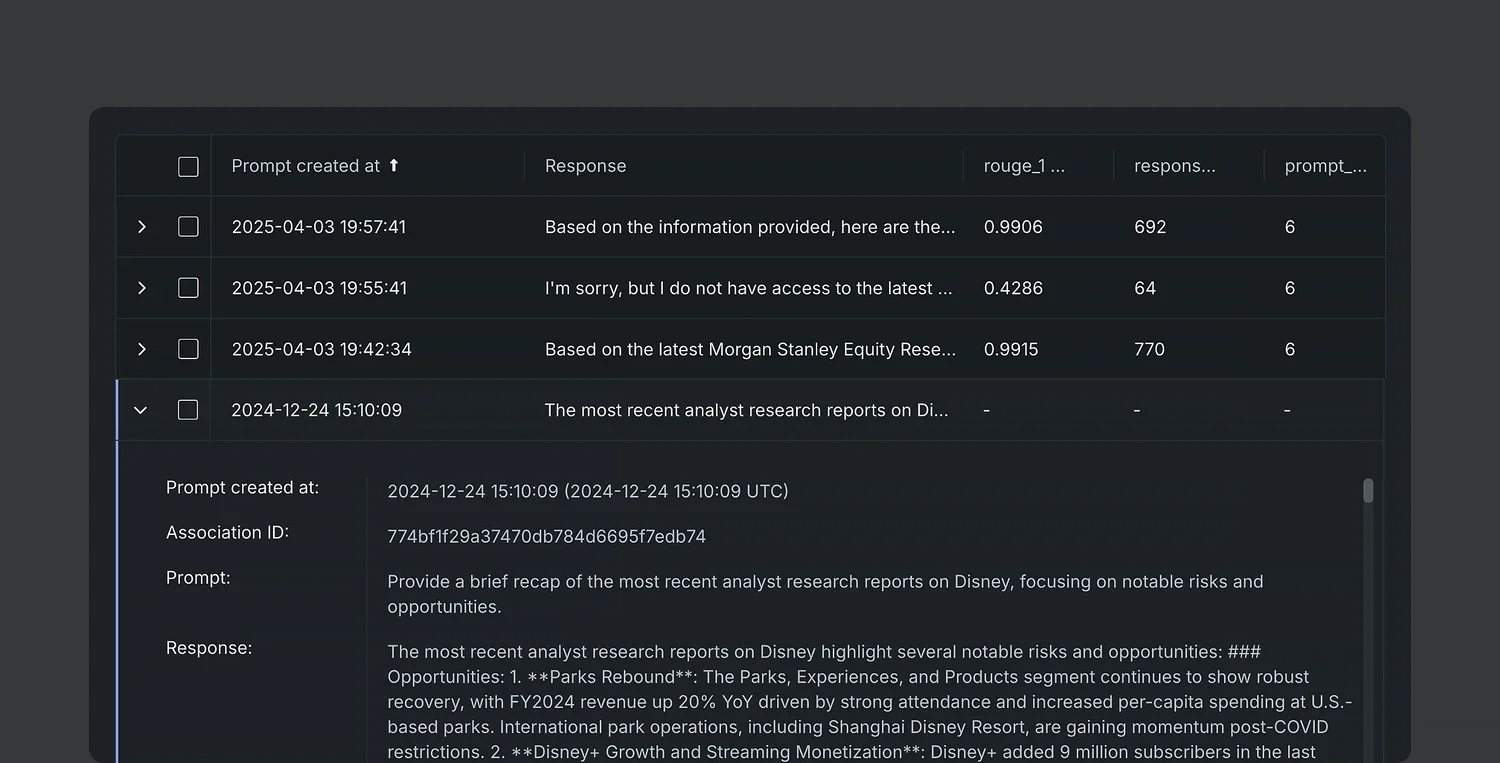

Ensure quality by tracing every node of your generative AI workflow. Quickly debug issues and trace workflow reasoning and outputs with out-of-the-box LLM lineage that includes every prompt and vector input.

Automatically intervene when issues are detected. Select from a library of LLM guards, or bring your own, to continuously monitor for safety, quality, and cost risks and prevent harmful outputs in real time.

Ensure quality and prevent hallucinations with an extensive library of guards, including specialized LLM-as-a-judge guards.

Natively select, register, and deploy NVIDIA NeMo guards from the NVIDIA gallery on premises or on any cloud.

Track prompt and response tokens to calculate and minimize production costs.
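Token-based cost tracking reduces to simple arithmetic: cost = (prompt tokens / 1,000) × prompt price + (response tokens / 1,000) × response price. The sketch below assumes per-1,000-token pricing with hypothetical rates; actual rates and billing units vary by provider and model, and the `Pricing`, `request_cost`, and `total_cost` names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # Hypothetical USD prices per 1,000 tokens; substitute your provider's real rates.
    prompt_per_1k: float
    response_per_1k: float


def request_cost(prompt_tokens: int, response_tokens: int, pricing: Pricing) -> float:
    """Cost of one call: each token type is billed at its own per-1,000 rate."""
    return ((prompt_tokens / 1000) * pricing.prompt_per_1k
            + (response_tokens / 1000) * pricing.response_per_1k)


def total_cost(usage: list[tuple[int, int]], pricing: Pricing) -> float:
    """Sum costs over logged (prompt_tokens, response_tokens) pairs."""
    return sum(request_cost(p, r, pricing) for p, r in usage)


pricing = Pricing(prompt_per_1k=0.50, response_per_1k=1.50)
usage = [(1200, 300), (800, 200)]
print(round(total_cost(usage, pricing), 2))  # 1.75
```

Here the two requests cost 1.05 and 0.70; because response tokens are priced higher in this example, trimming verbose outputs lowers cost faster than trimming prompts of the same length.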

Prevent misuse, including prompt injection and PII leakage, with automated response monitoring and intervention.
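As a deliberately simple illustration of response-side intervention, the sketch below scans an LLM response for email addresses and US-style phone numbers and redacts them before the text is returned. The regular expressions and the `redact_pii` function are illustrative assumptions and far from exhaustive; production PII guards typically combine many patterns with trained detection models, and prompt-injection detection is not covered here.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(response: str) -> tuple[str, list[str]]:
    """Replace each PII match with a [LABEL] placeholder and report which labels fired."""
    detected = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(response):
            detected.append(label)
            response = pattern.sub(f"[{label}]", response)
    return response, detected


text, flags = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
print(text)   # Contact [EMAIL] or [PHONE].
print(flags)  # ['EMAIL', 'PHONE']
```

In a monitoring pipeline, a non-empty `flags` list is the signal a guard could use to block the response, raise an alert, or log the incident.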

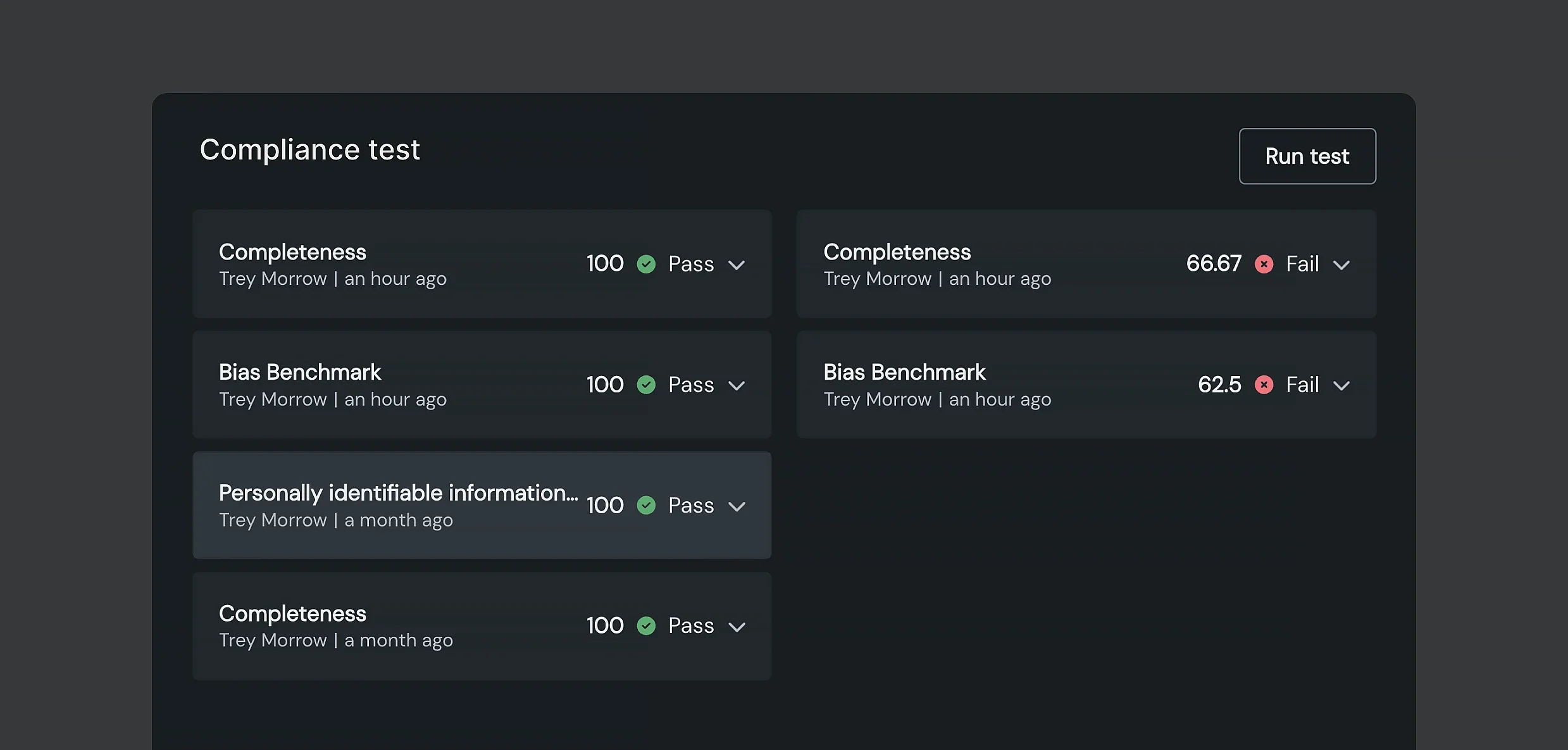

Reduce regulatory risks with automated compliance checks. Stay ahead of evolving regulations with pre-built compliance checks or build custom tests using third-party data to meet your organization’s requirements.

Ensure every workflow records its identified risks and the documentation needed to meet regulatory requirements.

Ensure your workflows are safe to use under the EU AI Act, NIST RMF, NYC Local Law 144, Colorado Law SB21-169, California Laws AB-2013 and SB-1047, and more.

Run one-click tests for completeness, PII detection, toxicity, bias, and jailbreak attempts. Create custom tests based on your organization’s specific internal or external requirements.

Automated compliance documentation. Reduce manual effort and generate compliance-ready documentation with a click.

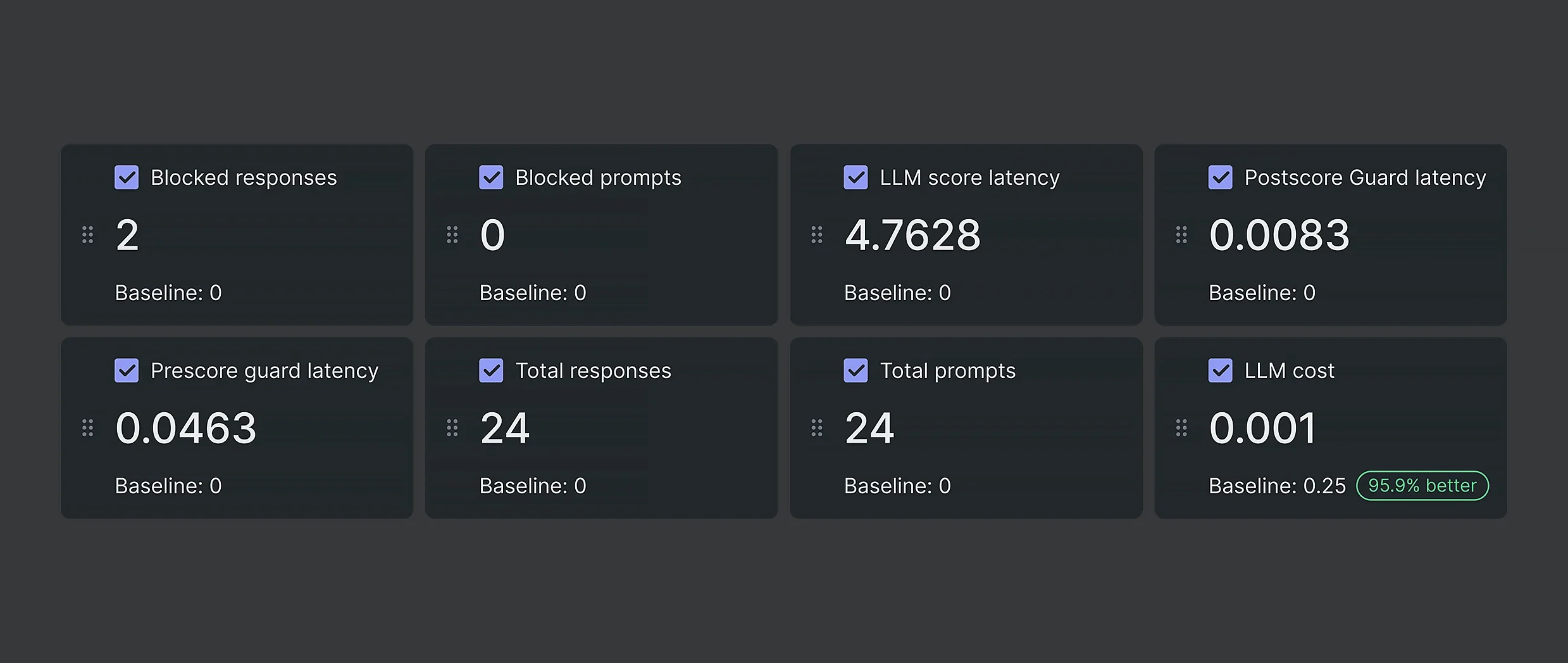

Track the metrics that matter most. Have peace of mind knowing your models are continuously monitored in production with out-of-the-box and customizable metrics tailored to your needs.