What’s your AI risk mitigation plan? Just as you wouldn’t set off on a journey without checking the roads, knowing your route, and preparing for possible delays or mishaps, you need a model risk management plan in place for your machine learning projects. A well-designed model combined with proper AI governance can help minimize unintended outcomes such as AI bias. With the right mix of people, processes, and technology in place, you can reduce the risks associated with your AI projects.

Is There Such a Thing as Unbiased AI?

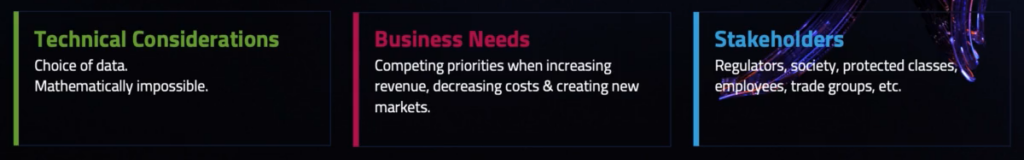

When discussing AI governance, a common concern is bias. Is it possible to have an unbiased AI model? The hard truth is no, and you should be wary of anyone who tells you otherwise. There are mathematical reasons a model can’t be unbiased (for example, when outcome base rates differ between groups, several common statistical definitions of fairness cannot all be satisfied at the same time). It’s just as important to recognize that factors like competing business needs also contribute to the problem. This is why good AI governance is so important.

So rather than trying to create a model that’s unbiased, aim to create one that is fair and behaves as intended when deployed. A fair model is one whose results are measured across sensitive attributes of the data (e.g., gender, race, age, disability, and religion) so that differences in outcomes between groups can be detected and addressed.
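As a rough illustration of measuring results across a sensitive attribute, the Python sketch below computes the rate of positive predictions for each group and the ratio of the lowest rate to the highest, a metric often called the disparate impact ratio. It is a minimal sketch assuming binary predictions (1 for a positive outcome) paired with a group label; the function names, sample data, and 0.8 review threshold are illustrative assumptions, not DataRobot functionality, and a real fairness review would typically examine several metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rate_by_group(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Return the share of positive predictions (1) for each group label.

    Assumes each prediction is 0 or 1.
    """
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives a positive outcome, so the rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical (sensitive attribute value, model prediction) pairs.
    predictions = [
        ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
        ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 1),
    ]
    rates = positive_rate_by_group(predictions)
    ratio = disparate_impact_ratio(rates)
    print(rates)
    print(f"disparate impact ratio: {ratio:.2f}")
    # Hypothetical review threshold; the right value is a governance decision.
    if ratio < 0.8:
        print("flag for review")
```

With the sample data, group_a receives a positive prediction 75% of the time and group_b 50% of the time, giving a ratio of about 0.67, so the example flags the model for review. The 0.8 cutoff echoes the "four-fifths rule" used in U.S. employment guidelines, but the appropriate metric and threshold are decisions for your governance process.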

Validating Fairness Throughout the AI Lifecycle

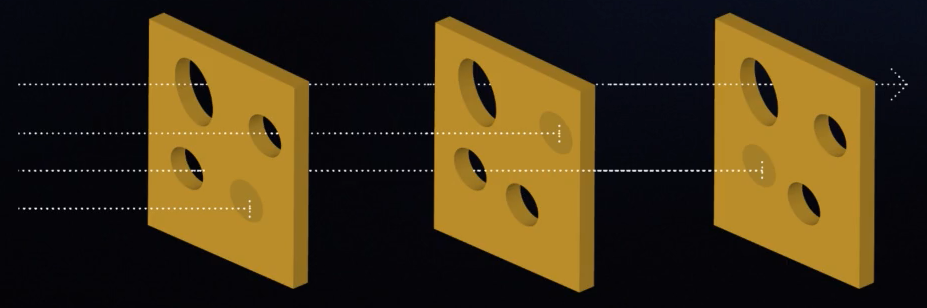

One risk mitigation method is the Swiss cheese framework, a three-pronged approach that addresses risk across multiple dimensions of the AI lifecycle. The framework recognizes that no single layer of defense can ensure fairness by removing all hazards. But when multiple lines of defense overlap, the gaps in one layer are likely to be covered by another, which makes the combination a powerful form of risk management. The model has been proven in aviation and healthcare for decades, and it is just as valid for enterprise AI platforms.

The first slice is about getting the right people involved. You need people who can identify the need, construct the model, and monitor its performance. A diversity of voices helps the model align with an organization’s values.

The second slice is having MLOps processes in place that allow for repeatable deployments. Standardized processes make it possible to track model updates, maintain model accuracy through continuous learning, and enforce approval workflows. Workflow approval, monitoring, continuous learning, and version control are all part of a good system.
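To make the approval and version-control ideas concrete, here is a minimal in-memory sketch in Python of a model registry that assigns a version number to every model update, records each action in an audit log, and refuses to deploy any version that has not been approved. The class names, methods, and artifact path are hypothetical and do not correspond to DataRobot’s or any other product’s API; an MLOps platform would persist this state and integrate it with your review tooling.

```python
from dataclasses import dataclass, field
from typing import List, Optional


class ApprovalRequiredError(Exception):
    """Raised when someone tries to deploy a version nobody has approved."""


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # hypothetical location of the trained model
    approved_by: Optional[str] = None


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: every update gets a new version
    number, every action is logged, and only approved versions deploy."""
    versions: List[ModelVersion] = field(default_factory=list)
    deployed: Optional[int] = None
    audit_log: List[str] = field(default_factory=list)

    def register(self, artifact_uri: str) -> int:
        version = len(self.versions) + 1
        self.versions.append(ModelVersion(version, artifact_uri))
        self.audit_log.append(f"registered v{version}: {artifact_uri}")
        return version

    def approve(self, version: int, reviewer: str) -> None:
        self._get(version).approved_by = reviewer
        self.audit_log.append(f"v{version} approved by {reviewer}")

    def deploy(self, version: int) -> None:
        model = self._get(version)
        if model.approved_by is None:
            raise ApprovalRequiredError(f"v{version} has not been approved")
        self.deployed = version
        self.audit_log.append(f"deployed v{version}")

    def _get(self, version: int) -> ModelVersion:
        if not 1 <= version <= len(self.versions):
            raise KeyError(f"unknown version v{version}")
        return self.versions[version - 1]


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.register("models/churn/v1")  # hypothetical path
    try:
        registry.deploy(v1)  # blocked: no approval yet
    except ApprovalRequiredError as err:
        print(err)
    registry.approve(v1, reviewer="risk-team")
    registry.deploy(v1)
    print("\n".join(registry.audit_log))
```

Making approval a hard precondition of `deploy()`, rather than a convention people are expected to follow, is what turns the approval workflow into an enforced control, and the audit log gives reviewers a record of who approved what.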

The third slice is the MLDev technology that supports common practices, auditable workflows, version control, and consistent model KPIs. You need tools to evaluate the model’s behavior and confirm its integrity. Drawing those tools from a limited, interoperable set of technologies makes it easier to identify risks such as technical debt. The more custom components your MLDev environment has, the more likely you are to introduce unnecessary complexity, unintended consequences, and bias.

The Challenge of Complying with New Regulations

All of these layers also need to be considered against the regulatory landscape. In the U.S., for example, regulation can come from local, state, and federal jurisdictions. The EU and Singapore are taking similar steps to codify regulations concerning AI governance.

New models and techniques are appearing at an explosive pace, yet teams also need the flexibility to adapt as new laws are implemented. Complying with these proposed regulations is becoming increasingly challenging.

In these proposals, AI regulation isn’t limited to fields like insurance and finance. We’re seeing regulatory guidance reach into fields such as education, safety, healthcare, and employment. If you’re not prepared for AI regulation in your industry now, it’s time to start thinking about it—because it’s coming.

Document Design and Deployment for Regulations and Clarity

Model risk management will become commonplace as more regulations are introduced and enforced. Being able to document your design and deployment choices will help you move quickly and make sure you’re not left behind. If you have the layers described above in place, explaining how your models were built and how they behave should be much easier.

- People, process, and technology are your internal lines of defense when it comes to AI governance.

- Be sure you understand who all of your stakeholders are, including the ones that might get overlooked.

- Look for ways to put workflow approvals, version control, and robust monitoring in place.

- Make sure you think about explainable AI and workflow standardization.

- Look for ways to codify your processes. Create a process, document the process, and stick to the process.

Learn how your team can develop, deliver, and govern AI apps and AI agents with DataRobot.