Large language models, also known as foundation models, have gained significant traction in the field of machine learning. These models are pre-trained on large datasets, which allows them to perform well on a variety of tasks without requiring as much training data. Learn how you can easily deploy a pre-trained foundation model using the DataRobot AI Platform capabilities, then put the model into production. By leveraging the power of a pre-trained model, you can save time and resources while still achieving high performance in your machine learning applications.

What Are Large Language Models?

The creation of foundation models is one of the key developments in the field of large language models that is creating a lot of excitement and interest amongst data scientists and machine learning engineers. These models are trained on massive amounts of text data using deep learning algorithms. They have the ability to generate human-like language that is coherent and relevant in a given context and to process and understand natural language at a level that was previously thought to be impossible. As a result, they have the potential to revolutionize the way that we interact with machines and solve a wide range of machine learning problems.

These developments have allowed researchers to create models that can perform a wide range of natural language processing tasks, such as machine translation, summarization, question answering and even dialogue generation. They can also be used for creative tasks, such as generating realistic text, which can be useful for a variety of applications, such as generating product descriptions or creating news articles.

Overall, the recent developments in large language models are very exciting, and have the potential to greatly improve our ability to solve machine learning problems and interact with machines in a more natural and intuitive way.

Get Started with Language Models Using Hugging Face

As many machine learning practitioners already know, one easy way to get started with language models is by using Hugging Face. Hugging Face model hub is a platform offering a collection of pre-trained models that can be easily downloaded and used for a wide range of natural language processing tasks.

To get started with a language model from the Hugging Face model hub, you simply need to install the Hugging Face library in your local notebook or DataRobot Notebooks if that’s what you use. If you already run your experiments on the DataRobot GUI, you could even add it as a custom task.

Once installed, you can choose a model that suits your needs. Then you can use the model to perform tasks such as text generation, classification, and translation. The models are easy to use and can be fine-tuned to your specific needs, making them a powerful tool for solving a variety of natural language processing problems.

If you don’t want to set up a local runtime environment, you can get started with a Google Colab notebook on a CPU/GPU/TPU runtime, download your model, and get the model predictions in just a few lines.

As an example, getting started with a BERT model for question answering (bert-large-uncased-whole-word-masking-finetuned-squad) is as easy as executing these lines:

!pip install transformers==4.25.1

from transformers import AutoTokenizer, TFBertForQuestionAnswering

MODEL = "bert-large-uncased-whole-word-masking-finetuned-squad"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

model = TFBertForQuestionAnswering.from_pretrained(MODEL)Deploying Language Models to Production

After you try out some models, possibly further fine-tune them for your specific use cases, and get them ready for production, you’ll need a serving environment to host your artifacts. Besides just an environment to serve the model, you’ll need to monitor its performance, health, data and prediction drift, and an easy way of retraining it without disturbing your production workflows and your downstream applications that consume your model’s output.

This is where the DataRobot AI Platform comes into play. DataRobot services provide a platform for hosting and deploying custom model packages in various ML frameworks such as PyTorch, Tensorflow, ONNX, and sk-learn, allowing organizations to easily integrate their pre-trained models into their existing applications and consume them for their business needs.

To host a pre-trained language model on DataRobot services, you simply need to upload the model to the platform, build its runtime environment with your custom dependency packages, and deploy it on DataRobot servers. Your deployment will be ready in a few minutes, and then you can send your prediction requests to your deployment endpoint and enjoy your model in production.

While you can do all these operations from the DataRobot UI, here we’ll show you how to implement the end-to-end workflow, using the Datarobot API in a notebook environment. So, let’s get started!

You can follow along this tutorial by creating a new Google Colab notebook or by copying our notebook from our DataRobot Community Repository and running the copied notebook on Google Colab.

Install dependencies

!pip install transformers==4.25.1 datarobot==3.0.2

from transformers import AutoTokenizer, TFBertForQuestionAnswering

import numpy as npDownload the BERT model from HuggingFace on the notebook environment

MODEL = "bert-large-uncased-whole-word-masking-finetuned-squad"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

model = TFBertForQuestionAnswering.from_pretrained(MODEL)

BASE_PATH = "/content/datarobot_blogpost"

tokenizer.save_pretrained(BASE_PATH)

model.save_pretrained(BASE_PATH)

Deploy to DataRobot

Create the inference (glue) script, ie. the custom.py file.

This inference script (custom.py file) acts as the glue between your model artifacts and the Custom Model execution in DataRobot. If this is the first time you’re creating a custom model on DataRobot, our public repository will be a great starting point, with many more examples for model templates in different ML frameworks and for different model types, such as binary or multiclass classification, regression, anomaly detection, or unstructured models like the one we’ll be building in our example.

%%writefile $BASE_PATH/custom.py

"""

Copyright 2021 DataRobot, Inc. and its affiliates.

All rights reserved.

This is proprietary source code of DataRobot, Inc. and its affiliates.

Released under the terms of DataRobot Tool and Utility Agreement.

"""

import json

import os.path

import os

import tensorflow as tf

import pandas as pd

from transformers import AutoTokenizer, TFBertForQuestionAnswering

import io

def load_model(input_dir):

tokenizer = AutoTokenizer.from_pretrained(input_dir)

tf_model = TFBertForQuestionAnswering.from_pretrained(

input_dir, return_dict=True

)

return tf_model, tokenizer

def log_for_drum(msg):

os.write(1, f"\n{msg}\n".encode("UTF-8"))

def _get_answer_in_text(output, input_ids, idx, tokenizer):

answer_start = tf.argmax(output.start_logits, axis=1).numpy()[idx]

answer_end = (tf.argmax(output.end_logits, axis=1) + 1).numpy()[idx]

answer = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end])

)

return answer

def score_unstructured(model, data, query, **kwargs):

global model_load_duration

tf_model, tokenizer = model

# Assume batch input is sent with mimetype:"text/csv"

# Treat as single prediction input if no mimetype is set

is_batch = kwargs["mimetype"] == "text/csv"

if is_batch:

input_pd = pd.read_csv(io.StringIO(data), sep="|")

input_pairs = list(zip(input_pd["abstract"], input_pd["question"]))

start = time.time()

inputs = tokenizer.batch_encode_plus(

input_pairs, add_special_tokens=True, padding=True, return_tensors="tf"

)

input_ids = inputs["input_ids"].numpy()

output = tf_model(inputs)

responses = []

for i, row in input_pd.iterrows():

answer = _get_answer_in_text(output, input_ids[i], i, tokenizer)

response = {

"abstract": row["abstract"],

"question": row["question"],

"answer": answer,

}

responses.append(response)

pred_duration = time.time() - start

to_return = json.dumps(

{

"predictions": responses,

"pred_duration": pred_duration,

}

)

else:

data_dict = json.loads(data)

abstract, question = data_dict["abstract"], data_dict["question"]

start = time.time()

inputs = tokenizer(

question,

abstract,

add_special_tokens=True,

padding=True,

return_tensors="tf",

)

input_ids = inputs["input_ids"].numpy()[0]

output = tf_model(inputs)

answer = _get_answer_in_text(output, input_ids, 0, tokenizer)

pred_duration = time.time() - start

to_return = json.dumps(

{

"abstract": abstract,

"question": question,

"answer": answer,

"pred_duration": pred_duration,

}

)

return to_returnCreate the requirements file

%%writefile $BASE_PATH/requirements.txt

transformersUpload model artifacts and inference script to DataRobot

import datarobot as dr

def deploy_to_datarobot(folder_path, env_name, model_name, descr):

API_TOKEN = "YOUR_API_TOKEN" #Please refer to https://docs.datarobot.com/en/docs/platform/account-mgmt/acct-settings/api-key-mgmt.html to get your token

dr.Client(token=API_TOKEN, endpoint='https://app.datarobot.com/api/v2/')

onnx_execution_env = dr.ExecutionEnvironment.list(search_for=env_name)[0]

custom_model = dr.CustomInferenceModel.create(

name=model_name,

target_type=dr.TARGET_TYPE.UNSTRUCTURED,

description=descr,

language='python'

)

print(f"Creating custom model version on {onnx_execution_env}...")

model_version = dr.CustomModelVersion.create_clean(

custom_model_id=custom_model.id,

base_environment_id=onnx_execution_env.id,

folder_path=folder_path,

maximum_memory=4096 * 1024 * 1024,

)

print(f"Created {model_version}.")

versions = dr.CustomModelVersion.list(custom_model.id)

sorted_versions = sorted(versions, key=lambda v: v.label)

latest_version = sorted_versions[-1]

print("Building the execution environment with dependency packages...")

build_info = dr.CustomModelVersionDependencyBuild.start_build(

custom_model_id=custom_model.id,

custom_model_version_id=latest_version.id,

max_wait=3600,

)

print(f"Environment build completed with {build_info.build_status}.")

print("Creating model deployment...")

default_prediction_server = dr.PredictionServer.list()[0]

deployment = dr.Deployment.create_from_custom_model_version(latest_version.id,

label=model_name,

description=descr,

default_prediction_server_id=default_prediction_server.id,

max_wait=600,

importance=None)

print(f"{deployment} is ready!")

return deploymentCreate the model deployment

deployment = deploy_to_datarobot(BASE_PATH,

"Keras",

"blog-bert-tf-questionAnswering",

"Pretrained BERT model, fine-tuned on SQUAD for question answering")Test with prediction requests

The following script is designed to make predictions against your deployment, and you can grab the same script by opening up your DataRobot account, going to the Deployments tab, opening the deployment you just created, going to the Predictions tab, and then opening up the Prediction API Scripting Code -> Single section.

It will look like the example below where you’ll see your own API_KEY and DATAROBOT_KEY filled in.

"""

Usage:

python datarobot-predict.py <input-file> [mimetype] [charset]

This example uses the requests library which you can install with:

pip install requests

We highly recommend that you update SSL certificates with:

pip install -U urllib3[secure] certifi

"""

import sys

import json

import requests

API_URL = 'https://mlops-dev.dynamic.orm.datarobot.com/predApi/v1.0/deployments/{deployment_id}/predictionsUnstructured'

API_KEY = 'YOUR_API_KEY'

DATAROBOT_KEY = 'YOUR_DATAROBOT_KEY'

# Don't change this. It is enforced server-side too.

MAX_PREDICTION_FILE_SIZE_BYTES = 52428800 # 50 MB

class DataRobotPredictionError(Exception):

"""Raised if there are issues getting predictions from DataRobot"""

def make_datarobot_deployment_unstructured_predictions(data, deployment_id, mimetype, charset):

"""

Make unstructured predictions on data provided using DataRobot deployment_id provided.

See docs for details:

https://app.datarobot.com/docs/predictions/api/dr-predapi.html

Parameters

----------

data : bytes

Bytes data read from provided file.

deployment_id : str

The ID of the deployment to make predictions with.

mimetype : str

Mimetype describing data being sent.

If mimetype starts with 'text/' or equal to 'application/json',

data will be decoded with provided or default(UTF-8) charset

and passed into the 'score_unstructured' hook implemented in custom.py provided with the model.

In case of other mimetype values data is treated as binary and passed without decoding.

charset : str

Charset should match the contents of the file, if file is text.

Returns

-------

data : bytes

Arbitrary data returned by unstructured model.

Raises

------

DataRobotPredictionError if there are issues getting predictions from DataRobot

"""

# Set HTTP headers. The charset should match the contents of the file.

headers = {

'Content-Type': '{};charset={}'.format(mimetype, charset),

'Authorization': 'Bearer {}'.format(API_KEY),

'DataRobot-Key': DATAROBOT_KEY,

}

url = API_URL.format(deployment_id=deployment_id)

# Make API request for predictions

predictions_response = requests.post(

url,

data=data,

headers=headers,

)

_raise_dataroboterror_for_status(predictions_response)

# Return raw response content

return predictions_response.content

def _raise_dataroboterror_for_status(response):

"""Raise DataRobotPredictionError if the request fails along with the response returned"""

try:

response.raise_for_status()

except requests.exceptions.HTTPError:

err_msg = '{code} Error: {msg}'.format(

code=response.status_code, msg=response.text)

raise DataRobotPredictionError(err_msg)

def datarobot_predict_file(filename, deployment_id, mimetype='text/csv', charset='utf-8'):

"""

Return an exit code on script completion or error. Codes > 0 are errors to the shell.

Also useful as a usage demonstration of

`make_datarobot_deployment_unstructured_predictions(data, deployment_id, mimetype, charset)`

"""

data = open(filename, 'rb').read()

data_size = sys.getsizeof(data)

if data_size >= MAX_PREDICTION_FILE_SIZE_BYTES:

print((

'Input file is too large: {} bytes. '

'Max allowed size is: {} bytes.'

).format(data_size, MAX_PREDICTION_FILE_SIZE_BYTES))

return 1

try:

predictions = make_datarobot_deployment_unstructured_predictions(data, deployment_id, mimetype, charset)

return predictions

except DataRobotPredictionError as exc:

pprint(exc)

return None

def datarobot_predict(input_dict, deployment_id, mimetype='application/json', charset='utf-8'):

"""

Return an exit code on script completion or error. Codes > 0 are errors to the shell.

Also useful as a usage demonstration of

`make_datarobot_deployment_unstructured_predictions(data, deployment_id, mimetype, charset)`

"""

data = json.dumps(input_dict).encode(charset)

data_size = sys.getsizeof(data)

if data_size >= MAX_PREDICTION_FILE_SIZE_BYTES:

print((

'Input file is too large: {} bytes. '

'Max allowed size is: {} bytes.'

).format(data_size, MAX_PREDICTION_FILE_SIZE_BYTES))

return 1

try:

predictions = make_datarobot_deployment_unstructured_predictions(data, deployment_id, mimetype, charset)

return json.loads(predictions)['answer']

except DataRobotPredictionError as exc:

pprint(exc)

return NoneNow that we have the auto-generated script to make our predictions, it’s time to send a test prediction request. Let’s create a JSON to ask a question to our question-answering BERT model. We will give it a long abstract for the information, and the question based on this abstract.

test_input = {"abstract": "Healthcare tasks (e.g., patient care via disease treatment) and biomedical research (e.g., scientific discovery of new therapies) require expert knowledge that is limited and expensive. Foundation models present clear opportunities in these domains due to the abundance of data across many modalities (e.g., images, text, molecules) to train foundation models, as well as the value of improved sample efficiency in adaptation due to the cost of expert time and knowledge. Further, foundation models may allow for improved interface design (§2.5: interaction) for both healthcare providers and patients to interact with AI systems, and their generative capabilities suggest potential for open-ended research problems like drug discovery. Simultaneously, they come with clear risks (e.g., exacerbating historical biases in medical datasets and trials). To responsibly unlock this potential requires engaging deeply with the sociotechnical matters of data sources and privacy as well as model interpretability and explainability, alongside effective regulation of the use of foundation models for both healthcare and biomedicine.", "question": "Where can we use foundation models?"}

datarobot_predict(test_input, deployment.id)And see that our model returns the answer in the model response, as we expected.

> both healthcare and biomedicine

Easily Monitor Machine Learning Models with DataRobot MLOps

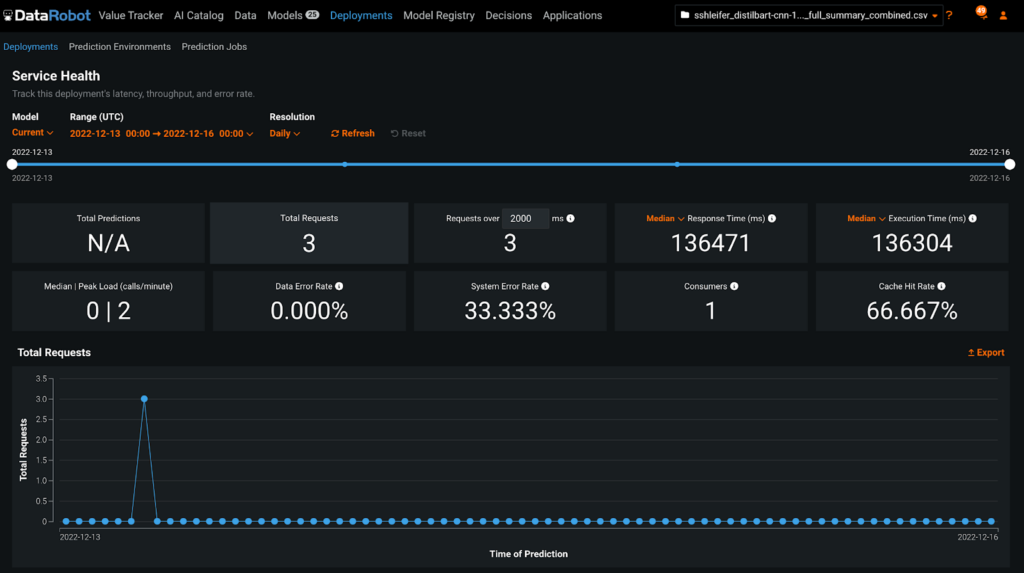

Now that we have our question-answering model up and running successfully, let’s observe our service health dashboard in DataRobot. As we send prediction requests to our model, the Service Health tab will reflect the newly received requests and let us keep an eye on our model’s metrics.

Later, if we want to update our deployment with a newer version of the pretrained model artifact or update our custom inference script, we use the API or the Custom Model Workshop UI again to make any necessary changes on our deployment flawlessly.

Start Using Large Language Models

By hosting a language model with DataRobot, organizations can take advantage of the power and flexibility of large language models without having to worry about the technical details of managing and deploying the model.

In this blog post, we showed how easy it is to host a large language model as a DataRobot custom model in only a few minutes by running an end-to-end script. You can find the end-to-end notebook in the DataRobot community repository, make a copy of it to edit for your needs, and get up to speed with your own model in production.

Related posts

See other posts in AI for PractitionersAI adoption in supply chains isn’t about chasing trends. Learn the strategic framework that’s helping leaders build real, lasting impact. Read the full post.

Learn how to build and scale agentic AI with NVIDIA and DataRobot. Streamline development, optimize workflows, and deploy AI faster with a production-ready AI stack.

What is an AI gateway? And why does your enterprise need one? Discover how it keeps Agentic AI scalable, secure, and cost-efficient. Read the full blog.

Related posts

See other posts in AI for PractitionersGet Started Today.