Here’s a painful truth: generative AI has taken off, but AI production processes haven’t kept up. In fact, they are increasingly being left behind, and that’s a huge problem for teams everywhere. There’s a desire to infuse large language models (LLMs) into a broad range of business initiatives, but teams are blocked from bringing them to production safely. Delivery leaders now face creating even more Frankenstein stacks across generative and predictive AI: separate tech and tooling, more data silos, more models to track, and more operational and monitoring headaches. That hurts productivity, and the lack of observability into model performance, confidence, and correctness creates risk.

It’s incredibly hard for already tapped-out machine learning and data science teams to scale. They are not only being overloaded with LLM demands but also risk being hamstrung by LLM decisions that create future maintenance headaches, all while juggling existing predictive models and production processes. It’s a recipe for production madness.

This is exactly why we’re announcing our expanded AI Production product, now with generative AI, to enable teams to use LLMs safely and confidently, unified with their production processes. Our promise is to give your team the tools to manage, deploy, and monitor all your generative and predictive models in a single production management solution that always stays aligned with your evolving AI/ML stack. With the 2023 Summer Launch, DataRobot unleashed an “all-in-one” generative AI and predictive AI platform, and now you can monitor and govern enterprise-scale generative AI deployments side-by-side with predictive AI. Let’s dive into the details!

AI Teams Must Address the LLM Confidence Problem

Unless you have been hiding under a very large rock or only consuming 2000s reality TV over the last year, you’ve heard about the rise and dominance of large language models. If you are reading this blog, chances are high that you are using them in your everyday life or your organization has incorporated them into your workflow. Unfortunately, LLMs tend to provide confident, plausible-sounding misinformation unless they are closely managed. That’s why deploying LLMs in a managed way is the best strategy for an organization to get real, tangible value from them. More specifically, making them safe and controlled in order to avoid legal or reputational risks is of paramount importance, which makes LLMOps critical for organizations seeking to confidently drive value from their generative AI projects. But in every organization, LLMs don’t exist in a vacuum; they’re just one type of model and part of a much larger AI and ML ecosystem.

It’s Time to Take Control of Monitoring All Your Models

Historically, organizations have struggled to monitor and manage their growing number of predictive ML models and ensure they are delivering the results the business needs. Now the explosion of generative AI models is set to compound the monitoring problem. As predictive and now generative models proliferate across the business, data science teams have never been less equipped to efficiently and effectively hunt down low-performing models that are delivering subpar business outcomes and poor or negative ROI.

Simply put, monitoring predictive and generative models in every corner of the organization is critical to reduce risk and to ensure they are delivering performance, not to mention to cut the manual effort that often comes with keeping tabs on increasing model sprawl.

Uniquely, LLMs introduce a brand-new problem: managing and mitigating hallucination risk. Essentially, the challenge is to manage the LLM confidence problem at scale. Organizations risk their productionized LLM being rude, providing misinformation, perpetuating bias, or including sensitive information in its response. All of that makes monitoring models’ behavior and performance paramount.

This is where DataRobot AI Production shines. Its extensive set of LLM monitoring, integration, and governance features allows users to quickly deploy their models with full observability and control. While using our full suite of model management tools, utilizing the model registry for automated model versioning along with our deployment pipelines, you can stop worrying about your LLM (or even your classic logistic regression model) going off the rails.

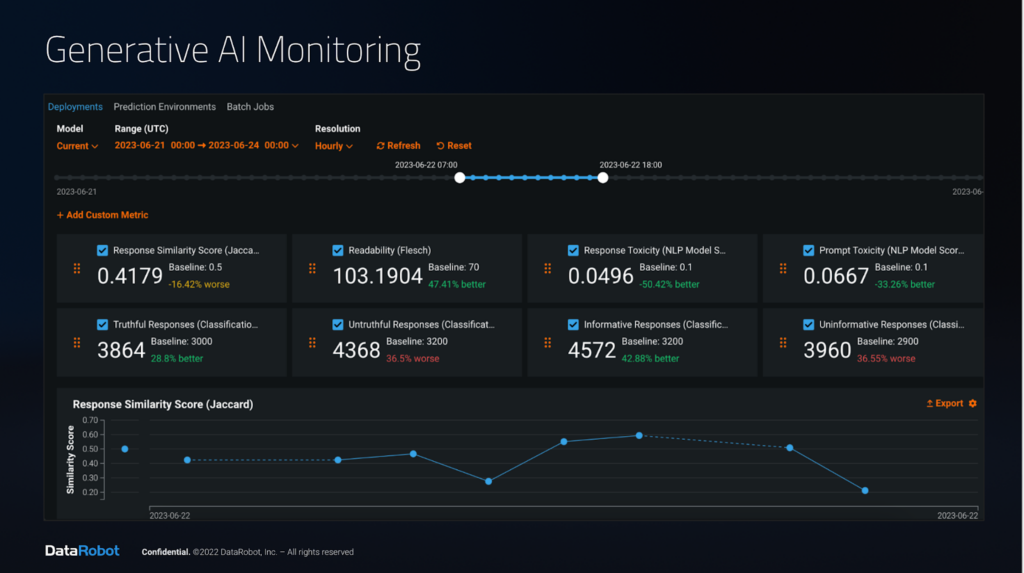

We’ve expanded the monitoring capabilities of DataRobot to provide insights into LLM behavior and help identify any deviations from expected outcomes. These capabilities also allow businesses to track model performance, adhere to SLAs, and comply with guidelines, ensuring ethical and guided use for all models, regardless of where they are deployed or who built them.

In fact, we offer robust monitoring support for all model types, from predictive to generative, including all LLMs, enabling organizations to track:

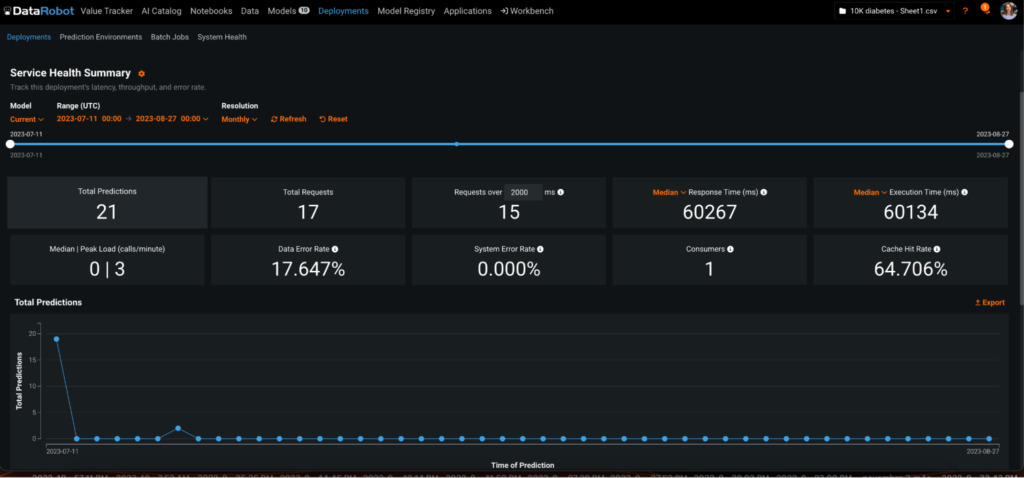

- Service Health: Important to track to ensure there aren’t any issues with your pipeline. Users can track total number of requests, completions and prompts, response time, execution time, median and peak load, data and system errors, number of consumers and cache hit rate.

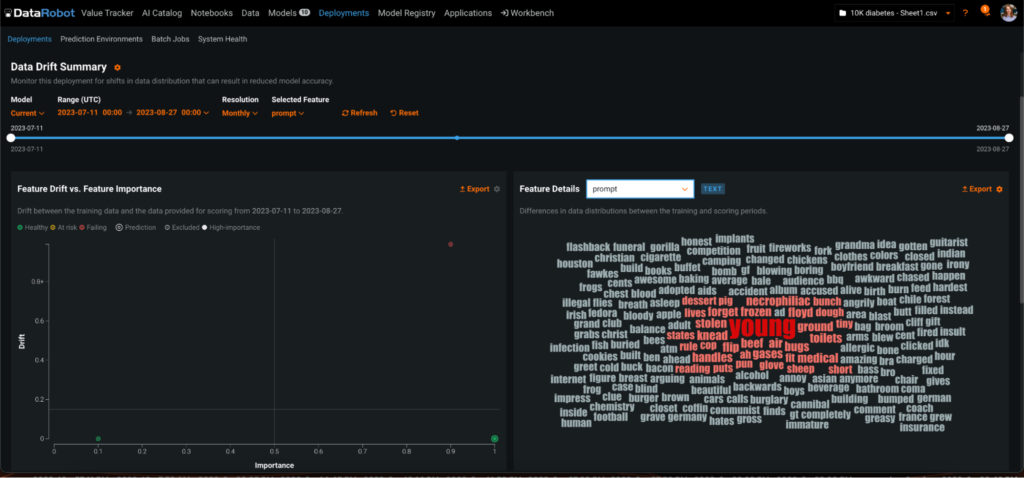

- Data Drift Tracking: Data changes over time, and the model you trained a few months ago may already be dropping in performance, which can be costly. Users can track data drift and performance over time and can even track completion, temperature, and other LLM-specific parameters.

- Custom metrics: Using the custom metrics framework, users can create their own metrics, tailored specifically to their custom-built model or LLM. Metrics such as toxicity monitoring, cost of LLM usage, and topic relevance can not only protect a business’s reputation but also ensure that LLMs are staying “on-topic”.
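To make the idea of a custom metric concrete, here is a minimal sketch in plain Python of two of the metrics named above: cost of LLM usage and a rough on-topic rate. It does not use the DataRobot custom metrics framework. The `Interaction` record, the function names, the per-token prices, and the keyword check are all assumptions made for illustration, and a real relevance metric would likely use a classifier or embeddings rather than keywords.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One logged prompt/completion pair (hypothetical record shape)."""
    prompt: str
    completion: str
    prompt_tokens: int
    completion_tokens: int


def usage_cost(records, price_per_1k_prompt, price_per_1k_completion):
    """Total spend for a batch of interactions, given per-1,000-token prices."""
    total = 0.0
    for r in records:
        total += r.prompt_tokens / 1000 * price_per_1k_prompt
        total += r.completion_tokens / 1000 * price_per_1k_completion
    return total


def on_topic_rate(records, topic_keywords):
    """Share of completions mentioning at least one topic keyword.

    A crude keyword check stands in for a real relevance model.
    """
    if not records:
        return 0.0
    keywords = [k.lower() for k in topic_keywords]
    hits = sum(
        1 for r in records
        if any(k in r.completion.lower() for k in keywords)
    )
    return hits / len(records)
```

Computed over a rolling window of logged interactions, values like these could be charted next to service health and drift so that a cost spike or a drop in on-topic responses stands out early.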

By capturing user interactions within GenAI apps and channeling them back into the model building phase, the potential for improved prompt engineering and fine-tuning is vast. This iterative process allows for the refinement of prompts based on real-world user activity, resulting in more effective communication between users and AI systems. Not only does it empower AI to respond better to user needs, but it also helps to make better LLMs.

Command and Control Over All Your Generative and Production Models

With the rush to embrace LLMs, data science teams face another risk. The LLM you choose now may not be the LLM you use in six months’ time. In two years’ time, it may be a whole different model that you want to run on a different cloud. Because of the sheer pace of LLM innovation that’s underway, the risk of accruing technical debt becomes relevant in the space of months, not years. And with the rush for teams to deploy generative AI, it’s never been easier for teams to spin up rogue models that expose the company to risk.

Organizations need a way to safely adopt LLMs, in addition to their existing models, and manage them, track them, and plug and play them. That way, teams are insulated from change.

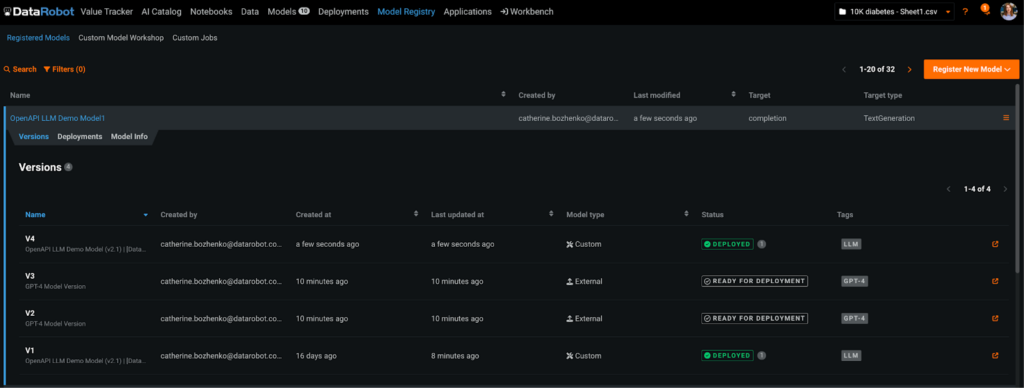

It’s why we’ve upgraded the DataRobot AI Production Model Registry, a fundamental component of AI and ML production, to provide a completely structured and managed approach to organizing and tracking both generative and predictive AI, along with your overall evolution of LLM adoption. The Model Registry allows users to connect to any LLM, whether popular models like GPT-3.5, GPT-4, LaMDA, LLaMa, Orca, or even custom-built models. It provides users with a central repository for all their models, no matter where they were built or deployed, enabling efficient model management, versioning, and deployment.

While all models evolve over time due to changing data and requirements, the versioning built into the Model Registry helps users to ensure traceability and control over these changes. They can confidently upgrade to newer versions and, if necessary, effortlessly revert to a previous deployment. This level of control is essential in ensuring that any models, but especially LLMs, perform optimally in production environments.
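The versioning and revert behavior described here can be pictured with a toy in-memory registry. This is only a sketch of the general pattern (append-only versions plus a deployment history), not the DataRobot Model Registry or its API. The `ModelRegistry` class and its method names are hypothetical.

```python
class ModelRegistry:
    """Toy in-memory registry: append-only versions plus a deployment history."""

    def __init__(self):
        self._versions = {}   # model name -> list of artifacts (index 0 is version 1)
        self._deployed = {}   # model name -> history of deployed version numbers

    def register(self, name, artifact):
        """Store a new version and return its version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(artifact)
        return len(versions)

    def deploy(self, name, version):
        """Point the deployment at an existing version."""
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise ValueError(f"unknown version {version} for {name!r}")
        self._deployed.setdefault(name, []).append(version)

    def rollback(self, name):
        """Revert to the previously deployed version and return its number."""
        history = self._deployed.get(name, [])
        if len(history) < 2:
            raise RuntimeError(f"no earlier deployment of {name!r} to revert to")
        history.pop()
        return history[-1]

    def deployed(self, name):
        """Return (version, artifact) for the current deployment."""
        version = self._deployed[name][-1]
        return version, self._versions[name][version - 1]
```

Because old versions are never overwritten, upgrading from one LLM to another and reverting are both just pointer changes, which is what keeps a rollback cheap and traceable.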

With DataRobot Model Registry, users gain full control over their classic predictive models and LLMs: assembling, testing, registering, and deploying these models become hassle-free, all from a single pane of glass.

Unlocking a Versatility and Flexibility Advantage

Adapting to change is crucial, because different LLMs are emerging all the time that are fit for different purposes, from languages to creative tasks.

You need versatility in your production processes to adapt to it and you need the flexibility to plug and play the right generative or predictive model for your use case rather than trying to force-fit one. So, in DataRobot AI Production, you can deploy your models remotely or in DataRobot, so your users get versatile options for predictive and generative tasks.

We’ve also taken it a step further with DataRobot Prediction APIs that give users the flexibility to integrate their custom-built models or preferred LLMs into their applications. For example, they make it simple to quickly add real-time text generation or content creation to your applications.

You can also leverage our Prediction APIs to allow users to run batch jobs with LLMs. For example, if you need to automatically generate large volumes of content, like articles or product descriptions, you can leverage DataRobot to handle the batch processing with the LLM.
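As a rough picture of what such a batch job involves, the sketch below splits a list of prompts into fixed-size batches and hands each batch to a generation function. It is not the DataRobot Prediction API. `run_batch_job`, `chunked`, and the `generate` callable are hypothetical names, and in practice `generate` would wrap whatever call reaches your deployed LLM.

```python
from typing import Callable, Iterable, Iterator, List


def chunked(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_batch_job(
    prompts: Iterable[str],
    generate: Callable[[List[str]], List[str]],
    batch_size: int = 32,
) -> List[str]:
    """Send prompts to `generate` in batches and collect outputs in input order."""
    results: List[str] = []
    for batch in chunked(prompts, batch_size):
        outputs = generate(batch)
        if len(outputs) != len(batch):
            raise RuntimeError("generate() must return one output per prompt")
        results.extend(outputs)
    return results
```

For product descriptions, each prompt might combine a template with one catalog row, and the returned list lines up index by index with the input rows.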

And because LLMs can even be deployed on edge devices that have limited internet connectivity, you can leverage DataRobot to facilitate generating content directly on those devices too.

DataRobot AI Production is Designed to Enable You to Scale Generative and Predictive AI Confidently, Efficiently, and Safely

DataRobot AI Production provides a new way for leaders to unify, manage, harmonize, monitor, and future-proof their generative and predictive AI initiatives so they can succeed with today’s needs and adapt to tomorrow’s changing landscape. It enables teams to deliver more models at scale, whether generative or predictive, and to monitor them all to ensure they’re delivering the best business outcomes, so you can grow your models in a way the business can sustain. Teams can now centralize their production processes across their entire range of AI initiatives and take control of all their models, both to strengthen governance and to reduce cloud vendor or LLM lock-in.

More productivity, more flexibility, more competitive advantage, better results, and less risk: it’s about making every AI initiative value-driven at the core.

To learn more, you can register for a demo today with one of our applied AI and product experts, so you can get a clear picture of what AI Production can look like at your organization. There’s never been a better time to start the conversation and tackle that AI hairball head-on.

