Investing in AI/ML is no longer optional; it is critical for organizations that want to remain competitive. However, machine learning usage is often unpredictable, which can make scaling a huge challenge. Many engineering teams don’t give scaling the attention it needs, mainly because they don’t have a clear plan for scaling up from the beginning. From our experience working with organizations across different industries, we have learned what the main challenges in this process are, and we combined the resources and expertise of DataRobot MLOps and Algorithmia to address them.

In this technical post, we’ll focus on some changes we’ve made that allow custom models to run as algorithms on Algorithmia while still feeding predictions, inputs, and other metrics back to the DataRobot MLOps platform: a true best of both worlds.

Data Science Expertise Meets Scalability

The DataRobot AI platform has an absolutely fantastic training pipeline with AutoML, as well as a rock-solid inference system. However, there are some reasons why your workflow might not fit a typical DataRobot deployment:

- You need deep learning acceleration (GPU enablement)

- You have custom logic that uses existing algorithms and acts as part of a larger workflow

- You already have your own training pipeline, or have automatic retraining pipelines in development

- You want to save costs by scaling to zero workers because you don’t need an always-on deployment, while still being able to scale to 100 workers if your project becomes popular

But have no fear! Since the integration of DataRobot and Algorithmia, you can have the best of both worlds, and the workflow in this post shows how.
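To make the scale-to-zero point concrete, here is a toy scaling policy. It is a hypothetical illustration, not Algorithmia’s actual autoscaler: the name `desired_workers` and its one-worker-per-N-queued-requests rule are assumptions, and the default cap of 100 simply mirrors the example in the list above.

```python
import math


def desired_workers(queued_requests: int,
                    requests_per_worker: int,
                    max_workers: int = 100,
                    min_workers: int = 0) -> int:
    """Return how many workers a toy autoscaler would run (hypothetical policy).

    One worker per `requests_per_worker` queued requests, clamped to
    [min_workers, max_workers]. With min_workers=0 an idle endpoint runs
    no workers at all, which is what "scale to zero" means.
    """
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    needed = math.ceil(queued_requests / requests_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    print(desired_workers(0, 10))      # -> 0 (idle endpoint scales to zero)
    print(desired_workers(35, 10))     # -> 4
    print(desired_workers(5_000, 10))  # -> 100 (capped at max_workers)
```

An always-on deployment corresponds to `min_workers >= 1`; the cost saving comes from allowing the lower bound to be zero.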

Autoscaling Deployments with Trust

Our team built a workflow that deploys a custom model (or algorithm) to the Algorithmia inference environment and automatically generates a DataRobot deployment connected to the Algorithmia inference model (algorithm).

When you call the Algorithmia API endpoint to make a prediction, you’re automatically feeding metrics back to your DataRobot MLOps deployment—allowing you to check the status of your endpoint and monitor for model drift and other failure modes.
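The monitoring loop can be pictured as an algorithm that scores each request and hands a record of it to a separate forwarding process. The sketch below assumes Algorithmia’s Python convention of an `apply(input)` entry point; `toy_model`, `SpoolReporter`, `read_spool`, and the spool path are hypothetical stand-ins. In the actual workflow the DataRobot MLOps library and the MLOps Agent do the reporting, and their API is not reproduced here.

```python
import json
import os
import tempfile
import time
from typing import Any, Dict, List, Sequence

# Hypothetical spool location; a fresh temp directory keeps repeated runs apart.
SPOOL_PATH = os.path.join(tempfile.mkdtemp(), "mlops_spool.jsonl")


def toy_model(pixels: Sequence[float]) -> int:
    """Hypothetical stand-in for the trained Fashion MNIST model (10 classes)."""
    return int(sum(pixels)) % 10


class SpoolReporter:
    """Hypothetical reporter that appends one JSON record per request to a file.

    It mimics the pattern of writing monitoring data to a spool that a separate
    agent forwards to DataRobot; it is not the DataRobot MLOps library API.
    """

    def __init__(self, spool_path: str) -> None:
        self.spool_path = spool_path

    def report(self, features: List[Sequence[float]], predictions: List[int],
               latency_ms: float) -> None:
        record = {
            "num_predictions": len(predictions),
            "latency_ms": latency_ms,
            "features": [list(f) for f in features],
            "predictions": predictions,
        }
        with open(self.spool_path, "a", encoding="utf-8") as spool:
            spool.write(json.dumps(record) + "\n")


def read_spool(path: str) -> List[Dict[str, Any]]:
    """What an agent process would consume: one record per scored request."""
    with open(path, encoding="utf-8") as spool:
        return [json.loads(line) for line in spool]


reporter = SpoolReporter(SPOOL_PATH)


def apply(input):  # assumed Algorithmia entry point, called with the request body
    """Score a batch of flattened images and record monitoring data."""
    images = input["images"]
    start = time.perf_counter()
    predictions = [toy_model(image) for image in images]
    latency_ms = (time.perf_counter() - start) * 1000.0
    reporter.report(images, predictions, latency_ms)
    return {"predictions": predictions}
```

In this sketch the request path only appends one line to a local file; shipping the records to a monitoring service happens out of band, so a slow network call never delays a prediction.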

The Demo: Autoscaling with MLOps

Here we will demonstrate an end-to-end unattended workflow that:

- trains a new model on the Fashion MNIST Dataset

- uploads it to an Algorithmia Data Collection

- creates a new algorithm on Algorithmia

- creates a DataRobot deployment

- links everything together via the MLOps Agent

The only thing you need to do is call the API endpoint with the curl command returned at the end of the notebook, and you’re ready to use this in production.
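For orientation, a command of that kind can be assembled as follows. This is a hedged sketch: it assumes the Algorithmia REST route `/v1/algo/<owner>/<algorithm>/<version>` and the `Authorization: Simple <key>` header, which you should check against your cluster’s documentation; the helper `algorithmia_curl`, the owner name, and the version string are hypothetical.

```python
import json
import shlex


def algorithmia_curl(api_endpoint: str, owner: str, algorithm: str,
                     version: str, api_key: str, payload: dict) -> str:
    """Build a shell-safe curl command that POSTs JSON to an algorithm.

    Assumes the /v1/algo/<owner>/<algorithm>/<version> route and the
    "Authorization: Simple <key>" header scheme.
    """
    url = f"{api_endpoint.rstrip('/')}/v1/algo/{owner}/{algorithm}/{version}"
    parts = [
        "curl", "-X", "POST",
        "-H", "Content-Type: application/json",
        "-H", f"Authorization: Simple {api_key}",
        "-d", json.dumps(payload),
        url,
    ]
    # shlex.quote protects spaces and JSON quotes when pasted into a shell
    return " ".join(shlex.quote(part) for part in parts)


if __name__ == "__main__":
    print(algorithmia_curl("https://api.algorithmia.com", "your_username",
                           "fashion_mnist_mlops", "0.1.0",
                           "ALGORITHMIA_API_TOKEN", {"images": [[0.0] * 4]}))
```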

If you want to skip ahead and go straight to the code, a link to the Jupyter notebook can be found here.

Operationalize ML Faster with MLOps Automation

As we know, one of the biggest challenges data scientists face after exploring and experimenting with a new model is taking it from the workbench into a production environment. This usually requires building automation for model retraining, drift detection, and compliance and reporting requirements. The DataRobot UI can generate many of these automatically; however, it is often easier to build your own dashboards specific to your use case.
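Drift detection is one of the pieces that is straightforward to build yourself. One common metric is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with the distribution the deployment sees in production. The sketch below is a generic illustration with hypothetical function names; DataRobot MLOps computes its own drift metrics, which may be defined differently.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each [edges[i], edges[i+1]) bin.

    The last bin also includes edges[-1]; values outside [edges[0], edges[-1]]
    are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue
        i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[i] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum((a - e) * ln(a / e)) over bins; eps guards against empty bins."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    training = bin_proportions([0.1, 0.3, 0.6, 0.9], edges)
    production = bin_proportions([0.8, 0.85, 0.9, 0.95], edges)
    print(round(population_stability_index(training, production), 3))
```

A frequently cited rule of thumb treats a PSI above 0.25 as a significant shift, but the threshold is worth tuning for each use case.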

This demo is completely unattended: there are no web UIs or buttons to click. You interact with everything through our Python clients, which wrap our API endpoints. If you want to take parts of this demo and incorporate them into your production code, you’re free to do so.

See Autoscaling with MLOps in Action

Here I will demonstrate an end-to-end unattended workflow. All you need is a machine running a Jupyter notebook server, an Algorithmia API key, and a DataRobot API key.

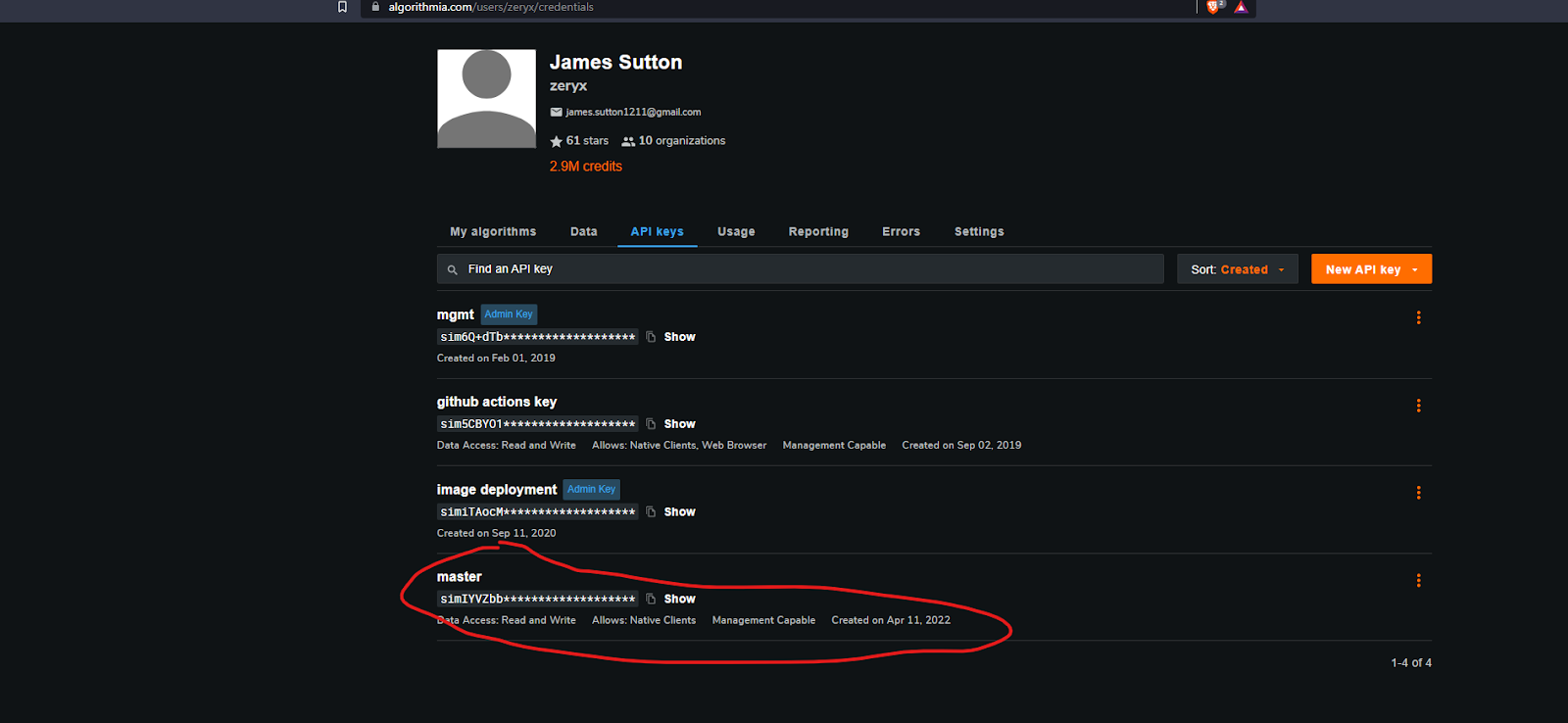

How to Get an Algorithmia API Key

If you’re already an Algorithmia / Algorithmia Enterprise customer, please select your personal workspace and then select API Keys.

You’ll need an API key that is management capable; admin keys are not required for this demo. The path may differ depending on your Algorithmia cluster environment. If you’re having difficulties, reach out to the DataRobot and Algorithmia team.

If you aren’t an existing Algorithmia / Algorithmia Enterprise customer and would like to see the Algorithmia offering, please reach out to your DataRobot account manager.

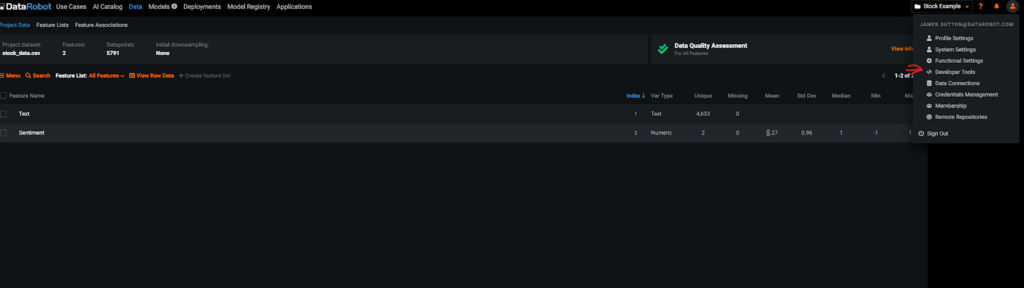

How to Get Your DataRobot API Token

To get your DataRobot API token, you first must ensure that MLOps is enabled for your account.

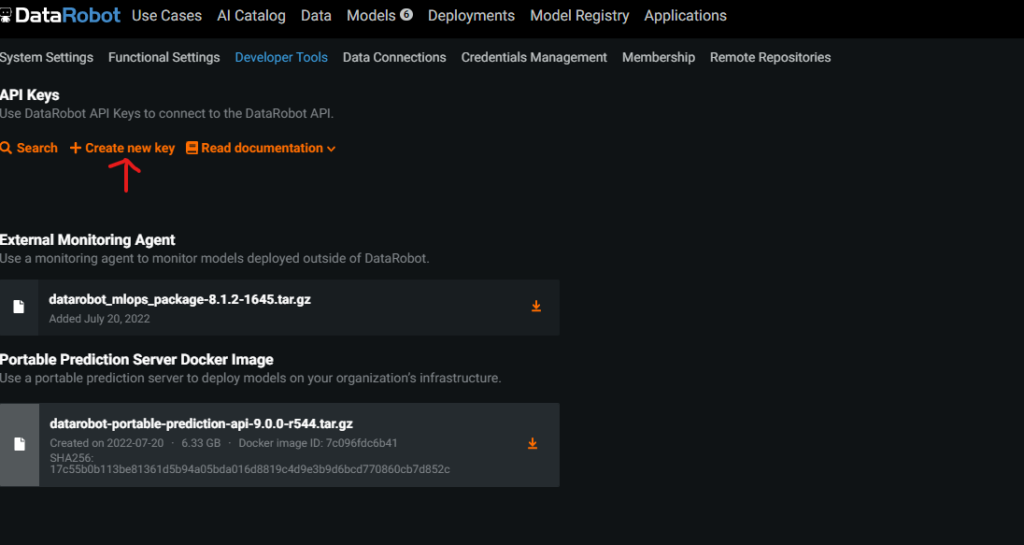

Next, under your profile, select Developer Tools to open the token window.

Then select Create new key. You should typically create a new API key for every production model so that you can isolate the keys and disable any key that leaks.

This process may be different depending on your version of DataRobot. If you have any questions, please reach out to your account manager.

Incorporating Your Tokens into the Notebook

You’ve got your tokens; now let’s add them to the notebook.

```python
from datarobot.mlops.connected.client import MLOpsClient
from uuid import uuid4

# Replace these placeholders with your own credentials
datarobot_api_token = "DATAROBOT_API_TOKEN"
algorithmia_api_key = "ALGORITHMIA_API_TOKEN"
algorithm_name = "fashion_mnist_mlops"

# Defaults for the serverless offerings; change them for a custom cluster
algorithmia_endpoint = "https://api.algorithmia.com"
datarobot_endpoint = "https://app.datarobot.com"
```

Insert your API tokens, along with your custom endpoints for DataRobot and Algorithmia. If your Algorithmia URL is https://www.enthalpy.click, enter https://api.enthalpy.click here so the notebook can connect. Do the same for your DataRobot endpoint.

If you are not sure, or if you are using the serverless versions of both offerings, leave these as the defaults and move on.
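Because the notebook runs unattended, you may prefer not to hard-code tokens at all. The sketch below reads the credentials from environment variables and derives the Algorithmia API endpoint from a web URL, following the www./api. convention described above. The environment variable names and helper names are hypothetical; the defaults are the same serverless endpoints used in the notebook.

```python
import os
from typing import Dict, Mapping
from urllib.parse import urlsplit, urlunsplit

DEFAULT_ALGORITHMIA_ENDPOINT = "https://api.algorithmia.com"
DEFAULT_DATAROBOT_ENDPOINT = "https://app.datarobot.com"


def algorithmia_api_endpoint(url: str) -> str:
    """Turn an Algorithmia web URL into its API URL (www.<host> -> api.<host>).

    URLs that already start with api. are kept, minus any path.
    Assumes the cluster follows the www./api. naming convention.
    """
    parts = urlsplit(url)
    host = parts.hostname
    if not host:
        raise ValueError(f"expected a full URL such as https://www.example.com, got {url!r}")
    if host.startswith("www."):
        host = host[len("www."):]
    if not host.startswith("api."):
        host = "api." + host
    netloc = host if parts.port is None else f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, "", "", ""))


def load_config(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read credentials from the environment instead of hard-coding them.

    Variable names are hypothetical. A missing token raises KeyError,
    so an unattended run fails fast instead of using a placeholder.
    """
    return {
        "datarobot_api_token": env["DATAROBOT_API_TOKEN"],
        "algorithmia_api_key": env["ALGORITHMIA_API_KEY"],
        "algorithmia_endpoint": algorithmia_api_endpoint(
            env.get("ALGORITHMIA_ENDPOINT", DEFAULT_ALGORITHMIA_ENDPOINT)),
        "datarobot_endpoint": env.get("DATAROBOT_ENDPOINT", DEFAULT_DATAROBOT_ENDPOINT),
    }
```

Keeping tokens out of the notebook also makes the per-model key rotation described earlier easier: revoking a leaked key only requires changing an environment variable.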

Running the Notebook

Now that your credentials have been added, you can automatically train a model, create a DataRobot deployment, create an algorithm on Algorithmia, and connect the two.

Fashion MNIST Automatic Deployment Notebook

Maximize Efficiency and Scale AI Operations

At DataRobot, we’re always trying to build the best development experience and the best productionization platform anywhere. This integration was a big step toward helping organizations maximize efficiency and scale their AI operations. If you want to learn more about DataRobot MLOps, or have suggestions for feature enhancements that would improve your workflow, reach out to us.
