Top Five Overhyped Predictions for AI in 2022

This is the time of year when it is customary for psychics, economists, and marketers to publish their predictions for the coming year. You will read predictions about world leaders, celebrities, and AI technology.

Many of these predictions for 2022 will be wrong. Not only is the world complex and the future uncertain (no one in 2019 predicted COVID-19), but humans are also subject to many cognitive biases, including a tendency to overestimate what can be achieved in the short run and to underestimate what can be achieved in the long run. Niels Bohr, the Nobel laureate in physics best known for his model of the atom, once said, “Prediction is very difficult, especially if it’s about the future!”

Here is a list of the top five AI forecasts for 2022 that would benefit from a healthy dose of critical thinking and skepticism.

Quantum AI

AI systems are notorious for their heavy computing demands, especially during training. While traditional computers store information as bits that are either zero or one, quantum computers use quantum bits, or qubits, which store information in a quantum state: a superposition, or weighted mix, of zero and one. Quantum computing has the potential to search through vast numbers of possibilities in nearly real time and arrive at a probable solution.
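To make the “mix of zero and one” a little more concrete, here is a minimal classical sketch in Python. The function names are our own hypothetical choices. It models a single qubit as two complex amplitudes whose squared magnitudes give the probabilities of reading 0 or 1, and it counts how many amplitudes an n-qubit register needs. It runs on an ordinary computer and demonstrates no quantum speedup; it only illustrates how quickly the state space grows.

```python
import math

# Illustrative classical model of a qubit: two complex amplitudes,
# alpha for the |0> state and beta for the |1> state.
def measurement_probabilities(alpha: complex, beta: complex) -> tuple:
    """Return the probabilities of reading 0 and 1 when the qubit is measured."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized")
    return abs(alpha) ** 2, abs(beta) ** 2

def amplitudes_needed(num_qubits: int) -> int:
    """A general state of n qubits is described by 2**n complex amplitudes."""
    return 2 ** num_qubits

# An equal superposition with a complex phase on the |1> component.
p0, p1 = measurement_probabilities(1 / math.sqrt(2), 1j / math.sqrt(2))
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # P(0) = 0.50, P(1) = 0.50

for n in (1, 10, 100):
    print(n, "qubits ->", amplitudes_needed(n), "amplitudes")
```

The doubling with every added qubit is part of the appeal, but a measurement still returns a single outcome, which is one reason turning qubits into practical AI workloads remains hard.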

It is no surprise, then, that researchers are working on quantum computing technology. On October 18, 2021, Sundar Pichai, chief executive of Google, told the Wall Street Journal that Google “is investing in quantum computing and artificial intelligence to stay ahead of Chinese internet companies that compete with it.” Just days earlier, D-Wave Systems, a quantum computing specialist, announced its product roadmap, including upgrades to its commercially available quantum computing services.

Given this potential and these marketing announcements, it is unsurprising that one industry commentator included quantum AI in their predictions for 2022, describing how “quantum computers will assist a variety of businesses in identifying inaccessible challenges and predicting viable remedies.”

But the current generation of quantum computers lacks the scale and reliability needed to support quantum AI use cases. More realistically, Google itself aims to have a commercial-grade quantum computer ready only by 2029, noting that “it will need to build a 1-million-qubit machine capable of performing reliable calculations without errors. Its current systems have less than 100 qubits.”

Self-Driving Cars

For several years now, we’ve been sold the vision of self-driving cars. In 2015, the insurance industry was discussing the urgent competitive pressures to adapt to “the dawn of driverless cars.” In January 2016, Elon Musk tweeted that “In ~2 years, summon should work anywhere connected by land & not blocked by borders, eg you’re in LA and the car is in NY.” Several months later, Elon Musk was even more optimistic, tweeting that “Tesla expects to demonstrate self-driven cross-country trip next year.”

Yet here we are in 2021, without driverless cars, and the cars we do have cannot recognize a “Road Closed” sign. Years of disappointment have not stemmed the tide of enthusiastic predictions.

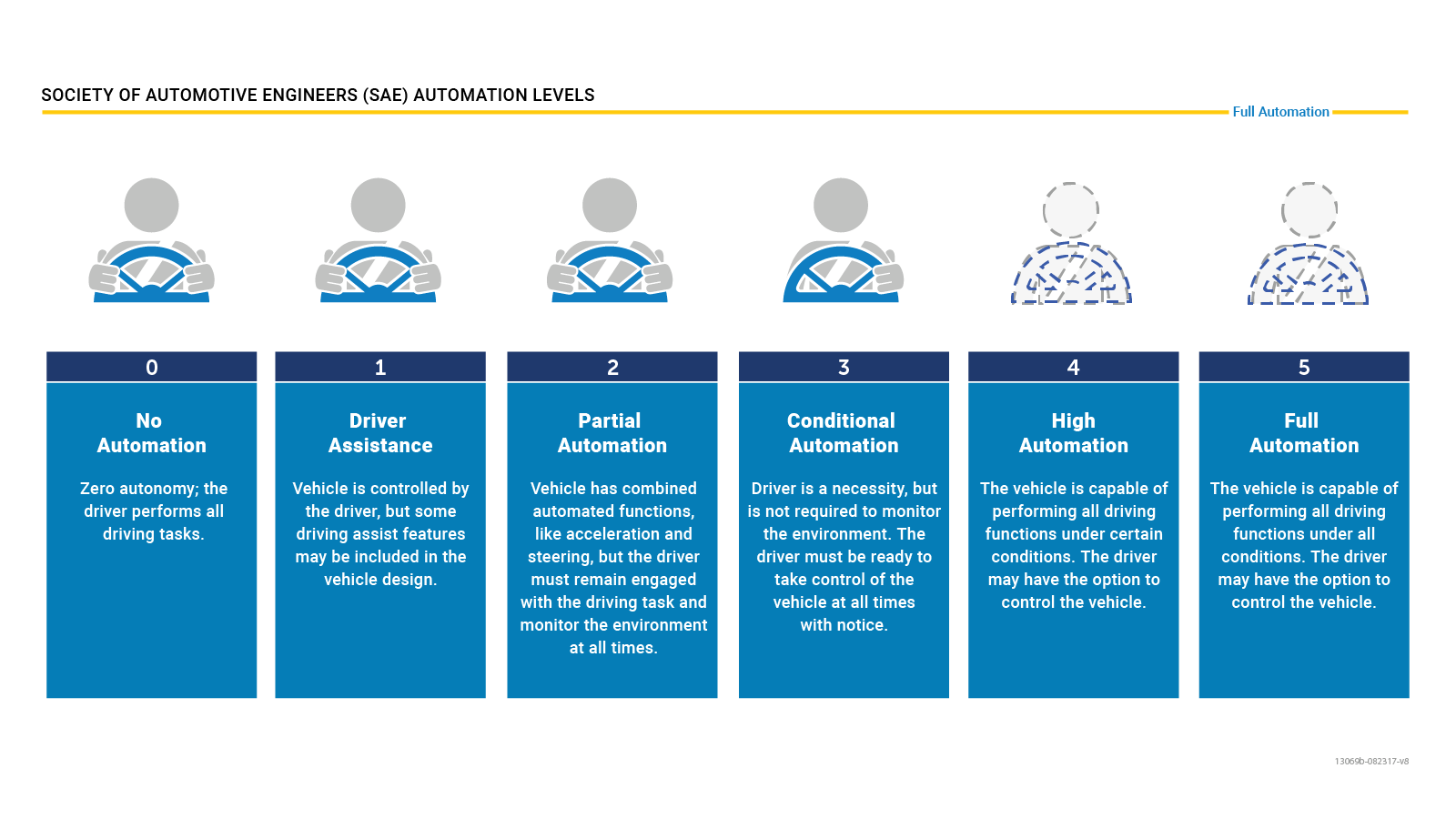

A bit of background before we continue. The U.S. National Highway Traffic Safety Administration (NHTSA) classifies vehicle automation on a scale running from Level 0 (no automation) to Level 5 (full automation).

We have had Level 1 driver assistance since late last century (e.g., cruise control and anti-lock braking systems). Fast forward a couple of decades, and currently available production vehicles offer features such as autonomous emergency braking, which can sense when another vehicle or pedestrian is too close to the front of your vehicle relative to your speed, and have reached Level 2, partial automation, in which the vehicle can steer and accelerate or brake at the same time while the driver supervises. Current technology is far from Level 5 automation, “capable of performing all driving functions under all conditions.” In mid-2021, a German court weighed the evidence and banned Tesla from including “full potential for autonomous driving” and “Autopilot inclusive” in its advertising materials. It is implausible that we could suddenly skip Levels 3 and 4 and achieve Level 5 automation in 2022.

There are two huge problems holding us back from the age of Level 5 self-driving cars.

Firstly, driving is a complex problem to solve. It takes humans several years of learning and practice to become safe and proficient, and driving mistakes are expensive and life threatening. That is why insurers charge such high premiums for young and inexperienced drivers. Current AI technology is based on pattern recognition and cannot reliably make decisions in situations it did not encounter during training. There are many edge cases to solve, such as detours, trucks obscuring a traffic light, emergency vehicles parked by the side of the road, dead-end streets, and the unpredictable behavior of other drivers and pedestrians. Regulators will not authorize self-driving vehicles until they are convinced that those vehicles have been rigorously trained and tested across a comprehensive range of edge cases in the real world.

Secondly, our human cognitive biases cause us to distrust automated systems. For example, the IKEA effect is a cognitive bias in which humans overvalue products or services that they have personally created, even in the presence of objective evidence to the contrary. People feel safer when they can directly control the outcomes of processes that affect them. Recently published research on algorithm aversion, the reluctance of people to use superior algorithms that they know to be imperfect, shows that people will choose to use an imperfect algorithm substantially more often when they can modify its behavior. Similarly, the Dunning-Kruger effect is a cognitive bias that causes people with limited competence to overestimate their knowledge and capabilities. Multiple studies have shown that the vast majority of drivers believe they are better than average. This bias also applies when human drivers compare themselves to a self-driving vehicle. Self-driving vehicles will not be widespread until we overcome these biases.

Recruitment Robots

They’re calling it the “Great Resignation.” In 2021, record numbers of Americans voluntarily resigned from their jobs, and this phenomenon is starting to be replicated in other countries. More resignations mean more vacant roles to fill.

An organization’s personnel are key to its success, but the right people can be hard to find. A 2020 survey of recruiters and HR professionals reported that “33% have received 100 or more applications per average requisition.”

Recruiters dealing with high numbers of applicants are forced to process them extremely quickly (the notorious “six seconds” per résumé rule) rather than spending time going deeper with the best candidates and crafting a compelling value proposition for them. Automated screening promises to handle that first pass so recruiters can focus on the strongest candidates, and it can also give applicants results more quickly and dramatically speed up the hiring process.

This value proposition has led one industry commentator to predict that “recruitment robots” will become popular replacements for human interviewers in 2022. Using HireVue, a well-known video interview and assessment vendor, as an example, the commentator described how recruitment robots can ask questions and capture video of applicants to “analyze the movements of applicants, manner of speech, facial expressions, posture.”

While this 2022 prediction seems convincing, a fact check shows that in January 2021, citing its “ongoing commitment to fairness,” HireVue announced its decision to remove visual analysis from its assessment models. In its announcement, HireVue explained that internal research showed that “visual analysis has far less correlation to job performance than other elements.”

With research showing that facial expressions are not universal, academics and ethicists have been increasingly vocal in their criticism of the pseudoscientific use of AI to infer emotions or suitability for employment from facial expressions. AI emotion researchers have called for government regulation, saying “overblown claims give their work a bad name,” and some regulators have already taken action. For example, Illinois’s Artificial Intelligence Video Interview Act, which regulates the use of AI in video job interviews, took effect in January 2020.

While we will see increased use of AI for procedural use cases in hiring, active human involvement remains vital for ensuring fairness and trust in the process.

Chipped Employees

The International Monetary Fund has released supply chain data showing that “disruptions have become a major challenge for the global economy since the start of the pandemic.” It blames COVID-19 lockdowns, labor shortages, robust demand for tradable goods, and capacity constraints.

While delays may be unavoidable, retailers and manufacturers can limit their impact by anticipating and mitigating potential disruptions. GPS, barcodes, and radio frequency identification (RFID) tags provide real-time tracking. Using AI and IoT, supply chain managers can proactively anticipate irregularities by predicting whether both inbound and outbound shipments will arrive on time.

At least one industry commentator has extrapolated this trend to people, predicting that 2022 will be the year of “technology for chipping employees,” and explaining that the “chip will help track their movements using GPS.”

If the idea of employers putting microchips inside you and tracking your movements is unsettling, then you are not alone. Earlier this year, Amazon changed its policy on its controversial employee monitoring system after labor unions targeted the firm, claiming that the tracking and the obsession with speed in warehouses led to injuries. People are expressing concern about technological intrusions on their civil rights and civil liberties. Regulators around the world have responded by proposing and introducing restrictions on the use of facial recognition. And people dislike the idea of microchips being placed inside their bodies so much that fact-checking organizations have had to debunk a viral conspiracy theory video claiming that vaccines contain microchips that allow tracking.

This should not need to be said, but microchipping of employees will not happen in 2022.

Federated Learning

We live in an increasingly data-driven world. According to one estimate, each person created an average of 1.7 MB of data per second in 2020. With so much data available, data breaches are becoming more common, and they can be very expensive. Some estimates place the average cost of a data breach in the United States in 2021 at more than nine million dollars, and individual incidents can cost far more. For example, in 2019 the Federal Trade Commission fined Facebook five billion dollars for violating user privacy.

In this environment of hackers, data breaches, and more stringent regulatory restrictions on data sharing, enterprises are seeking privacy-protecting approaches that let AI analyze and monetize data. Federated learning is “a machine learning technique that trains an algorithm across multiple decentralized edge devices or servers holding local data samples, without exchanging them.” Its contribution to privacy protection is that raw data is never moved or exchanged and never leaves the organization or device that holds it; only model updates are shared. For example, an AI model could be trained on data held on millions of app users’ mobile phones without the AI administrator having access to the specific data on your phone. Some commentators are bullish about the potential for widespread use of federated learning, explaining why they believe it is “getting so popular” and examining how it may “save the world.”
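The mechanics can be sketched with federated averaging, one common way to implement federated learning. This is a minimal Python sketch under simplifying assumptions: a one-variable linear model, three simulated clients in a single process, no networking or secure aggregation, and hypothetical helper names and data. Each client trains on its own samples, and the server only ever receives model parameters.

```python
import random

# Minimal sketch of federated averaging for a 1-D linear model y = w * x + b.
# Each simulated client keeps its (x, y) samples to itself; only the model
# parameters (w, b) travel between the server and the clients.

def local_update(params, data, lr=0.1, epochs=10):
    """Run plain gradient descent on one client's private data (mean squared error)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b  # parameters only; the raw samples stay on the client

def federated_round(global_params, clients):
    """One round: every client trains locally, then the server averages the
    returned parameters, weighted by each client's sample count."""
    total = sum(len(data) for data in clients)
    new_w = new_b = 0.0
    for data in clients:
        w, b = local_update(global_params, data)
        new_w += w * len(data) / total
        new_b += b * len(data) / total
    return new_w, new_b

def make_client(n, rng):
    """Hypothetical private dataset drawn from y = 3x + 1 plus a little noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

rng = random.Random(0)
clients = [make_client(n, rng) for n in (50, 80, 120)]

params = (0.0, 0.0)
for _ in range(20):
    params = federated_round(params, clients)

# The shared model should end up close to w = 3, b = 1.
print(f"w = {params[0]:.2f}, b = {params[1]:.2f}")
```

Weighting by sample count lets clients with more data pull the shared model further. Real deployments face concerns this sketch ignores, such as unreliable devices and protecting the updates themselves.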

While at first glance federated learning appears to be an attractive and ethical way to protect privacy, it raises practical problems, chiefly weaker AI governance and new ethical issues. Under a federated learning architecture, the central AI administrator does not control the hardware and cannot access the data. Edge devices may be unsuitable for the task. Some edge devices may even be compromised by malicious entities, feeding deliberately misleading and dangerous data into your AI system. With full privacy, there is no audit trail, no data quality exploration, no explainability, and no accountability. There is no way of knowing what the AI system has learned or whether it will behave in a safe and ethical manner.
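The poisoning risk is easy to illustrate. In this hypothetical Python sketch, the server averages parameter vectors (w, b) reported by four clients; three are honest and one is compromised. Because the server sees only numbers, not the data behind them, nothing in the plain average flags the bad update. All values are invented for illustration.

```python
from statistics import fmean

# Hypothetical (w, b) parameter vectors reported by clients after local training.
# The server sees only these numbers, never the data that produced them.
honest_updates = [(2.98, 1.01), (3.02, 0.99), (3.01, 1.00)]
poisoned_update = (-40.0, 25.0)  # crafted by a compromised edge device

def average(updates):
    """Plain, unweighted federated averaging, one coordinate at a time."""
    return tuple(fmean(values) for values in zip(*updates))

clean = average(honest_updates)
poisoned = average(honest_updates + [poisoned_update])
print("honest clients only:", clean)
print("with one compromised client:", poisoned)
```

Here a single poisoned update drags the averaged weight from roughly 3.0 to roughly -7.7, and the server has no raw data to check it against.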

Once the costs and benefits are weighed, federated learning’s potential is limited to use cases where privacy concerns override other ethical and governance requirements.

AI usage will continue to grow as a mainstream business tool. But be skeptical of hype. As discussed above, these unlikely predictions are often incompatible with society’s growing demands for rigorous AI governance and more ethical AI systems and use cases. If there is one safe prediction for AI in 2022, it is a continuation of that trend.
