This is the third in a series of blog posts inspired by a presentation Shachar Harussi and I gave at DataVersity 16 in Chicago. We discussed the lessons the Paxata development team learned while building the distributed Apache Spark architecture for the Paxata platform. Here is a peek into what we talked about – refer to this post and this post to get some context.

Spark is (almost) enough

We saw that Apache Spark wasn’t quite enough for the scenarios we are designing for — why? Spark is used for a variety of data processing scenarios. For example, data scientists use Spark to build and verify models. Data engineers use Spark to build data pipelines. In both of these scenarios, Spark achieves performance gains by locally caching the results of operations that repeat over and over again, then discards these caches once it is done with the computation.

Why doesn’t this caching strategy work as well for data preparation? Focus on the term interactive. Analysts preparing data are often looking at these datasets for the first time – theirs are probably the first eyes on the data. Figuring out what needs to be done takes experimentation, full of undo, redo, try, delete, try again, and interruptions.

Data preparation does not follow a strict order or a straight line. It looks more like Billy’s meandering route to the mailbox in this Family Circus cartoon. As we said in the session:

Interactive is messy!

What does “interactive” mean for data preparation? Why is it messy? Here are three reasons Spark isn’t enough for interactive data preparation (or why the realities of data preparation cannot take advantage of the native local caching strategy of Spark).

- Data preparation is not a set order of steps. When business analysts start preparing their data, they are exploring data they have never seen before. The process is messy: they have to experiment, try something, undo an action, try another action, and continue. There is no predefined order or repeated pattern from project to project. Data that is discarded might become useful for a future computation. As one of my colleagues puts it: “Discovery is incremental and the list of transformation can be long. When things go wrong, you may need a lot more than just the final output.”

- Business analysts are busy! Business analysts get interrupted, and have to return to their work after minutes, hours, or days. The local cache strategy in Spark doesn’t “remember” these interrupted computations.

- Business analysts work with other people. Teams of people work on preparing datasets together, then share data and processes with other business units. Furthermore, in many organizations, large datasets get reused across different business cases. The local cache strategy in Spark is not shared across users.

Paxata’s Caching For Spark

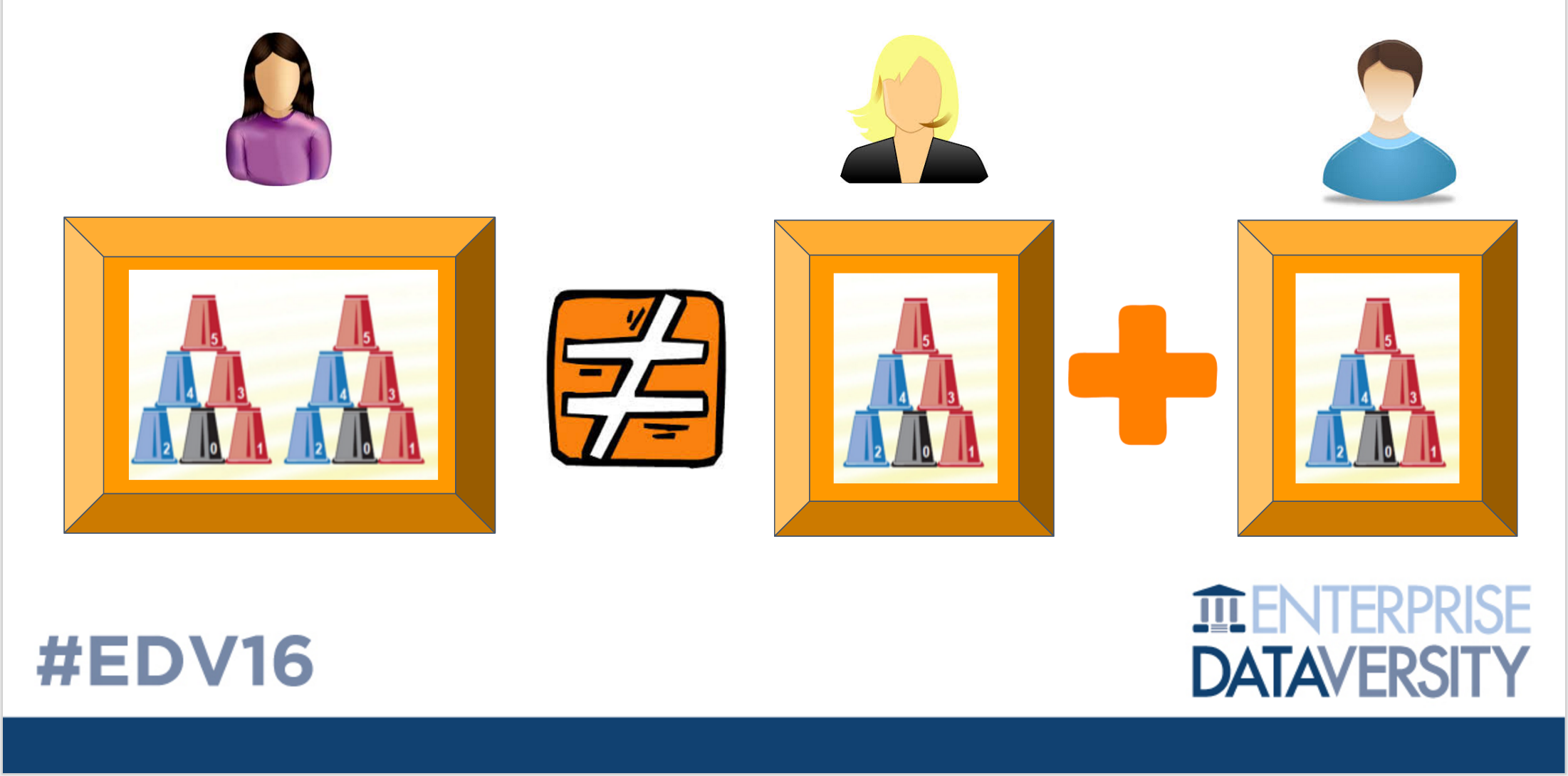

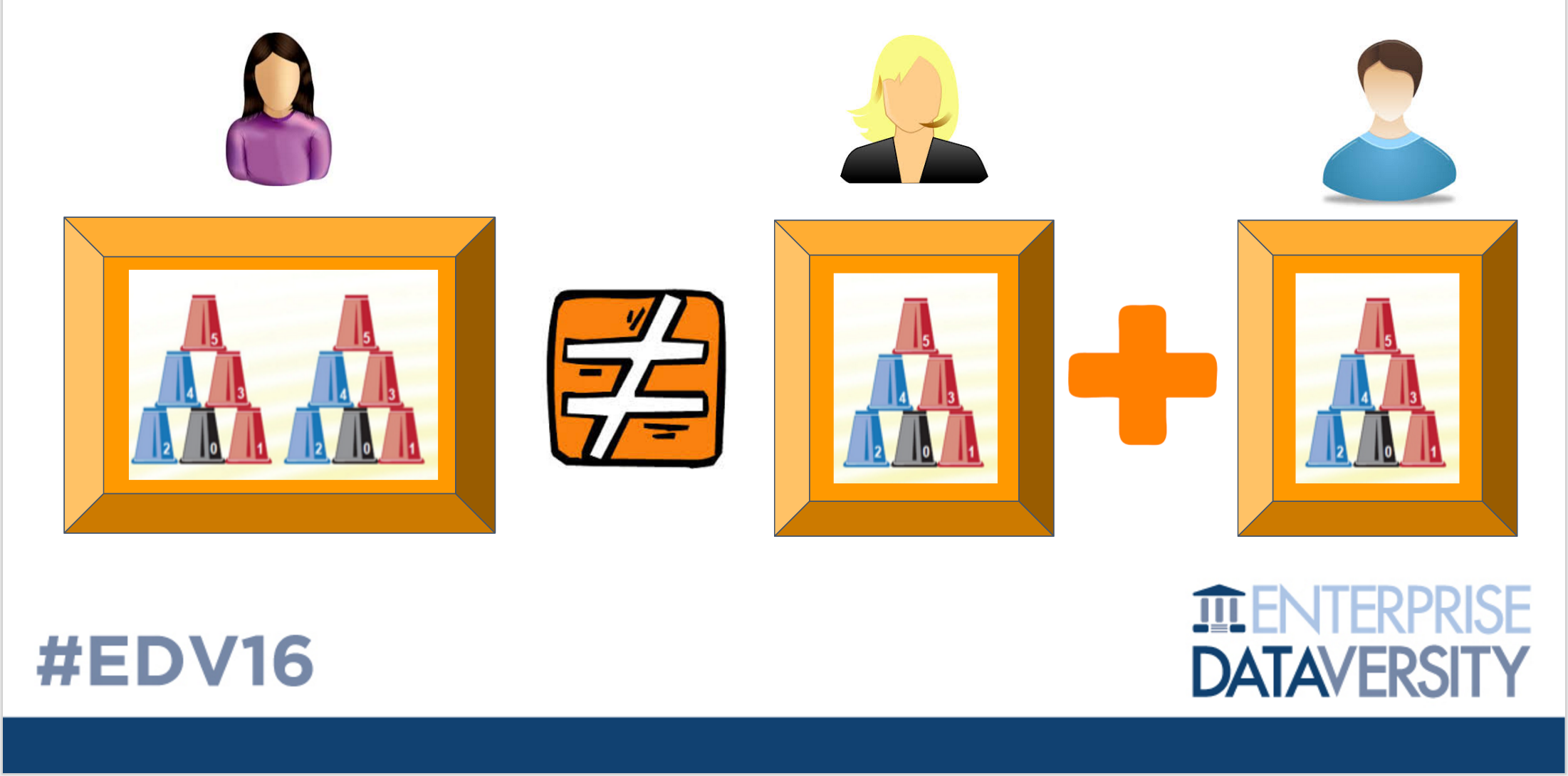

All of these constraints chipped away at the performance of Spark. Spark’s local caching strategy is optimized for situations like one person working on a project in one session, following the same steps over and over again. In the image above, the data scientist on the left repeats the same pattern of operations, taking advantage of Spark’s local caching. The situation on the right is different: two separate people run through identical operations, but in separate sessions, so neither benefits from the other’s cache. This is common in data preparation scenarios, where multiple people from different teams collaborate on data prep over many sessions, many days, and many iterations.

To optimize for more realistic data preparation behavior, the Paxata team developed our own proprietary caching strategies that address the needs of business analysts for collaboration and longer lasting projects.
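To make the contrast concrete, here is a minimal sketch of one way a cache could survive undo, interruptions, and hand-offs between people. It is not Paxata’s proprietary implementation and it does not use Spark at all. Everything in it is hypothetical: the `PrefixCache` class, the `step_key` helper, and the in-memory dictionary that stands in for a shared, durable store. The idea is to key every intermediate result by the dataset plus the ordered list of steps that produced it, so any session that asks for the same prefix of steps can reuse it.

```python
import hashlib
import json


def step_key(dataset_id, steps):
    """Fingerprint a dataset together with an ordered prefix of step names."""
    payload = json.dumps([dataset_id, list(steps)])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class PrefixCache:
    """Keeps the result of every step prefix so later requests can reuse it."""

    def __init__(self):
        self.store = {}    # assumption: stands in for a shared, durable store
        self.computed = 0  # number of steps actually executed so far

    def run(self, dataset_id, rows, steps, registry):
        # Look for the longest prefix of the requested steps already cached.
        start, result = 0, rows
        for n in range(len(steps), 0, -1):
            key = step_key(dataset_id, steps[:n])
            if key in self.store:
                start, result = n, self.store[key]
                break
        # Execute only the missing steps, caching each intermediate result.
        for n in range(start, len(steps)):
            result = [registry[steps[n]](row) for row in result]
            self.computed += 1
            self.store[step_key(dataset_id, steps[: n + 1])] = result
        return result


# Hypothetical transformations and data, for illustration only.
registry = {"strip": str.strip, "upper": str.upper, "title": str.title}
rows = ["  alice ", " bob"]

shared = PrefixCache()
shared.run("customers", rows, ["strip", "upper"], registry)  # first analyst: two steps run
shared.run("customers", rows, ["strip"], registry)           # undo: nothing is recomputed
shared.run("customers", rows, ["strip", "title"], registry)  # second analyst: one new step runs
```

Across the three calls only three steps are executed. The undo and the second analyst’s work both start from the cached result of `strip`. A real system would also have to handle eviction, storage limits, and changes to the source data, which this sketch ignores.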

Have you worked with Apache Spark in an interactive context? I would love to hear about your experiences – tweet at me @superlilia to tell me about optimizations you have created with Spark.

About the author

DataRobot
