If you’ve applied for a job in the last five years, you may have wondered whether a computer algorithm played a role in your ultimate success or failure. We are in the midst of a steep rise in machine learning applied to HR, with Gartner reporting that 17% of HR teams are already using machine learning and that another 30% will do so in the coming year. Recruiting use cases are often on the short list because the data is readily available, but that doesn’t mean they are easy to design or execute. This blog post is the first in a series that tackles critical design considerations for HR recruitment algorithms.

Today’s design decision is whether the final machine learning algorithm will be used primarily to advance the top-scoring applicants (the screen-in approach) or to reject the lowest-scoring applicants (the screen-out approach). This is an important early choice when viewed from the perspective of trust and ethics in AI applications.

Having worked with HR teams at many different organizations, I know that many have never even considered a screen-out approach. This is unfortunate, since the screen-out approach addresses some of the common concerns about AI in hiring models.

Screen-In versus Screen-Out Philosophy

To help make a point, allow me to use more extreme language and call the screen-in approach the “keep the best of the best” approach and the screen-out approach the “remove the worst of the worst” approach. This wording helps draw attention to the applicants in the middle.

The key difference between the approaches is the impact on the applicants in the middle. In the context of a job application, the middle group is the group that has no clear disqualifiers, but also doesn’t match the attributes of the historically successful applicants.

In the context of corporate diversity and inclusion efforts, that group is valuable.

Machine learning algorithms in recruiting that take a screen-in approach often miss out on qualified candidates in the middle. In the worst-case scenario, the initiatives of the VP of Recruiting and the VP of Diversity, Equity & Inclusion end up working against each other, and the Chief Human Resources Officer (to whom they both report) is completely unaware of the conflict. I’ve seen this happen more than once.

It seems contrarian to train a model to identify the job applicants least likely to succeed, but for many organizations it may be the better way to approach process automation in recruiting. By keeping the group in the middle (i.e., those who don’t represent previous hires but who also don’t have obvious disqualifying characteristics), organizations can help ensure that historical hiring disparities don’t keep good applicants out of the pipeline.

How to Do It

The good news is that there are easy design fixes that don’t require collecting new data. As a DataRobot user, you will see the benefits of automation by being able to test and compare numerous design options with minimal effort.

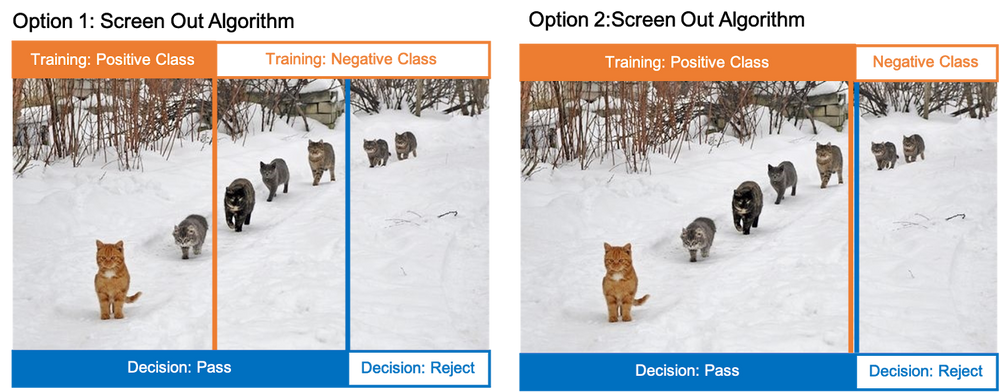

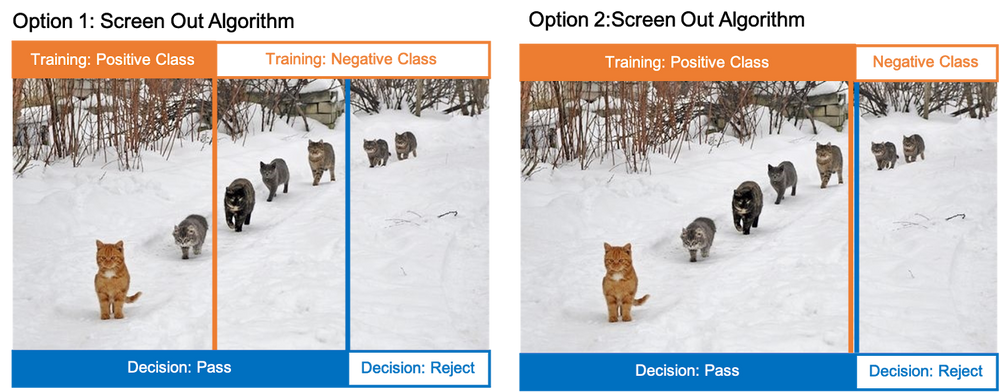

- Option 1: Train the model to learn the characteristics of the best of the best, but set the prediction threshold such that 60-80% of applicants advance to the next stage. This assigns each applicant a score based on their resemblance to the historical best, and removes the applicants who are very dissimilar while still retaining the middle.

- Option 2: Train the model to learn the characteristics of the worst of the worst, and set the decision boundary (called “prediction threshold” in DataRobot) so that the same share of applicants (60-80%) advances to the next stage. This has the net effect of focusing the entire system on removing the least suitable applicants without trying to separate the best from the middle at the screening stage.
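To make the two options concrete, here is a minimal sketch in plain Python. It is not DataRobot code: the scores are made-up stand-ins for model predictions, the function names `threshold_for_advance_rate` and `screen` are hypothetical, and the 70% advance rate is simply one value picked from the 60-80% range above.

```python
def threshold_for_advance_rate(scores, advance_rate, higher_is_better=True):
    """Return the cutoff score at which roughly `advance_rate` of applicants advance."""
    if not scores:
        raise ValueError("scores must not be empty")
    if not 0 < advance_rate <= 1:
        raise ValueError("advance_rate must be in (0, 1]")
    # Rank from most to least desirable under the chosen scoring direction.
    ranked = sorted(scores, reverse=higher_is_better)
    n_advance = max(1, round(advance_rate * len(ranked)))
    return ranked[n_advance - 1]


def screen(scores, threshold, higher_is_better=True):
    """Return True for each applicant who advances to the next stage."""
    if higher_is_better:
        return [s >= threshold for s in scores]
    return [s <= threshold for s in scores]


# Made-up scores for the same ten applicants (illustrative only).
# Option 1: resemblance to the historical best (higher is better).
best_scores = [0.91, 0.15, 0.40, 0.35, 0.08, 0.55, 0.30, 0.72, 0.45, 0.05]
# Option 2: resemblance to the historical worst (lower is better).
worst_scores = [0.03, 0.20, 0.10, 0.85, 0.25, 0.07, 0.12, 0.05, 0.90, 0.95]

ADVANCE_RATE = 0.70  # assumed value inside the 60-80% range

t1 = threshold_for_advance_rate(best_scores, ADVANCE_RATE, higher_is_better=True)
option_1 = screen(best_scores, t1, higher_is_better=True)

t2 = threshold_for_advance_rate(worst_scores, ADVANCE_RATE, higher_is_better=False)
option_2 = screen(worst_scores, t2, higher_is_better=False)

# Applicants (by position) whose outcome differs between the two options.
changed = [i for i, (a, b) in enumerate(zip(option_1, option_2)) if a != b]
print(sum(option_1), sum(option_2), changed)
```

Both options advance the same number of applicants in this toy data, but not the same applicants. Note that tied scores at the cutoff would let slightly more than the requested share through.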

Photo credit: https://memegenerator.net/Cats-In-A-Line

Tip: Try Both in DataRobot

In DataRobot, pursuing Option 1 or Option 2 requires a different target variable column, but all the other data can be exactly the same. Since DataRobot makes model development so quick and easy, there is no reason not to try both approaches. You might be surprised to find that the same individuals receive very different scores under the two options. This makes intuitive sense when you think about your own job skills and the extent to which they would match a recruiter’s ‘best’ criteria versus not match the recruiter’s ‘worst’ criteria.
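As a rough illustration of "same data, different target column," the sketch below derives one binary target per option from a single historical outcome field. Everything here is an assumption made for the example: the records, the field names (`outcome`, `target_best`, `target_worst`), and the outcome labels are hypothetical, and how to define the target properly is a design question in its own right.

```python
# Hypothetical historical records; field names and outcome labels are illustrative.
applicants = [
    {"applicant_id": 1, "years_experience": 6, "outcome": "top_performer"},
    {"applicant_id": 2, "years_experience": 2, "outcome": "average"},
    {"applicant_id": 3, "years_experience": 1, "outcome": "poor_fit"},
    {"applicant_id": 4, "years_experience": 4, "outcome": "average"},
]


def add_targets(rows, best_label="top_performer", worst_label="poor_fit"):
    """Return copies of the rows with one binary target column per option."""
    labeled = []
    for row in rows:
        new_row = dict(row)
        # Option 1 target: 1 if the applicant was among the best of the best.
        new_row["target_best"] = int(row["outcome"] == best_label)
        # Option 2 target: 1 if the applicant was among the worst of the worst.
        new_row["target_worst"] = int(row["outcome"] == worst_label)
        labeled.append(new_row)
    return labeled


labeled = add_targets(applicants)

# The middle group is positive for neither target.
middle = [
    r["applicant_id"]
    for r in labeled
    if r["target_best"] == 0 and r["target_worst"] == 0
]
print(middle)
```

When training either model, the raw `outcome` field and the other option's target column should be left out of the features so they do not leak the answer.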

Let’s apply this idea to a job position for content writers. While a best-of-the-best, screen-in algorithm might value extensive use of literary devices such as metaphor and dramatic irony, a worst-of-the-worst, screen-out algorithm would remove applicants with poor grammar and spelling.

As a data scientist who occasionally likes to write, I would probably not score well on the algorithm trained to find the best writers; however, I would probably also not match the characteristics of the worst writers. With the screen-out approach, I’d have a shot at sharing my diverse content knowledge even though I’m not a top writer.

Up Next

This is the first post in a series on designing recruiting algorithms for HR. Future topics include designing the target, the choice of initial training data, common pitfalls when retraining, model blindspots, and ideas for improving baseline model performance.

In the next post, I dive deeply into designing the target variable. Most organizations select ‘was the applicant hired’ as the target of their binary classification model without considering the other options or implications.
