In our last blog post on this subject, we built a machine learning model to predict whether a pitch will be called a strike and used it to evaluate how well major league catchers frame pitches. In closing, we mentioned that there was a problem with using logistic regression for this model.

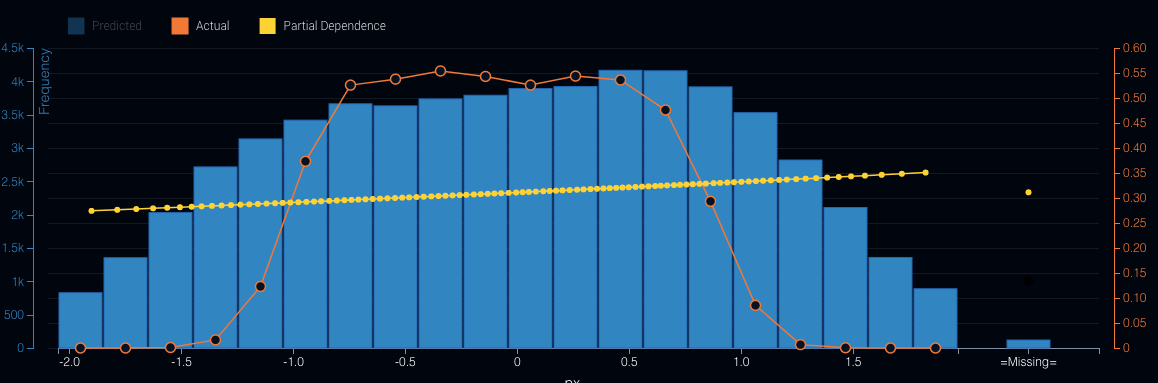

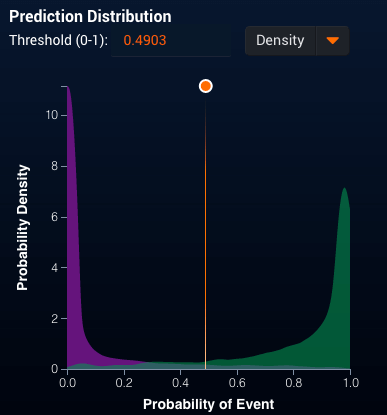

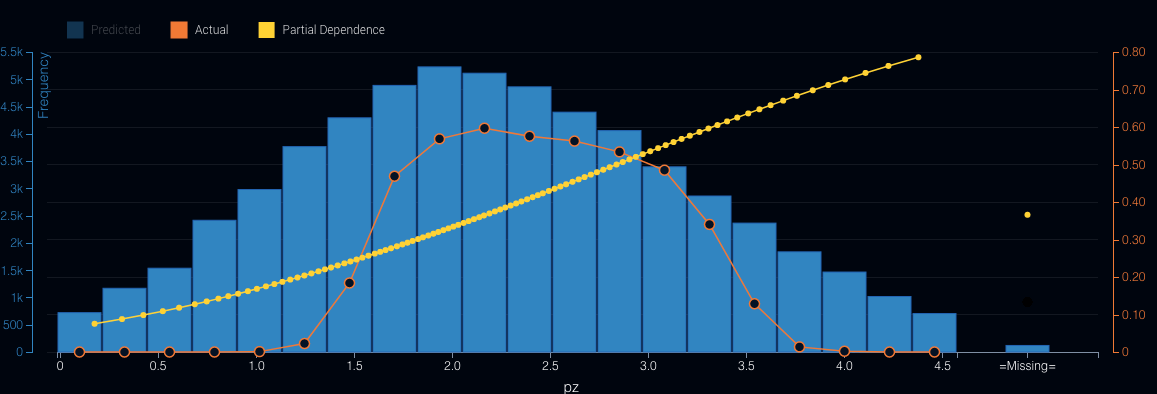

The problem is that several of the independent variables (in particular, where the pitch crosses home plate, both horizontally and vertically) have a nonlinear relationship with the target. We can see this clearly by plotting the actual probability that a pitch is called a strike (the orange line in Figure 1) against its horizontal location in the strike zone, and comparing it with the model's partial dependence, which shows how the model's predicted strike probability changes with where the pitch crosses the strike zone.

Figure 1: Horizontal Pitch Location

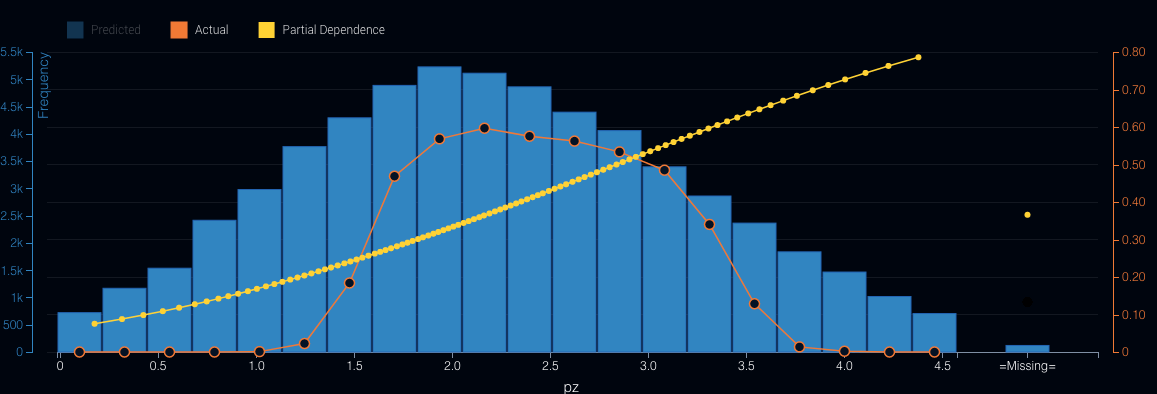

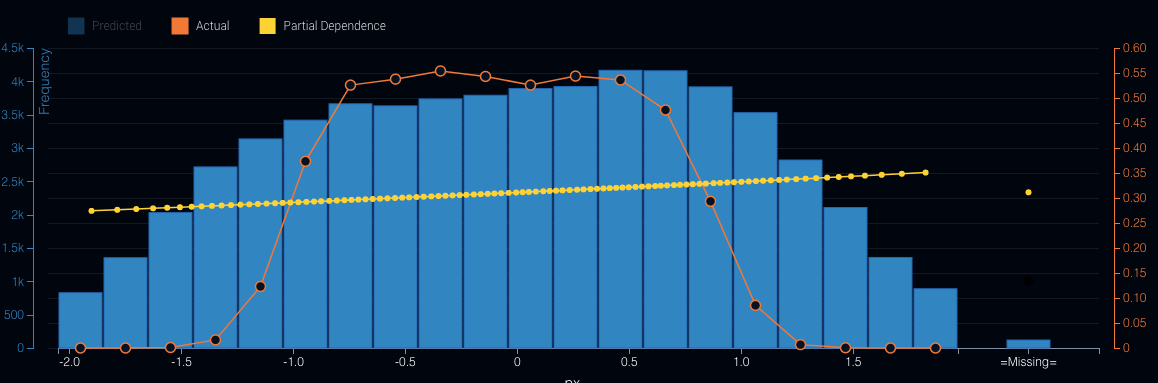

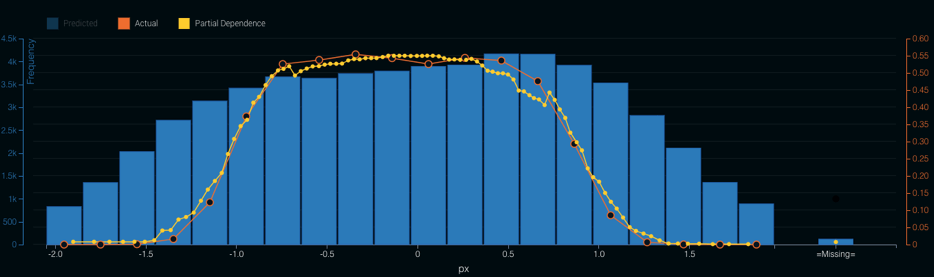

Similarly, we can see this for the vertical location of the pitch as it crosses the strike zone (see Figure 2).

Figure 2: Vertical Pitch Location


As we can see in both cases, the model fails to capture the underlying shape of the strike zone. In Figure 2, for example, the model keeps increasing the probability of a strike as the pitch's location rises above the top of the strike zone.
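To make this comparison concrete, here is a minimal sketch on simulated data (not the 2017 pitch data). It bins pitches by horizontal location to get the observed strike rate, then computes a one-dimensional partial dependence for a logistic regression by forcing every pitch to each grid value and averaging the predicted probabilities. The column layout (`plate_x`, `plate_z`, in feet) and the box-shaped "true" zone inside `simulate_pitches` are assumptions made for illustration.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def simulate_pitches(n=20_000, seed=0):
    """Simulated called pitches; columns are plate_x and plate_z in feet (assumed layout)."""
    rng = np.random.default_rng(seed)
    plate_x = rng.uniform(-2.0, 2.0, n)  # horizontal offset from the middle of the plate
    plate_z = rng.uniform(0.5, 4.5, n)   # height above the ground
    # Assumed "true" zone: roughly |x| < 0.83 ft and 1.5 ft < z < 3.5 ft, with soft edges
    in_x = 1.0 / (1.0 + np.exp((np.abs(plate_x) - 0.83) / 0.08))
    in_z = 1.0 / (1.0 + np.exp((np.abs(plate_z - 2.5) - 1.0) / 0.08))
    strike = (rng.random(n) < in_x * in_z).astype(int)
    return np.column_stack([plate_x, plate_z]), strike


def empirical_rate(x, y, edges):
    """Observed strike rate within each bin of x."""
    idx = np.digitize(x, edges) - 1
    return np.array([y[idx == i].mean() for i in range(len(edges) - 1)])


def partial_dependence_1d(model, X, col, grid):
    """Average predicted strike probability with column `col` set to each grid value."""
    values = []
    for v in grid:
        X_mod = X.copy()
        X_mod[:, col] = v
        values.append(model.predict_proba(X_mod)[:, 1].mean())
    return np.array(values)


X, y = simulate_pitches()
edges = np.linspace(-2.0, 2.0, 17)
centers = (edges[:-1] + edges[1:]) / 2

logit = LogisticRegression().fit(X, y)
observed = empirical_rate(X[:, 0], y, edges)
pd_logit = partial_dependence_1d(logit, X, col=0, grid=centers)

for c, obs, pdv in zip(centers, observed, pd_logit):
    print(f"plate_x={c:+.3f}  observed={obs:.3f}  logistic PD={pdv:.3f}")
```

The observed rate rises and then falls across the plate, while the logistic partial dependence can only move in one direction, because the model's log-odds are linear in `plate_x`.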

There are a couple of ways to resolve this issue. The first is feature engineering: knowing the shape of the strike zone, we could create new features that better capture the actual relationship between pitch location and strike calls. In fact, DataRobot does this automatically for some of the linear models it builds.
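As a minimal sketch of that idea (not DataRobot's automated feature engineering), the code below simulates pitches with an assumed box-shaped zone and adds two hand-built features: the absolute horizontal distance from the middle of the plate and the absolute vertical distance from an assumed zone midpoint of 2.5 ft. It then compares held-out AUC for logistic regression with and without them. The `plate_x`/`plate_z` layout, the 2.5 ft midpoint, and the simulation are all assumptions; real strike zones vary by batter.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

ZONE_MID_Z = 2.5  # assumed vertical midpoint of the strike zone, in feet


def simulate_pitches(n=20_000, seed=0):
    """Simulated called pitches; columns are plate_x and plate_z in feet (assumed layout)."""
    rng = np.random.default_rng(seed)
    plate_x = rng.uniform(-2.0, 2.0, n)
    plate_z = rng.uniform(0.5, 4.5, n)
    # Assumed "true" zone with soft edges
    in_x = 1.0 / (1.0 + np.exp((np.abs(plate_x) - 0.83) / 0.08))
    in_z = 1.0 / (1.0 + np.exp((np.abs(plate_z - ZONE_MID_Z) - 1.0) / 0.08))
    strike = (rng.random(n) < in_x * in_z).astype(int)
    return np.column_stack([plate_x, plate_z]), strike


def add_zone_features(X):
    """Append |plate_x| and |plate_z - ZONE_MID_Z|: distances from the zone's center."""
    dist_x = np.abs(X[:, 0])
    dist_z = np.abs(X[:, 1] - ZONE_MID_Z)
    return np.column_stack([X, dist_x, dist_z])


X, y = simulate_pitches()
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)

raw = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)
engineered = LogisticRegression(max_iter=1000).fit(add_zone_features(X_tr), y_tr)

auc_raw = roc_auc_score(y_te, raw.predict_proba(X_te)[:, 1])
auc_eng = roc_auc_score(y_te, engineered.predict_proba(add_zone_features(X_te))[:, 1])
print(f"raw location AUC: {auc_raw:.3f}   with zone-distance features: {auc_eng:.3f}")
```

The engineered model is still linear in its inputs; the distance features simply let a linear log-odds express "farther from the middle means less likely to be a strike" in every direction.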


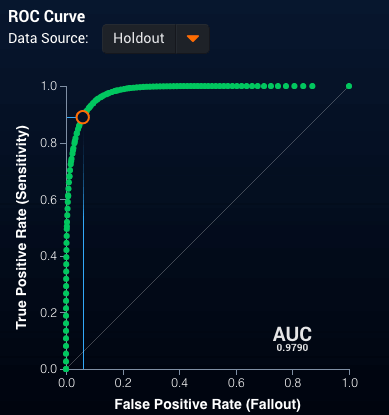

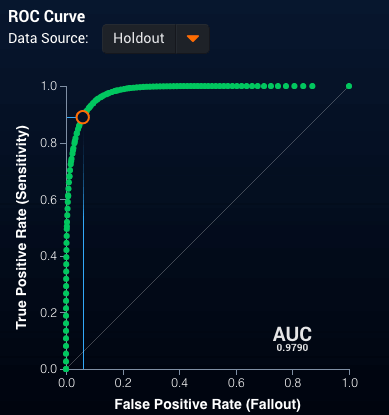

Alternatively, as we do in this blog post, we can use machine learning techniques that naturally capture this nonlinear behavior. Again using DataRobot, we built an Extreme Gradient Boosted Tree Classifier on the same data as in the last blog post. The result is an even better classifier, with an AUC of 0.9790 and a very good ROC curve (see Figure 3):

Figure 3: ROC Curve for Extreme Gradient Boosted Tree

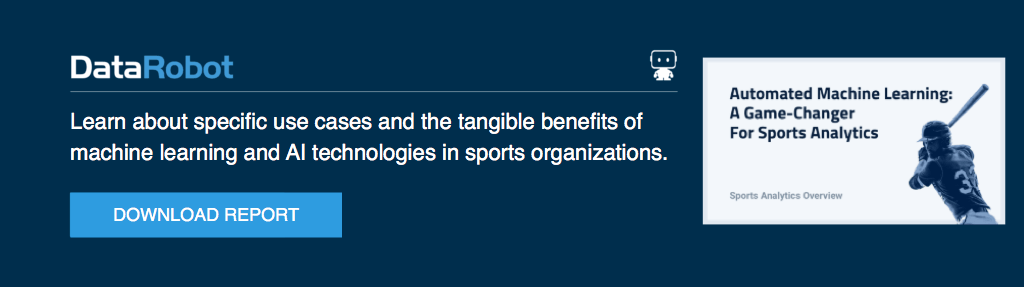

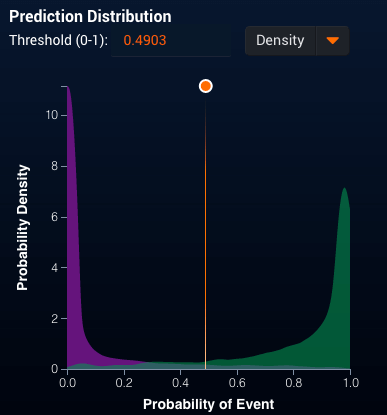

The model also shows a very strong separation between the predicted probabilities for pitches called strikes and those called balls (Figure 4):

Figure 4: Strike Probability Distribution


This plot does not show the same issues we saw with the logistic regression in the previous blog post. Even better, when we examine the partial dependence plot for the horizontal location where the pitch crosses the strike zone (see Figure 5), we see that the model captures the nonlinear behavior we expect.

Figure 5: Horizontal Pitch Location


Now we can follow the same procedure as in the last blog post: score the 2017 pitches, classify each pitch as a strike (if its predicted probability is above 0.5) or a ball (if it is below 0.5), and rank the catchers who saw at least 1,000 called pitches. Here are the top 10 catchers by pitches framed and runs saved:

And the bottom 10 catchers:
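The hard-classification tally can be sketched in pandas. This assumes a hypothetical table with one row per called pitch and columns `catcher`, `called_strike` (1 if the umpire called a strike) and `strike_prob` (the model's score); `RUN_VALUE_PER_STRIKE` is a placeholder, not the run value used in these posts. A pitch counts +1 when the model says ball but the umpire called a strike, and -1 for the reverse.

```python
import pandas as pd

RUN_VALUE_PER_STRIKE = 0.14  # placeholder runs per ball turned into a strike (hypothetical)


def framing_by_classification(pitches: pd.DataFrame, min_called: int = 1000,
                              run_value: float = RUN_VALUE_PER_STRIKE) -> pd.DataFrame:
    """Net framed pitches per catcher using a hard 0.5 threshold on the model's score."""
    predicted_strike = (pitches["strike_prob"] > 0.5).astype(int)
    # +1: predicted ball called a strike; -1: predicted strike called a ball
    framed = pitches["called_strike"] - predicted_strike
    summary = (
        pitches.assign(framed=framed)
        .groupby("catcher")
        .agg(called_pitches=("framed", "size"), pitches_framed=("framed", "sum"))
    )
    # Keep only catchers with enough called pitches, then value the framed pitches in runs
    summary = summary[summary["called_pitches"] >= min_called]
    summary = summary.assign(runs_saved=summary["pitches_framed"] * run_value)
    return summary.sort_values("runs_saved", ascending=False)
```

On the full table, `.head(10)` and `.tail(10)` of the result give the top and bottom of the list.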

Similarly, summing each pitch's strike probability to get the expected number of strikes, we get the following top 10 catchers:

And the bottom 10 catchers:
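With the expected-strikes approach, each pitch contributes its actual call minus the model's strike probability, so a borderline pitch that goes the catcher's way earns partial credit rather than a full strike. A minimal sketch, assuming a hypothetical table with columns `catcher`, `called_strike` and `strike_prob`, and a placeholder `RUN_VALUE_PER_STRIKE` that is not the value used in these posts:

```python
import pandas as pd

RUN_VALUE_PER_STRIKE = 0.14  # placeholder runs per extra strike (hypothetical)


def framing_by_expectation(pitches: pd.DataFrame, min_called: int = 1000,
                           run_value: float = RUN_VALUE_PER_STRIKE) -> pd.DataFrame:
    """Strikes above expectation per catcher: sum of (actual call - predicted probability)."""
    extra = pitches["called_strike"] - pitches["strike_prob"]
    summary = (
        pitches.assign(extra_strikes=extra)
        .groupby("catcher")
        .agg(
            called_pitches=("extra_strikes", "size"),
            actual_strikes=("called_strike", "sum"),
            expected_strikes=("strike_prob", "sum"),  # sum of probabilities
            strikes_framed=("extra_strikes", "sum"),
        )
    )
    summary = summary[summary["called_pitches"] >= min_called]
    summary = summary.assign(runs_saved=summary["strikes_framed"] * run_value)
    return summary.sort_values("runs_saved", ascending=False)
```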

We do see some minor changes in the catcher rankings due to the improved model. For example, while Austin Hedges is still a top pitch framer, his overall value drops. Meanwhile, Elias Diaz, the Pirates' rookie catcher, no longer appears at the bottom of the list. We can continue to improve our ability to measure the impact of catchers by controlling for more factors (much like Baseball Prospectus does), including the pitcher, umpire, etc.

For our next blog in this series, we will explore this improved model and consider an alternate approach using machine learning to evaluate the impact of the catchers on strike calls.

About the Author:

Andrew Engel is a Customer Facing Data Scientist at DataRobot. He works with DataRobot customers in a wide variety of industries, including several Major League Baseball teams. He has been working as a data scientist and leading teams of data scientists for over 10 years in a wide variety of domains from fraud prediction to marketing analytics. Andrew received his PhD in Systems and Industrial Engineering with a focus on optimization and stochastic modeling. He has worked for Towson University, SAS Institute, the US Navy, Websense (now ForcePoint), Stics, and HP before joining DataRobot in February of 2016.

